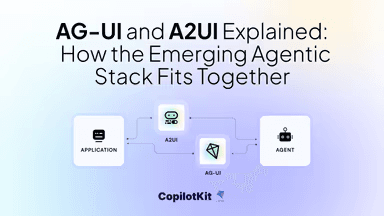

AG-UI and A2UI Explained: How the Emerging Agentic Stack Fits Together

It's difficult to keep up with everything taking shape in the AI space right now, but one thing is certain: it's moving at a lightning pace.

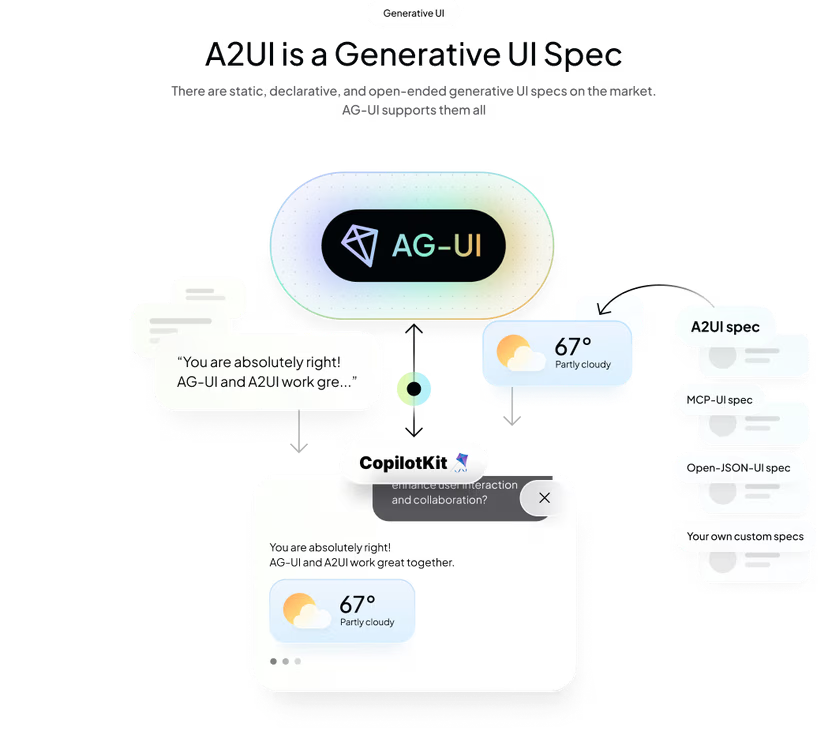

One major highlight is that Google will be releasing a protocol called A2UI. Although AG-UI and A2UI sound similar, they solve completely different, yet highly complementary, problems.

CopilotKit has been working closely with Google on the A2UI protocol, and we’ll be shipping full support when the A2UI spec launches. But before that happens, let’s break down how these pieces fit into the broader agentic landscape.

The Agentic Protocol Ecosystem Is Taking Shape

Across the industry, three open protocols are becoming foundational for agent-driven applications. Each sits at a distinct layer of the stack:

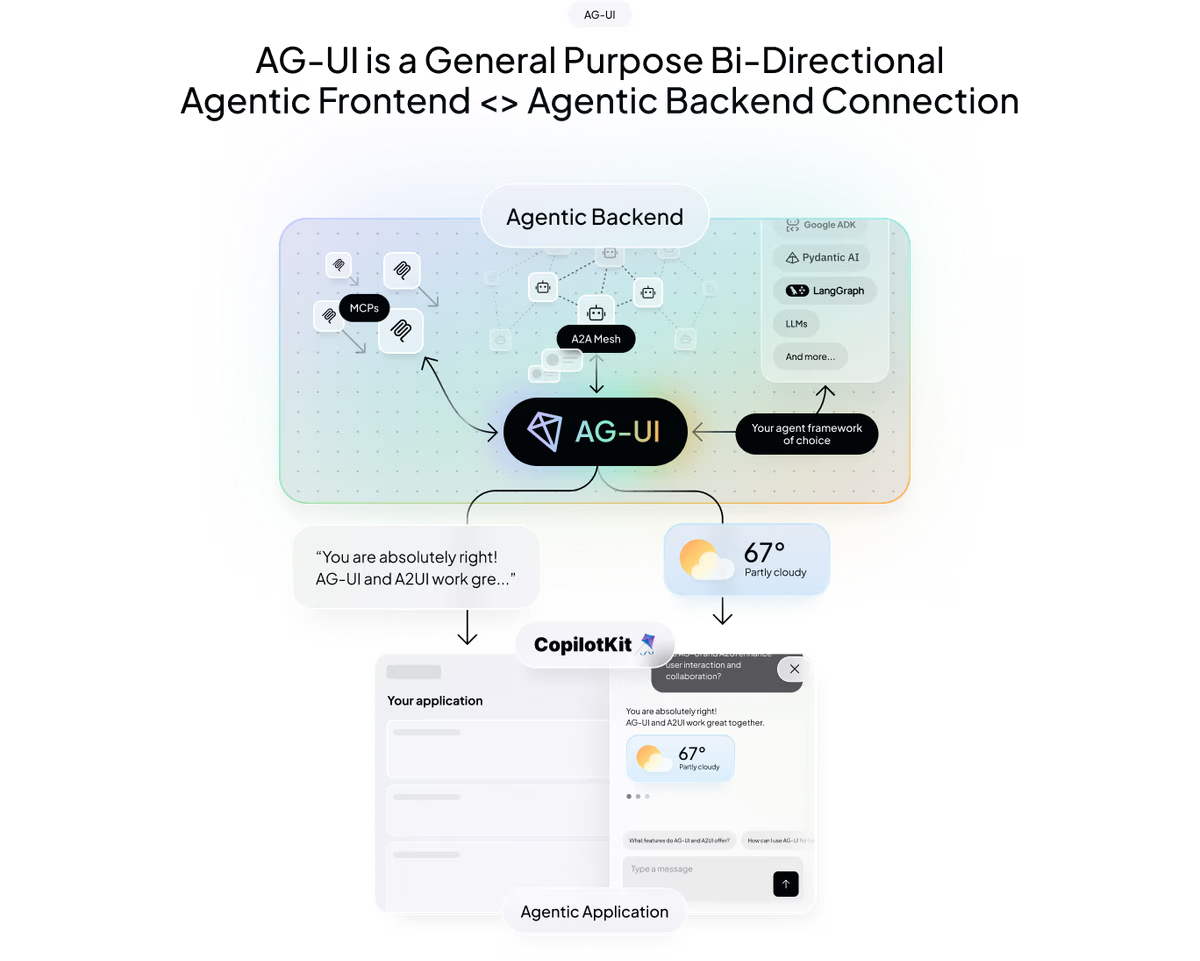

1. AG-UI — Agent ↔ UI Runtime (CopilotKit + ecosystem partners)

The general-purpose, bidirectional runtime connection between an agentic frontend and an agentic backend.

Think of AG-UI as the bridge that moves messages, events, and UI instructions reliably between the two.
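To make the "bridge" idea concrete, here is a minimal TypeScript sketch of the frontend side of such a connection: it folds a stream of events into UI state. The event names are loosely modeled on the kinds of events AG-UI streams (run lifecycle and incrementally streamed text messages), but the types, field names, and the `applyEvent` reducer are simplified, hypothetical stand-ins, not the official AG-UI SDK.

```typescript
// Simplified, hypothetical event shapes loosely modeled on AG-UI's event
// categories (run lifecycle + streamed text). Not the official SDK types.
type AgentEvent =
  | { type: "RUN_STARTED"; runId: string }
  | { type: "TEXT_MESSAGE_START"; messageId: string }
  | { type: "TEXT_MESSAGE_CONTENT"; messageId: string; delta: string }
  | { type: "TEXT_MESSAGE_END"; messageId: string }
  | { type: "RUN_FINISHED"; runId: string };

interface UiState {
  running: boolean;
  messages: Map<string, string>; // messageId -> text received so far
}

// Frontend-side reducer: folds one incoming event into a new UI state.
function applyEvent(state: UiState, event: AgentEvent): UiState {
  switch (event.type) {
    case "RUN_STARTED":
      return { ...state, running: true };
    case "TEXT_MESSAGE_START": {
      const messages = new Map(state.messages);
      messages.set(event.messageId, "");
      return { ...state, messages };
    }
    case "TEXT_MESSAGE_CONTENT": {
      // Append the streamed chunk so partial text can be rendered immediately.
      const messages = new Map(state.messages);
      messages.set(event.messageId, (messages.get(event.messageId) ?? "") + event.delta);
      return { ...state, messages };
    }
    case "TEXT_MESSAGE_END":
      return state; // message complete; nothing else to track in this sketch
    case "RUN_FINISHED":
      return { ...state, running: false };
  }
}

// Example stream, as a backend might emit it over the connection.
const stream: AgentEvent[] = [
  { type: "RUN_STARTED", runId: "run-1" },
  { type: "TEXT_MESSAGE_START", messageId: "m1" },
  { type: "TEXT_MESSAGE_CONTENT", messageId: "m1", delta: "Hello, " },
  { type: "TEXT_MESSAGE_CONTENT", messageId: "m1", delta: "world" },
  { type: "TEXT_MESSAGE_END", messageId: "m1" },
  { type: "RUN_FINISHED", runId: "run-1" },
];

const initial: UiState = { running: false, messages: new Map() };
const finalState = stream.reduce(applyEvent, initial);
console.log(finalState.messages.get("m1")); // Hello, world
```

Because each text chunk arrives as its own event, a UI built this way can show partial output while the agent is still generating, rather than waiting for a complete response.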

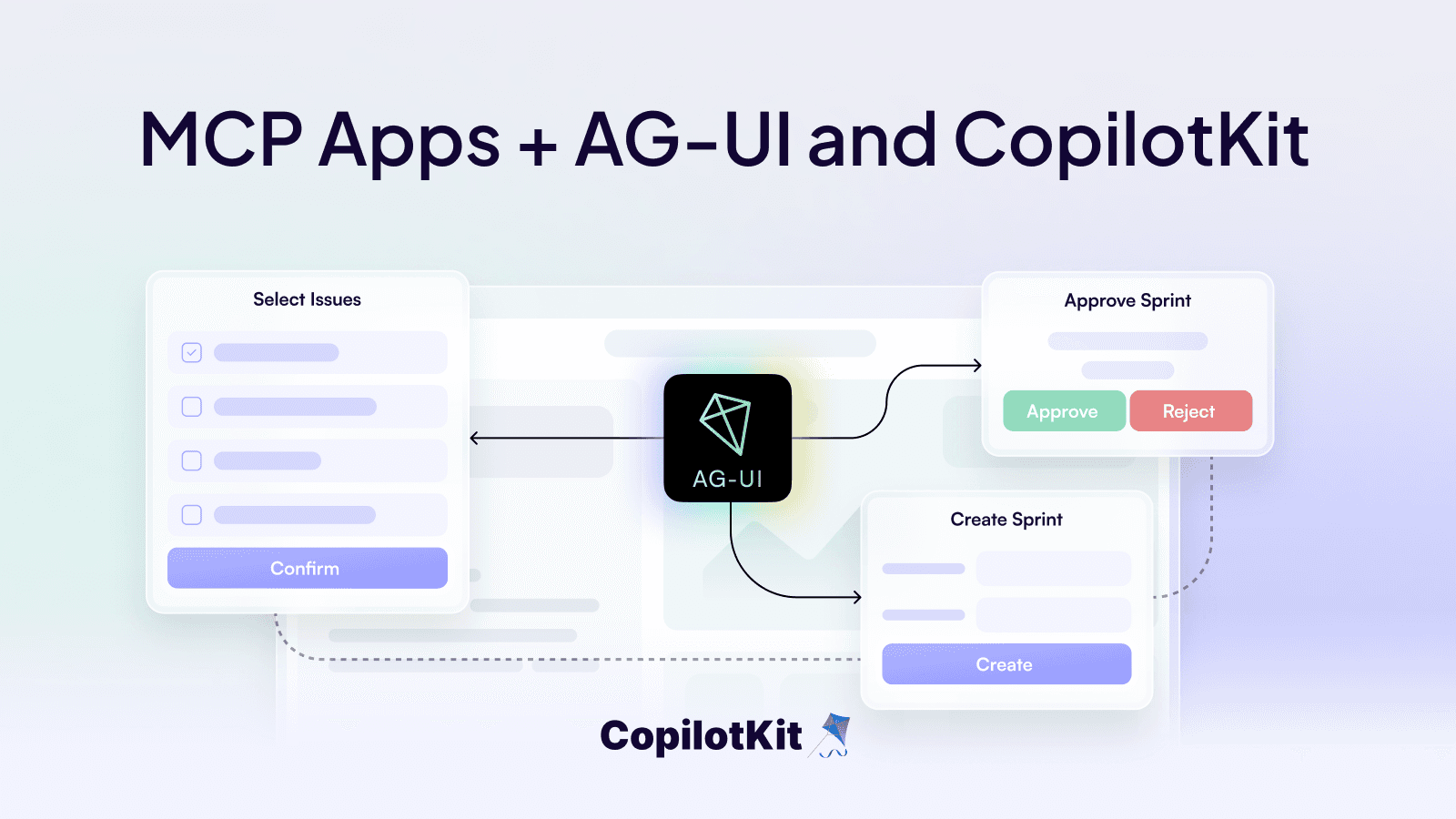

2. MCP — Model Context Protocol (Anthropic)

Originally designed to let agents connect to tools, though those tools are increasingly becoming agentic themselves. MCP has become a widely adopted standard for safely exposing capabilities to agents.

3. A2A — Agent-to-Agent Protocol (Google)

Designed so agents can communicate, delegate, and collaborate with other agents.

Even though these protocols were built by different groups, they form a complementary trio, each solving a different piece of the agentic puzzle.

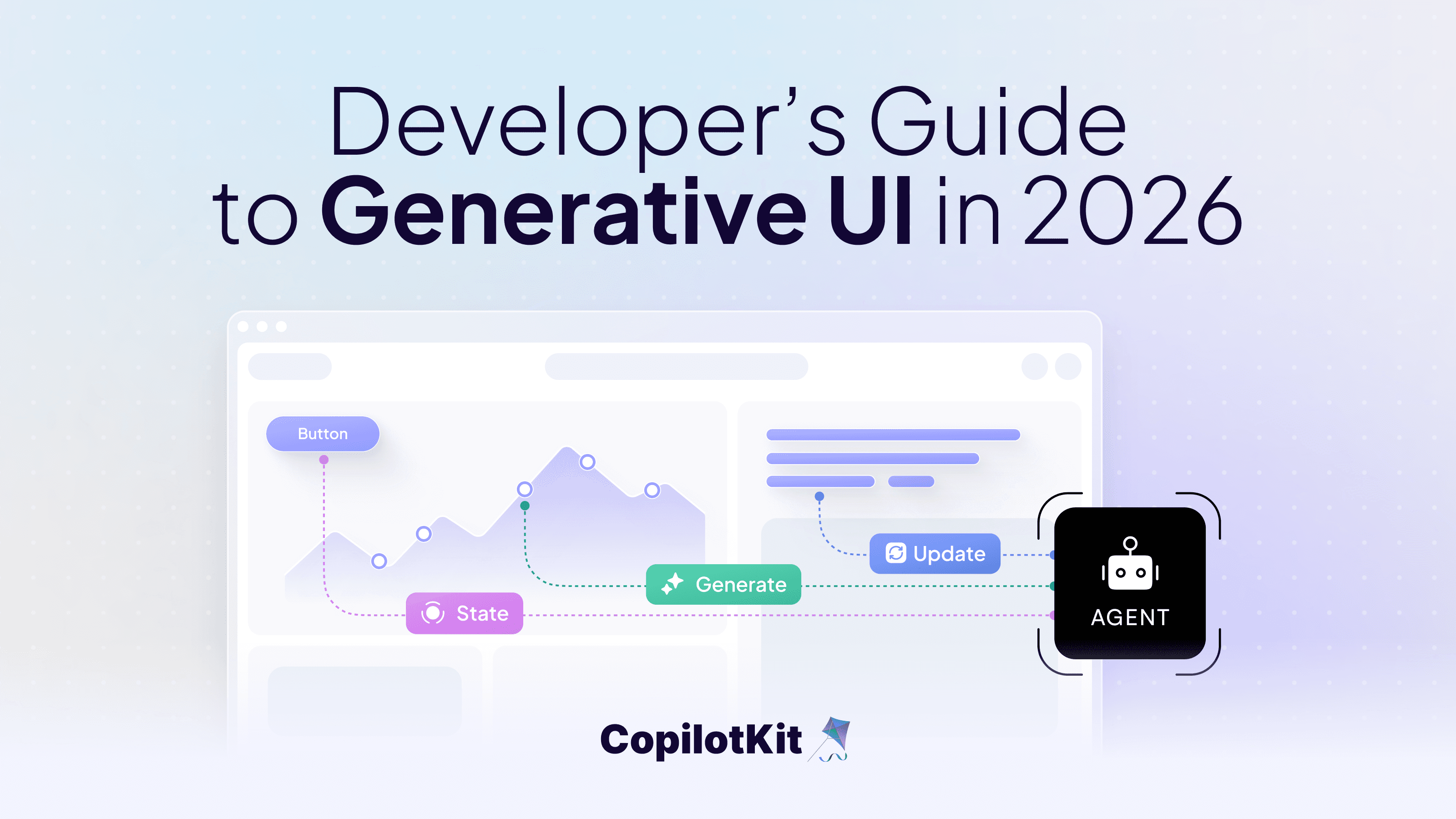

Generative UI Is the New Frontier

Alongside these protocols, a new family of Generative UI specifications is emerging. These specs let agents return UI, not just text, opening the door to fully dynamic, agent-generated interfaces.

The big ones:

A2UI (Google): a declarative generative UI spec, streamed as JSON and platform-agnostic.

Open-JSON-UI (OpenAI): an open standardization of OpenAI’s internal declarative UI schema.

MCP-Apps: an iframe-based standard for user-facing UIs inside the MCP ecosystem.

AG-UI Is Not a Generative UI Spec

Instead, AG-UI is the runtime layer that transports generative UI instructions, whether they come from A2UI, Open-JSON-UI, MCP-UI, or a custom format you define yourself.

This distinction is key:

- A2UI / MCP-UI / Open-JSON-UI → What UI the agent wants to show

- AG-UI → How that UI is delivered between backend ↔ frontend
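The "what" versus "how" split can be illustrated with a small, hypothetical TypeScript sketch: the transport carries a payload tagged with the spec it follows, and the frontend picks a renderer by that tag without the transport ever parsing the payload. The `UI_PAYLOAD` event name, the format labels, `UiRegistry`, and the toy `my-custom-ui` schema are all assumptions made for illustration; they are not part of AG-UI, A2UI, or any other published spec, and no real A2UI renderer is implemented here.

```typescript
// Hypothetical transport envelope: the transport only knows that a UI payload
// arrived and which spec it claims to follow. It never inspects the payload.
interface UiEnvelope {
  type: "UI_PAYLOAD"; // illustrative event name, not an official AG-UI event type
  format: string;     // spec label, e.g. "a2ui" or "my-custom-ui" (labels are assumptions)
  payload: unknown;   // spec-specific document, opaque to the transport
}

// A renderer turns one spec's payload into something displayable
// (a string here, to keep the sketch framework-free).
type Renderer = (payload: unknown) => string;

class UiRegistry {
  private renderers = new Map<string, Renderer>();

  register(format: string, renderer: Renderer): void {
    this.renderers.set(format, renderer);
  }

  // The envelope answers "how it got here"; the matching renderer answers "what to show".
  render(envelope: UiEnvelope): string {
    const renderer = this.renderers.get(envelope.format);
    return renderer ? renderer(envelope.payload) : `[unsupported UI format: ${envelope.format}]`;
  }
}

// Toy renderer for a made-up schema: { component: string; title: string }.
const registry = new UiRegistry();
registry.register("my-custom-ui", (payload) => {
  const p = payload as { component: string; title: string };
  return `<${p.component}>${p.title}</${p.component}>`;
});

const rendered = registry.render({
  type: "UI_PAYLOAD",
  format: "my-custom-ui",
  payload: { component: "card", title: "Flight booked" },
});
const fallback = registry.render({ type: "UI_PAYLOAD", format: "a2ui", payload: {} });

console.log(rendered); // <card>Flight booked</card>
console.log(fallback); // [unsupported UI format: a2ui]
```

With this separation, supporting another spec means registering one more renderer; the transport code stays the same.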

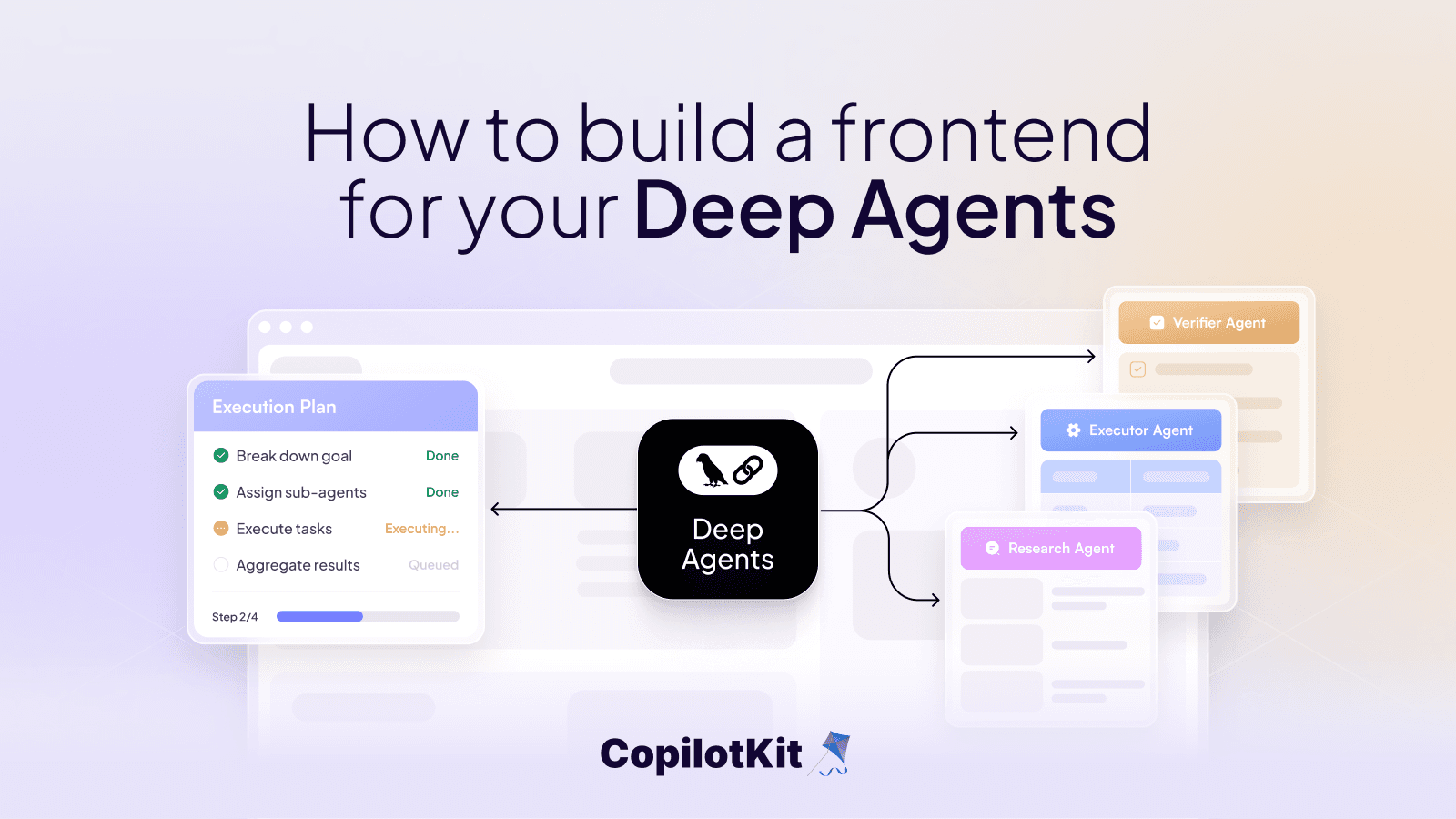

AG-UI Protocol: The Bridge Between Agents and Users

AG-UI’s purpose is simple:

Connect any agentic backend to any agentic frontend reliably, bidirectionally, and with full event transparency.

AG-UI is pragmatic, built around real-world developer needs. It supports:

- live event streaming

- multi-agent interactions

- long-running agents

- agent reconnection

- full command/event lifecycles

- custom generative UI schemas
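Long-running agents and reconnection tend to go together: if a connection drops mid-run, the client needs to catch up without losing or duplicating events. One common way to handle this, shown below as a hypothetical TypeScript sketch, is to number events per run and have the client resume from the last sequence number it saw. `RunLog`, `ResumingClient`, and the `seq` field are illustrative names; this is not a description of AG-UI's actual reconnection mechanism.

```typescript
// Hypothetical event with a per-run sequence number (an assumption of this sketch).
interface SequencedEvent {
  seq: number;
  type: string;
  data?: string;
}

// Hypothetical server-side log for one long-running agent run.
class RunLog {
  private events: SequencedEvent[] = [];

  append(type: string, data?: string): SequencedEvent {
    const event = { seq: this.events.length + 1, type, data };
    this.events.push(event);
    return event;
  }

  // On reconnect, the client reports the last seq it saw; replay everything after it.
  since(lastSeenSeq: number): SequencedEvent[] {
    return this.events.filter((e) => e.seq > lastSeenSeq);
  }
}

// Client that remembers its position, so a dropped connection loses nothing.
class ResumingClient {
  lastSeenSeq = 0;
  received: SequencedEvent[] = [];

  consume(events: SequencedEvent[]): void {
    for (const e of events) {
      if (e.seq <= this.lastSeenSeq) continue; // skip duplicates from overlapping replays
      this.received.push(e);
      this.lastSeenSeq = e.seq;
    }
  }
}

const log = new RunLog();
const client = new ResumingClient();
client.consume([log.append("RUN_STARTED"), log.append("STEP", "searching")]);

// The connection drops here while the agent keeps working.
log.append("STEP", "summarizing");
log.append("RUN_FINISHED");

// Reconnect and request only the events that were missed.
client.consume(log.since(client.lastSeenSeq));
console.log(client.received.map((e) => e.type).join(",")); // RUN_STARTED,STEP,STEP,RUN_FINISHED
```

Tracking the last-seen position on the client keeps the server stateless about individual connections: any reconnect, from any tab or network, can resume from a single number.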

And because AG-UI integrates handshakes for MCP and A2A, UI instructions from subagents, even deeply nested ones, can be safely propagated all the way up to the user’s application.

This ecosystem-level interoperability is one reason AG-UI is quickly becoming a foundation for production agentic apps.
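Propagating UI instructions from nested subagents up to the application can be sketched as callback wrapping: each agent forwards its children's UI instructions to its own parent, recording the path they traveled. This is a hypothetical TypeScript illustration; `AgentNode`, `UiInstruction`, and the agent and component names are invented for the example, and it does not model the MCP or A2A handshakes themselves.

```typescript
// Hypothetical UI instruction emitted somewhere in an agent tree.
interface UiInstruction {
  path: string[]; // agents the instruction passed through, outermost first
  component: string;
}

type Emit = (ui: UiInstruction) => void;

// Hypothetical agent node: emits its own UI and re-emits its subagents' UI
// upward, prefixing its name so the app can tell where each instruction originated.
class AgentNode {
  readonly name: string;
  private readonly children: AgentNode[];
  private readonly ownUi: string[];

  constructor(name: string, children: AgentNode[] = [], ownUi: string[] = []) {
    this.name = name;
    this.children = children;
    this.ownUi = ownUi;
  }

  run(emit: Emit): void {
    for (const component of this.ownUi) {
      emit({ path: [this.name], component });
    }
    for (const child of this.children) {
      // Wrap the parent's emit: prepend this node's name to the child's path.
      child.run((ui) => emit({ ...ui, path: [this.name, ...ui.path] }));
    }
  }
}

const flights = new AgentNode("flight-search", [], ["FlightResultsTable"]);
const travel = new AgentNode("travel-planner", [flights], ["ItineraryCard"]);
const root = new AgentNode("orchestrator", [travel]);

// The application only talks to the root, yet receives UI from every level.
const received: UiInstruction[] = [];
root.run((ui) => received.push(ui));
for (const ui of received) console.log(`${ui.path.join(" > ")}: ${ui.component}`);
// orchestrator > travel-planner: ItineraryCard
// orchestrator > travel-planner > flight-search: FlightResultsTable
```

Keeping the full path on each instruction lets the frontend decide, for example, whether to render a deeply nested subagent's UI inline or group it under its parent.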

Mixing and Matching: The Reality for Developers

The future won’t be one “winner.”

Developers will mix:

- AG-UI for frontend ↔ backend runtime

- MCP for tools

- A2A for orchestrating subagents

- A2UI (or other generative UI specs) for declarative UI

- Custom schemas for special use-cases

CopilotKit embraces that future.

Our framework lets developers connect to any of these protocols or specs, individually or together. Whether your application uses agents that speak MCP, A2A, or a custom internal protocol, AG-UI ensures everything plays nicely inside a unified frontend experience.

CopilotKit: The Agentic Application Framework

AG-UI, MCP, and A2A solve different layers, but developers still need a higher-level framework that ties everything together.

That’s where CopilotKit sits:

- above the protocols

- above the generative UI specs

- providing the full stack for building real agentic applications

CopilotKit gives developers:

- agent runtime + orchestration

- frontend components

- backend integrations

- observability

- security & policy enforcement

- production infra

- cloud-hosted experience or open-source self-hosting

In other words:

CopilotKit is to agentic apps what React was to component-based UIs: a unified, opinionated layer that abstracts away fragmentation while staying open to the ecosystem.

And with full A2UI support landing soon, CopilotKit apps will be able to natively render declarative UI generated by agents, alongside everything else AG-UI already supports.

To recap: even though AG-UI and A2UI sound similar, they solve completely different, yet highly complementary, problems:

- A2UI defines what UI the agent wants to display.

- AG-UI defines how the UI (and everything else) flows between the backend and the frontend.

Together with MCP and A2A, these pieces form the foundation of the next-generation agentic application stack — one that is interoperable, open, and rapidly maturing.

And CopilotKit is committed to supporting the entire ecosystem, not replacing it.

Happy building!