In the past two years, powerful AI agents have entered the market. Tools like GitHub Copilot, Cursor, and Notion AI are already helping professionals write code faster, brainstorm content, and automate work. These are user-facing AI agents that collaborate with people in real time.

GitHub Copilot, for example, is an AI coding assistant that suggests code as you type. At the same time, AI agents are moving into production at major companies, but many of those early deployments ran mostly in the background (batch jobs, nightly reports). In other words, many first-generation AI systems were powerful automation engines that lacked a two-way conversation with users.

AI agents are continuously getting smarter and can work on complex tasks (data migrations, report generation, ticket triage, etc.). These agents boost productivity, but they traditionally run invisibly: an agent would crank through data and output a result without ever asking the user a question or showing intermediate steps.

At CopilotKit, we saw that gap as a last-mile problem.

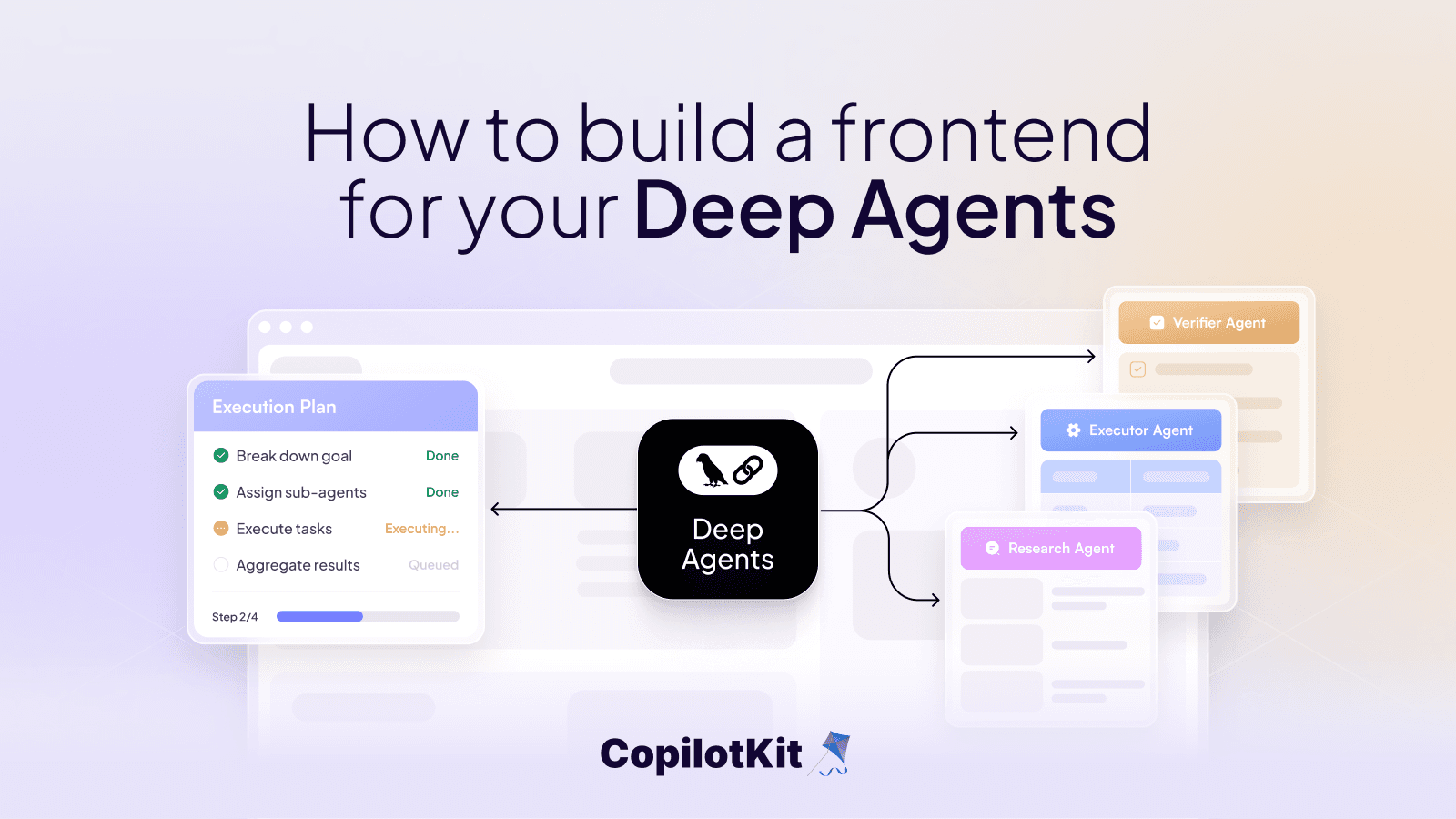

For many of the most important use cases, agents are most helpful when they work alongside users: users can see what the agent is doing, co-work on the same output, and iterate together in a shared workspace.

But up to this point, teams tried to fill this gap with one-off code. One project might wire up a WebSocket to stream tokens; another might poll an API for JSON patches. The result was brittle: each UI integration was custom and hard to replicate. The agent community observed that the agent-to-UI layer had become a patchwork of custom WebSocket formats, JSON hacks, and prompt-engineering tricks.

No two agents spoke the same language to their user interfaces, so every integration felt like reinventing the wheel. We knew there had to be a better way, a standard interface for interactive AI agents.

Introducing AG-UI: A Universal Agent–UI Protocol

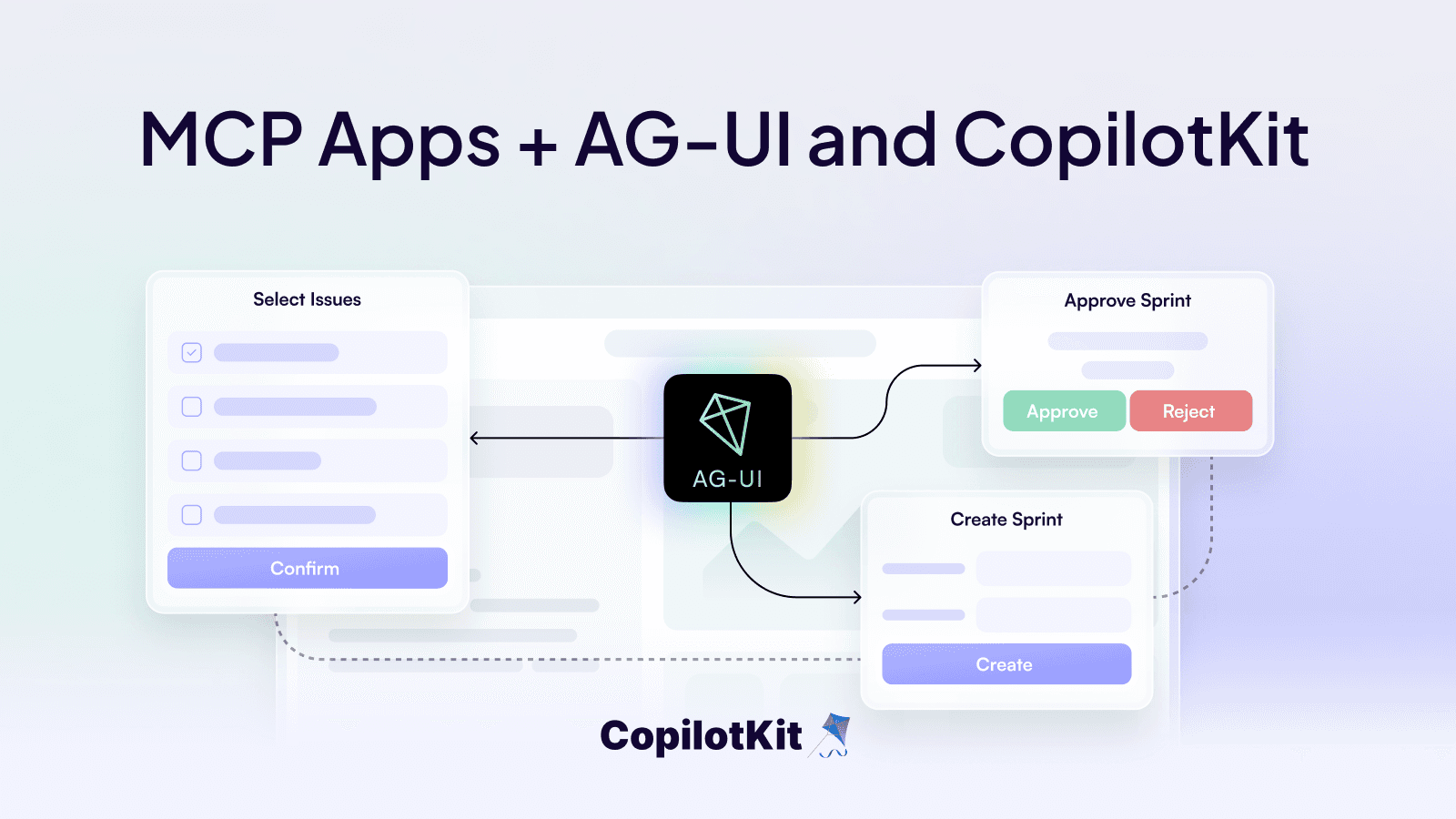

AG-UI Pattern

That’s why we created AG-UI (Agent-User Interaction). AG-UI is an open, lightweight protocol that defines a standard bridge between any AI agent on the back end and any UI on the front end.

Think of it as a universal translator: no matter what tools or models an agent uses, AG-UI provides a common, structured stream of messages so the interface always knows what’s going on. In the specification's terms, AG-UI streams JSON events between agents and interfaces.

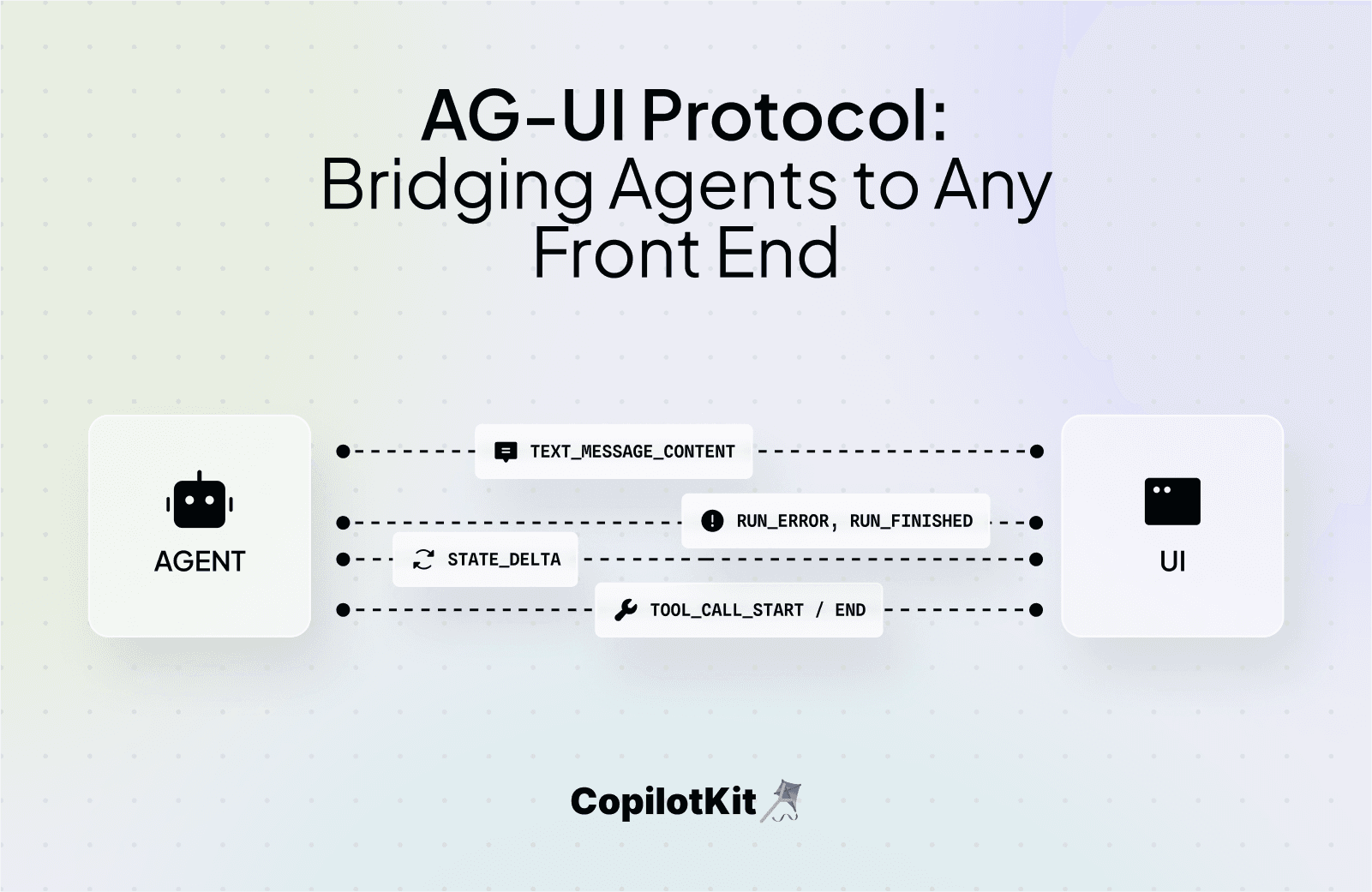

How does it work in plain terms? The front-end application sends the agent a single HTTP POST request (carrying the user’s prompt or current state) and then listens to a Server-Sent Events (SSE) stream from that endpoint. As the agent runs, it emits typed events that describe its actions.
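Because that response is a standard SSE stream, the client has to split the raw text into individual events. The sketch below is a minimal, hedged TypeScript parser for illustration only: it assumes each AG-UI event arrives as JSON in one or more `data:` lines followed by a blank line (standard SSE framing), it ignores other SSE fields such as `event:` and `id:`, and the class name `SseJsonParser` is our own, not part of any AG-UI SDK.

```typescript
// Minimal incremental parser for a text/event-stream body (sketch).
// Assumes each event's payload is JSON carried in one or more "data:" lines.
class SseJsonParser {
  private buffer = "";

  // Feed one decoded text chunk; returns the events it completed.
  push(chunk: string): unknown[] {
    // Normalize CRLF line endings so events are always separated by "\n\n".
    this.buffer = (this.buffer + chunk).replace(/\r\n/g, "\n");
    const events: unknown[] = [];
    let boundary = this.buffer.indexOf("\n\n");
    while (boundary !== -1) {
      const rawEvent = this.buffer.slice(0, boundary);
      this.buffer = this.buffer.slice(boundary + 2);
      // Keep only data lines; comment lines (":") and other fields are ignored.
      const data = rawEvent
        .split("\n")
        .filter((line) => line.startsWith("data:"))
        .map((line) => line.slice(5).replace(/^ /, ""))
        .join("\n");
      if (data !== "") events.push(JSON.parse(data));
      boundary = this.buffer.indexOf("\n\n");
    }
    // Whatever remains in the buffer is an incomplete event; wait for more.
    return events;
  }
}
```

In a browser you would feed it text decoded with `TextDecoder` from the chunks of `response.body`. The AG-UI SDKs include their own client, so treat this as an illustration of the wire format rather than a replacement for it.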

For example, when the agent produces words, it sends a TEXT_MESSAGE_CONTENT event with that snippet of reply; when it calls an external function or API, it emits TOOL_CALL_START and TOOL_CALL_END; when it updates the application state, it sends a STATE_DELTA patch.
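On the client, these events map naturally onto a TypeScript discriminated union, plus a guard that checks untrusted JSON before the UI acts on it. This is a hedged sketch: it covers only the event types named in this article plus the run lifecycle, field names such as `messageId`, `delta`, and `toolCallName` follow our reading of the AG-UI docs and should be checked against the spec, and `isAgUiEvent` is a hypothetical helper rather than an SDK function.

```typescript
// A subset of AG-UI event types modeled as a discriminated union (sketch).
type AgUiEvent =
  | { type: "RUN_STARTED"; threadId: string; runId: string }
  | { type: "TEXT_MESSAGE_CONTENT"; messageId: string; delta: string }
  | { type: "TOOL_CALL_START"; toolCallId: string; toolCallName: string }
  | { type: "TOOL_CALL_END"; toolCallId: string }
  | { type: "STATE_DELTA"; delta: unknown[] }
  | { type: "RUN_FINISHED"; threadId: string; runId: string }
  | { type: "RUN_ERROR"; message: string };

// Required string fields for each event type in this sketch.
const requiredStrings: Record<AgUiEvent["type"], string[]> = {
  RUN_STARTED: ["threadId", "runId"],
  TEXT_MESSAGE_CONTENT: ["messageId", "delta"],
  TOOL_CALL_START: ["toolCallId", "toolCallName"],
  TOOL_CALL_END: ["toolCallId"],
  STATE_DELTA: [],
  RUN_FINISHED: ["threadId", "runId"],
  RUN_ERROR: ["message"],
};

// Type guard: true only if `value` looks like one of the events above.
function isAgUiEvent(value: unknown): value is AgUiEvent {
  if (typeof value !== "object" || value === null) return false;
  const obj = value as Record<string, unknown>;
  // hasOwnProperty avoids matching inherited keys such as "toString".
  if (typeof obj.type !== "string" || !Object.prototype.hasOwnProperty.call(requiredStrings, obj.type)) {
    return false;
  }
  const fields = requiredStrings[obj.type as AgUiEvent["type"]];
  if (!fields.every((field) => typeof obj[field] === "string")) return false;
  // STATE_DELTA carries an array of patch operations instead of strings.
  return obj.type !== "STATE_DELTA" || Array.isArray(obj.delta);
}
```

Once the guard succeeds, narrowing on `type` lets the compiler know, for example, that a `TEXT_MESSAGE_CONTENT` event has a `delta` string.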

The front end simply parses each JSON event and reacts. Text events append words to a chat or editor; tool-call events show results when ready; state-delta events update tables or UI widgets.
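Appending streamed text typically comes down to a small pure function keyed by message ID. This sketch assumes each text event carries a `messageId` (plus a `delta` string for content chunks) and that start and end events bracket each message, which is how we understand AG-UI's text events to work; `ChatMessage` and `applyTextEvent` are illustrative names, not SDK types.

```typescript
// Text events assumed to bracket and fill a single assistant message.
type TextEvent =
  | { type: "TEXT_MESSAGE_START"; messageId: string }
  | { type: "TEXT_MESSAGE_CONTENT"; messageId: string; delta: string }
  | { type: "TEXT_MESSAGE_END"; messageId: string };

interface ChatMessage {
  id: string;
  text: string;
  streaming: boolean; // true until the end event arrives
}

// Pure reducer: returns a new message list with the event applied.
function applyTextEvent(messages: ChatMessage[], event: TextEvent): ChatMessage[] {
  switch (event.type) {
    case "TEXT_MESSAGE_START":
      // Open an empty message that content events will fill in.
      return [...messages, { id: event.messageId, text: "", streaming: true }];
    case "TEXT_MESSAGE_CONTENT":
      // Append the chunk to the matching message only.
      return messages.map((m) => (m.id === event.messageId ? { ...m, text: m.text + event.delta } : m));
    case "TEXT_MESSAGE_END":
      // Mark the message complete so the UI can stop its typing indicator.
      return messages.map((m) => (m.id === event.messageId ? { ...m, streaming: false } : m));
  }
}
```

Keeping the reducer pure makes it easy to plug into React state or any other store, and to test without a live agent.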

This makes the experience feel live and fluid: users see answers growing token by token and visualizations or data changing as soon as the agent produces them. The agent can even pause for user input mid-conversation, since AG-UI includes session and cancellation events to handle those handoffs. In short, AG-UI turns a single web endpoint into a rich, bidirectional interface.

Figure: Depiction of AI data flows between agents and interfaces. AG-UI streams structured events over HTTP/SSE so front ends and back ends stay in sync.

What AG-UI Supports

Out of the box, AG-UI covers all the core needs of an interactive agent:

- Live streaming output: as soon as the agent produces tokens, they flow to the UI in real time.

- Tool-call updates: when the agent invokes an external API or function, it can immediately send progress or partial results.

- Incremental state diffs: STATE_DELTA events carry only what changed (e.g. new rows in a table or edits in a document), so the UI can merge updates efficiently.

- Error and lifecycle events: timeouts, errors, or “done” signals are explicit events, letting the interface handle them gracefully without guesswork.

- Multi-agent handoffs: if one agent finishes a task and another should start, the transition can be streamed on the same channel, keeping the user informed at each step.
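To make incremental diffs concrete: the AG-UI docs describe STATE_DELTA payloads as JSON Patch (RFC 6902) operations addressed by JSON Pointer (RFC 6901) paths. The sketch below implements only the `add`, `replace`, and `remove` operations with minimal validation (no `move`, `copy`, or `test`); `applyStateDelta` is a hypothetical helper, and production code would more likely use a tested JSON Patch library.

```typescript
type PatchOp =
  | { op: "add" | "replace"; path: string; value: unknown }
  | { op: "remove"; path: string };

// Decode a JSON Pointer such as "/rows/0/name" into path segments.
function parsePointer(path: string): string[] {
  if (path === "") return [];
  if (!path.startsWith("/")) throw new Error(`Invalid pointer: ${path}`);
  return path.slice(1).split("/").map((s) => s.replace(/~1/g, "/").replace(/~0/g, "~"));
}

// Apply a subset of JSON Patch to a deep copy, leaving the input untouched.
function applyStateDelta<T>(state: T, ops: PatchOp[]): T {
  let root: any = JSON.parse(JSON.stringify(state));
  for (const op of ops) {
    const segments = parsePointer(op.path);
    if (segments.length === 0) {
      // An empty path targets the whole document.
      if (op.op === "remove") throw new Error("Cannot remove the root");
      root = op.value;
      continue;
    }
    // Walk to the parent container of the target.
    let parent: any = root;
    for (const seg of segments.slice(0, -1)) {
      parent = Array.isArray(parent) ? parent[Number(seg)] : parent[seg];
      if (parent === undefined || parent === null) throw new Error(`Missing path: ${op.path}`);
    }
    const key = segments[segments.length - 1];
    if (Array.isArray(parent)) {
      // "-" means "past the last element", i.e. append.
      const index = key === "-" ? parent.length : Number(key);
      if (op.op === "add") parent.splice(index, 0, op.value);
      else if (op.op === "replace") parent[index] = op.value;
      else parent.splice(index, 1);
    } else if (op.op === "remove") {
      delete parent[key];
    } else {
      parent[key] = op.value;
    }
  }
  return root as T;
}
```

Because each delta only touches the paths it names, a table with thousands of rows can grow by one row without the agent resending the whole table.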

Each event type has a well-defined JSON schema, so handling them is straightforward. For instance, a STATE_DELTA always carries a list of JSON Patch operations, eliminating the need for brittle string parsing. This structured approach also makes AG-UI highly debuggable: teams can log the full event stream and even replay a session end-to-end to see exactly how the agent responded at each step.
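Logging and replay need very little code because every event is already plain JSON. A minimal sketch, assuming a handler-per-event design on the client: `withRecording`, `toJsonLines`, and `replay` are hypothetical helpers, and JSON Lines is simply one convenient storage format, not something AG-UI prescribes.

```typescript
// Any AG-UI-style event: a "type" discriminant plus arbitrary fields.
type AnyEvent = { type: string; [field: string]: unknown };
type Handler = (event: AnyEvent) => void;

interface LogEntry {
  at: number; // timestamp (ms) when the event arrived
  event: AnyEvent;
}

// Wrap a handler so every event is appended to `log` before being handled.
function withRecording(handler: Handler, log: LogEntry[], now: () => number = Date.now): Handler {
  return (event) => {
    log.push({ at: now(), event });
    handler(event);
  };
}

// Serialize the session as JSON Lines: one log entry per line.
function toJsonLines(log: LogEntry[]): string {
  return log.map((entry) => JSON.stringify(entry)).join("\n");
}

// Feed a stored session back through a handler in its original order.
function replay(jsonLines: string, handler: Handler): number {
  const entries = jsonLines
    .split("\n")
    .filter((line) => line.trim() !== "")
    .map((line) => JSON.parse(line) as LogEntry);
  for (const entry of entries) handler(entry.event);
  return entries.length;
}
```

Replaying through the same handlers the live UI uses reproduces the session without calling the agent again, which is handy for bug reports and regression tests.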

What AG-UI Enables

By standardizing the agent–UI contract, AG-UI unlocks several key benefits:

- Interchangeable frontends: You can swap UIs freely. The same chat widget or dashboard can work with any AG-UI-compliant agent.

- Backend flexibility: Change models or toolsets without touching the UI. For example, you can switch between cloud LLMs (GPT-4) and local models (LLaMA) with no frontend changes.

- Multi-agent workflows: Coordinate specialized agents under one interface. A data analyst’s assistant could query one agent for insights and another for charts, all within the same dashboard.

- Faster development: Teams focus on experience, not plumbing. There’s no custom socket code or ad-hoc JSON to write: just implement the protocol and reuse existing components.

These capabilities turn every new AI feature into a composable component. AG-UI replaces custom WebSocket formats and text-parsing hacks with a single contract between agents and apps.

How AG-UI Works (In Plain Terms)

In practice, integrating AG-UI feels surprisingly simple. From the front-end perspective, your app basically does this:

- Send the prompt: POST the user’s input to the agent.

- Listen to events: Read the SSE stream that the agent returns in response to that request.

- Handle incoming events: On each JSON event, look at its type. For example:
  - If it’s TEXT_MESSAGE_CONTENT, append that text to the chat window or editor.
  - If it’s TOOL_CALL_START, display a “running…” indicator.
  - If it’s STATE_DELTA, apply the provided changes to your local data (add rows, update text, etc.).
  - If it’s RUN_ERROR or RUN_FINISHED, conclude the interaction or notify the user.
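The handling step above can be sketched as a single dispatch function driven by a loop. This is an illustration under stated assumptions: the event fields follow our reading of the AG-UI docs, other event types are ignored for brevity, and the `UiHandlers` callbacks are hypothetical stand-ins for whatever your components expose.

```typescript
type AgUiEvent =
  | { type: "TEXT_MESSAGE_CONTENT"; messageId: string; delta: string }
  | { type: "TOOL_CALL_START"; toolCallId: string; toolCallName: string }
  | { type: "STATE_DELTA"; delta: unknown[] }
  | { type: "RUN_FINISHED"; threadId: string; runId: string }
  | { type: "RUN_ERROR"; message: string };

// Hypothetical UI callbacks; a real app would update components here.
interface UiHandlers {
  appendText(messageId: string, text: string): void;
  showRunning(toolName: string): void;
  applyDelta(ops: unknown[]): void;
  finish(error?: string): void;
}

// Route one event to the matching handler.
// Returns false once the run has ended so the caller can stop reading.
function dispatch(event: AgUiEvent, ui: UiHandlers): boolean {
  switch (event.type) {
    case "TEXT_MESSAGE_CONTENT":
      ui.appendText(event.messageId, event.delta);
      return true;
    case "TOOL_CALL_START":
      ui.showRunning(event.toolCallName);
      return true;
    case "STATE_DELTA":
      ui.applyDelta(event.delta);
      return true;
    case "RUN_ERROR":
      ui.finish(event.message);
      return false;
    case "RUN_FINISHED":
      ui.finish();
      return false;
    default:
      // Ignore event types this sketch does not handle.
      return true;
  }
}

// The "small event loop": consume events until the run ends.
function runEventLoop(events: Iterable<AgUiEvent>, ui: UiHandlers): void {
  for (const event of events) {
    if (!dispatch(event, ui)) break;
  }
}
```

With a live stream, the loop would consume events as the SSE parser produces them; the synchronous `Iterable` here just keeps the sketch self-contained.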

That’s it! All the UI logic lives in these event handlers. The user sees responses as they arrive, with the interface updating live. Users can even interrupt or refine requests mid-stream (for example, by pressing a “Cancel” button). AG-UI’s thread IDs let the server tie those interrupts to the right conversation and handle them correctly.
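A hedged sketch of the request side: building the POST body with a thread ID that stays stable across turns and a fresh run ID per request, and wiring a “Cancel” button to an `AbortController`. The field list mirrors our understanding of AG-UI's run input (`threadId`, `runId`, `messages`, `tools`, `context`, `state`, `forwardedProps`) and should be checked against the spec; `buildRunInput`, `prepareRequest`, and the counter-based `newId` are hypothetical helpers. The sketch stops before calling `fetch`, so it runs without a server.

```typescript
interface Message {
  id: string;
  role: "user" | "assistant";
  content: string;
}

// Assumed shape of the body POSTed to start a run.
interface RunAgentInput {
  threadId: string; // stable for the whole conversation
  runId: string; // unique per request
  messages: Message[];
  tools: unknown[];
  context: unknown[];
  state: unknown;
  forwardedProps: unknown;
}

// Hypothetical ID helper; a real app might use crypto.randomUUID().
let idCounter = 0;
function newId(prefix: string): string {
  idCounter += 1;
  return `${prefix}-${idCounter}`;
}

function buildRunInput(threadId: string, history: Message[], prompt: string): RunAgentInput {
  return {
    threadId,
    runId: newId("run"),
    messages: [...history, { id: newId("msg"), role: "user", content: prompt }],
    tools: [],
    context: [],
    state: {},
    forwardedProps: {},
  };
}

// Prepare a cancellable POST. Passing `init` to fetch(url, init) would start
// the run; calling cancel() aborts the request and closes the SSE stream.
function prepareRequest(url: string, input: RunAgentInput) {
  const controller = new AbortController();
  const init = {
    method: "POST",
    headers: { "Content-Type": "application/json", Accept: "text/event-stream" },
    body: JSON.stringify(input),
    signal: controller.signal,
  };
  return { url, init, cancel: () => controller.abort() };
}
```

How the server reacts to a dropped connection depends on the backend; the shared `threadId` is what lets it associate the aborted run with the conversation it belongs to.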

Best of all, AG-UI is built on plain web tech. Server-Sent Events (SSE) is just an HTTP streaming response with no special protocols needed. We chose HTTP+SSE so it works through standard infrastructure (firewalls, proxies, CDNs) out of the box. In fact, the AG-UI spec itself allows other transports (WebSockets or binary streams), but in our experience the simple HTTP/SSE setup covers 99% of use cases. In short, adopting AG-UI often feels like adding a small event loop to your app, rather than architecting an entirely new system.
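On the server side, “plain web tech” means each event is written as a `data:` line followed by a blank line, with a `text/event-stream` content type. The sketch below shows that framing; the headers and comment-line heartbeat are standard SSE practice rather than anything AG-UI-specific, and `encodeSseFrame`, `streamEvents`, and the `StreamingResponse` interface are our own illustrative names (Node's `http.ServerResponse` should fit that shape structurally).

```typescript
// Any AG-UI-style event: a "type" discriminant plus arbitrary fields.
type WireEvent = { type: string; [field: string]: unknown };

// Standard SSE response headers.
const SSE_HEADERS: Record<string, string> = {
  "Content-Type": "text/event-stream",
  "Cache-Control": "no-cache",
};

// A comment frame that clients ignore; sending one periodically can keep
// idle connections open through some proxies and load balancers.
const SSE_HEARTBEAT = ": keep-alive\n\n";

// Encode one event as an SSE frame. JSON.stringify without indentation
// never emits raw newlines, so a single "data:" line is enough.
function encodeSseFrame(event: WireEvent): string {
  return `data: ${JSON.stringify(event)}\n\n`;
}

// Minimal structural view of a streaming HTTP response.
interface StreamingResponse {
  writeHead(status: number, headers: Record<string, string>): unknown;
  write(chunk: string): unknown;
  end(): unknown;
}

// Write a list of events as one SSE response, then close it.
function streamEvents(res: StreamingResponse, events: WireEvent[]): void {
  res.writeHead(200, SSE_HEADERS);
  for (const event of events) res.write(encodeSseFrame(event));
  res.end();
}
```

A real agent would write frames as they are produced instead of from a finished list, and might write `SSE_HEARTBEAT` on a timer during long tool calls.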

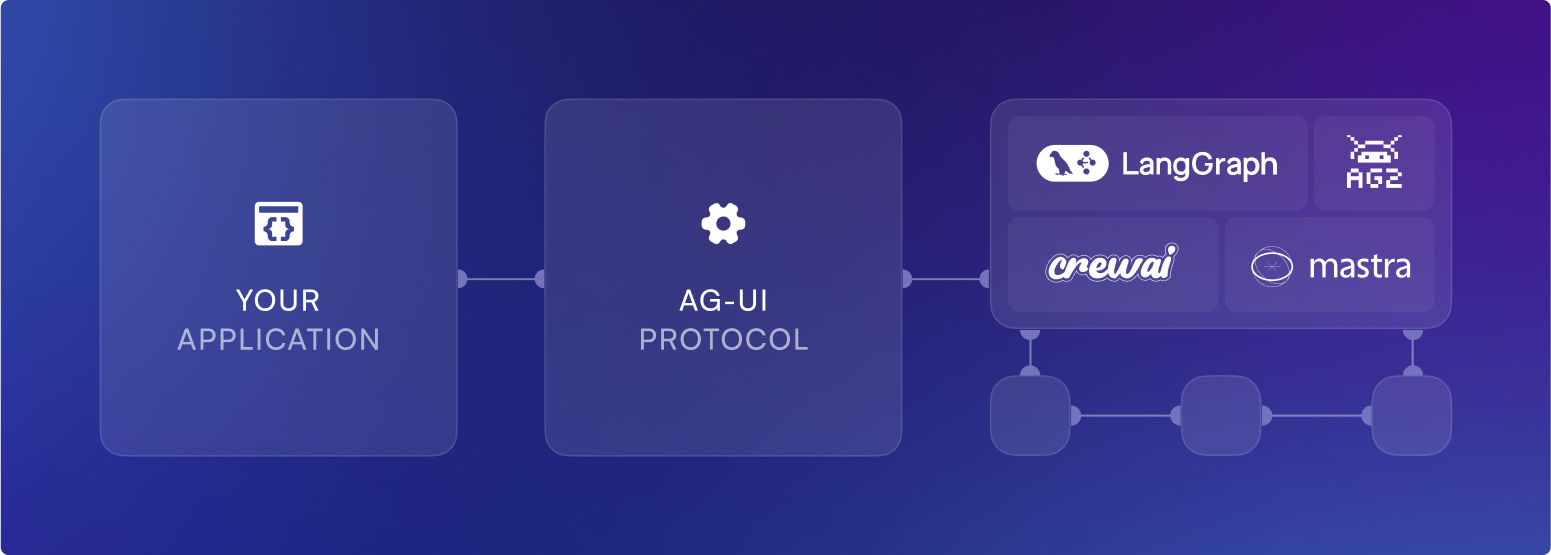

AG-UI in the Agent Protocol Stack

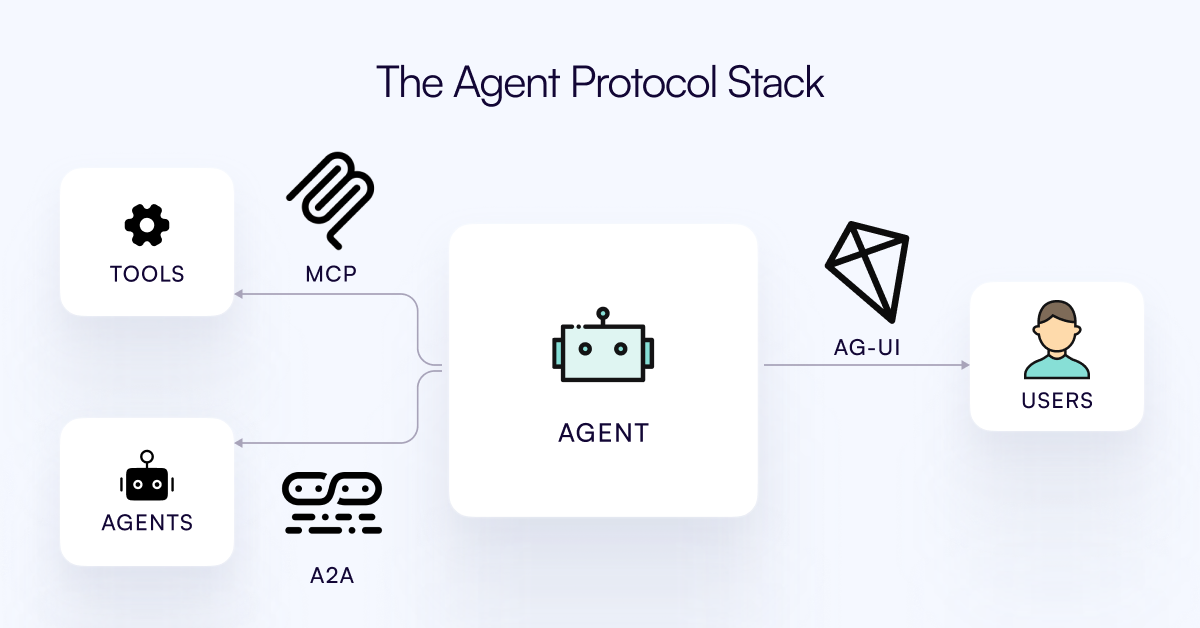

To put AG-UI in context, today’s agent stack architecture has three complementary layers:

- Model Context Protocol (MCP) handles agent-to-tool interactions (how agents query databases, APIs, or custom functions).

- Agent-to-Agent (A2A) protocols manage collaboration among multiple AI agents.

- AG-UI is the third pillar: it sits on top as the human-in-the-loop layer. These layers do not conflict; they stack.

For example, imagine a help desk agent that uses MCP to pull customer data, spins up a specialized sub-agent via A2A for case categorization, and talks to the user through AG-UI.

In that workflow, AG-UI streams chat messages and UI updates to the user, while behind the scenes the same agent calls tools provided by an MCP server and coordinates with its sub-agent over A2A.

In other words, MCP covers the data/tools layer, A2A covers the inter-agent layer, and AG-UI covers the interface layer.

Because each protocol has a distinct role, you can mix and match freely. Swap out the LLM, database, or frontend UI without breaking the others. We even provide middleware layers so existing agent frameworks can plug into AG-UI with minimal changes. The result is a clean, modular stack where AG-UI is clearly the presentation (UI) tier of the agent system.
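To illustrate what such a middleware layer does, here is a hypothetical adapter that wraps a framework producing plain text tokens and re-emits them as AG-UI-style lifecycle and text events. It is not one of CopilotKit's actual integrations: the event field names follow our reading of the AG-UI docs, skipping empty chunks reflects our assumption that content deltas should be non-empty, and a real adapter would more likely consume an `AsyncIterable`; a synchronous generator keeps the sketch short.

```typescript
type AgUiEvent =
  | { type: "RUN_STARTED"; threadId: string; runId: string }
  | { type: "TEXT_MESSAGE_START"; messageId: string; role: "assistant" }
  | { type: "TEXT_MESSAGE_CONTENT"; messageId: string; delta: string }
  | { type: "TEXT_MESSAGE_END"; messageId: string }
  | { type: "RUN_FINISHED"; threadId: string; runId: string }
  | { type: "RUN_ERROR"; message: string };

// Hypothetical adapter: tokens in, AG-UI-style events out.
function* tokensToAgUi(
  tokens: Iterable<string>,
  threadId: string,
  runId: string,
  messageId: string,
): Generator<AgUiEvent> {
  yield { type: "RUN_STARTED", threadId, runId };
  yield { type: "TEXT_MESSAGE_START", messageId, role: "assistant" };
  try {
    for (const token of tokens) {
      // Skip empty chunks; this sketch assumes deltas must be non-empty.
      if (token.length > 0) yield { type: "TEXT_MESSAGE_CONTENT", messageId, delta: token };
    }
  } catch (err) {
    // Surface framework failures as an explicit error event and stop.
    yield { type: "RUN_ERROR", message: err instanceof Error ? err.message : String(err) };
    return;
  }
  yield { type: "TEXT_MESSAGE_END", messageId };
  yield { type: "RUN_FINISHED", threadId, runId };
}
```

The framework never needs to know about SSE or the UI; the adapter only translates its output into the shared event vocabulary.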

Real-World Agents with User Interfaces

The best way to see AG-UI’s impact is to look at live examples. GitHub Copilot is exactly the kind of experience AG-UI is designed for: as you type code, an AI agent streams suggestions into the editor.

Similarly, Cursor is an AI coding platform where the assistant co-writes your code in real time.

In content apps, Notion AI sits in a document editor, sending completions and summaries back into your notes. In each case, an agent on a server is communicating through a custom interface.

AG-UI doesn’t replace those products, but it makes building that class of experience far easier for any team. For instance, with AG-UI you could add a Copilot-like assistant to a data analytics dashboard or a support portal without stitching together low-level sockets.

One real use case we’ve seen is an analytics agent: a LangGraph-powered planner streams its charting plan to a React dashboard, and a follow-up agent fetches the data, with all updates appearing live to the user. None of that required reinventing the wheel.

On the agent side, many systems now support AG-UI. For example, LangGraph workflows can emit AG-UI events at each step, CrewAI agents can stream updates through AG-UI, and even TypeScript-first frameworks like Mastra can use it natively. The goal is always the same: no matter how the backend is built, any front end can listen to its AG-UI events and provide a smooth experience.
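“Emitting events at each step” can be pictured with a generic workflow runner. This is explicitly not LangGraph's API: `WorkflowNode` and `runWorkflow` are hypothetical, the `STEP_STARTED`/`STEP_FINISHED` event names with a `stepName` field follow our reading of the AG-UI docs, and each node's changes are streamed as a JSON Patch `STATE_DELTA`.

```typescript
type PatchOp = { op: "add"; path: string; value: unknown };

type StepEvent =
  | { type: "STEP_STARTED"; stepName: string }
  | { type: "STEP_FINISHED"; stepName: string }
  | { type: "STATE_DELTA"; delta: PatchOp[] };

// Hypothetical workflow node: a named function returning a partial update.
interface WorkflowNode<S> {
  name: string;
  run(state: S): Partial<S>;
}

// Escape a key for use as a JSON Pointer segment (RFC 6901).
const escapeKey = (key: string): string => key.replace(/~/g, "~0").replace(/\//g, "~1");

// Run nodes in order, bracketing each with step events and streaming the
// fields it changed. The generator's return value is the final state.
function* runWorkflow<S extends Record<string, unknown>>(
  nodes: WorkflowNode<S>[],
  initial: S,
): Generator<StepEvent, S> {
  let state: S = { ...initial };
  for (const node of nodes) {
    yield { type: "STEP_STARTED", stepName: node.name };
    const update = node.run(state);
    state = { ...state, ...update };
    // JSON Patch "add" on an object member creates or overwrites it.
    const delta: PatchOp[] = Object.entries(update).map(([key, value]) => ({
      op: "add" as const,
      path: `/${escapeKey(key)}`,
      value,
    }));
    if (delta.length > 0) yield { type: "STATE_DELTA", delta };
    yield { type: "STEP_FINISHED", stepName: node.name };
  }
  return state;
}
```

In the analytics example, a “plan” node and a “fetch” node would each show up in the UI as a named step, with the dashboard state updating between them.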

The Future: Co-Working AI and Humans

AG-UI is not just a protocol specification; it’s a step toward a new paradigm of co-working assistants. By making agents’ actions visible and interactive, it opens up applications we could previously only imagine. Analysts could query a database conversationally and see charts update live.

Designers could sketch and have an AI agent iteratively refine the mockup on the spot. Educators could get AI tutors that walk through problems step by step with students. In all these cases, the agent isn’t a black box but a partner in the interface.

Our co-founder Atai Barkai captured it well: “AG-UI isn’t just a technical specification, it’s the foundation for the next generation of AI-enhanced applications where humans and agents seamlessly collaborate”.

Practically, this means developers spend time on creative UX instead of duct-taping integrations. It also means no more vendor lock-in: you can switch languages or models freely, as long as they speak AG-UI.

AG-UI is fully open-source and self-hosted-friendly, so organizations retain control of their data. It works with enterprise needs (CORS, auth tokens, audit logging) out of the box. Teams can get started in minutes using our SDKs and demo apps. In fact, the documentation includes an interactive playground so you can try AG-UI right now.

In short, AG-UI delivers the long-missing “last mile” for AI agents. As generative AI grows more sophisticated, clean and extensible interfaces to users become critical.

By releasing AG-UI, we’re empowering teams to build the next wave of collaborative AI assistants. We encourage developers to explore AG-UI today (see the docs and examples at docs.ag-ui.com) and join us in building the future of AI-powered software. The vision is clear: humans and AI, working side by side, each amplifying the other’s strengths.

Want to learn more?

- Book a call and connect with our team

- Please tell us who you are, what you're building, and your company size in the meeting description, and we'll help you get started today!

How Can I Support AG-UI?

If you love open source, please star the AG-UI GitHub repository and join our vibrant Discord community of developers.

Happy Building!