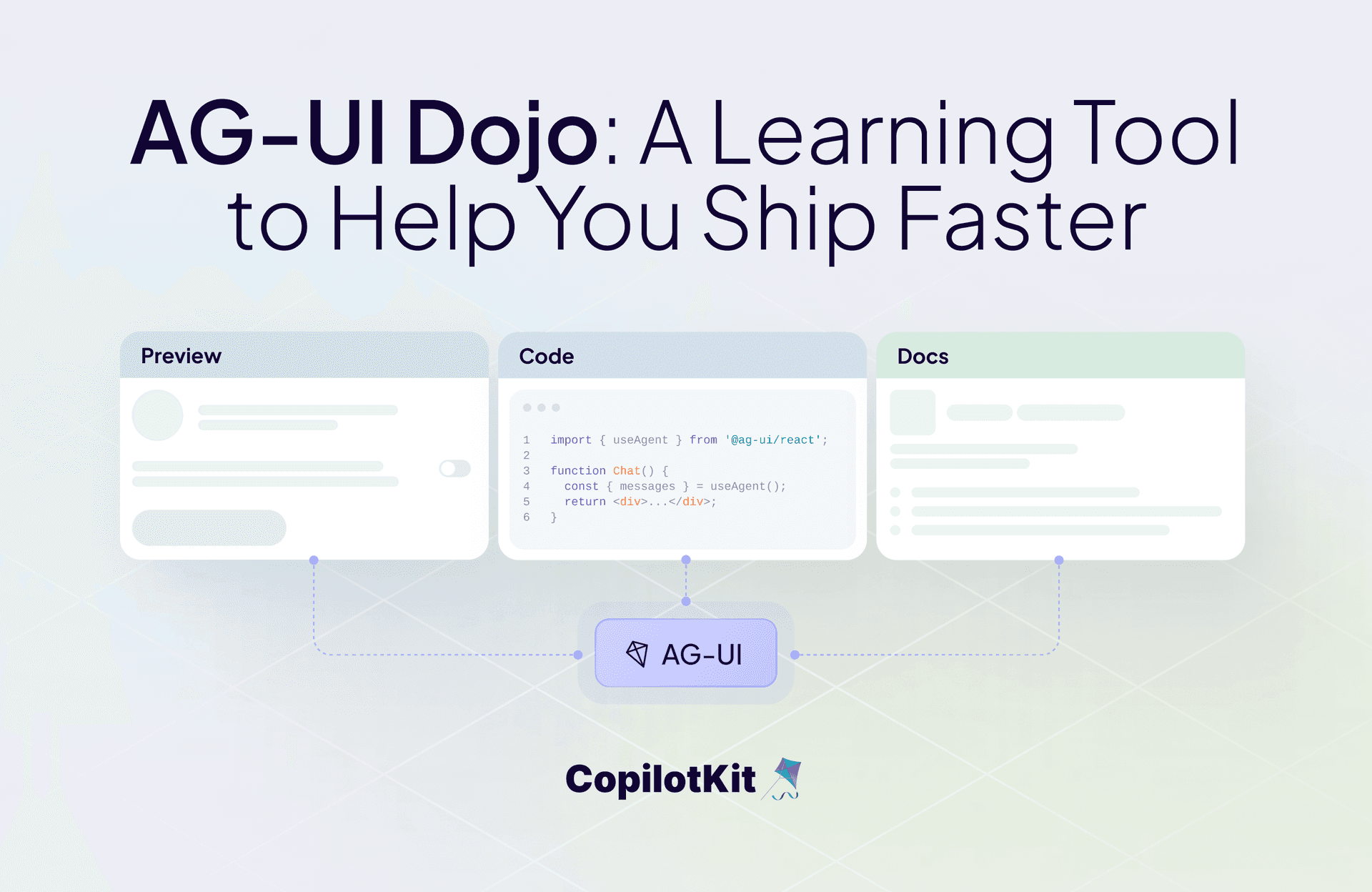

Introducing the AG-UI Dojo: A Learning Tool to Help You Ship Faster

Building AI agent frontends? You've probably hit the usual roadblocks: streaming updates that break, state bugs that make no sense, and tool calls that require way too much setup.

The AG-UI Dojo tackles those problems. It's a collection of working examples you can run, inspect, and reuse.

What Makes Up the Dojo?

We built this with three parts:

- Preview: Run the demo. See how it actually behaves.

- Code: Check out the implementation. No hunting through repos.

- Docs: Official specs and guides, right next to the code.

You can visualize it, understand it, and then use it. Our goal was to make it simple for developers who just want to see something working fast.

What the Demos Cover

Six core features, each in its own "hello world" style example:

- Agentic Chat: Streaming chat with tool hooks built in

- Human-in-the-Loop: Agent planning that waits for user input

- Agentic Generative UI: Long tasks with UI that updates as you go

- Tool-Based Generative UI: A haiku generator that renders images nicely in the chat

- Shared State: Agent and UI stay perfectly synced

- Predictive State Updates: Real-time collaboration with the agent

Each demo shows one building block. Put them together, and you get real agent apps.
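To make the Shared State idea concrete, here is a minimal sketch of one way an agent and a UI could keep a state object in sync: the agent sends a full snapshot once, then small deltas. The event names (`STATE_SNAPSHOT`, `STATE_DELTA`), the dictionary shapes, the `set`/`remove` operations, and the `apply_event` helper are all assumptions made up for this illustration, not the Dojo's actual code. A real implementation would more likely use a standard patch format such as JSON Patch (RFC 6902).

```python
from copy import deepcopy
from typing import Any


def apply_event(state: dict[str, Any], event: dict[str, Any]) -> dict[str, Any]:
    """Return the new UI state after applying one (hypothetical) state event."""
    if event["type"] == "STATE_SNAPSHOT":
        # A snapshot replaces the whole state.
        return deepcopy(event["snapshot"])
    if event["type"] == "STATE_DELTA":
        # A delta changes individual top-level keys.
        new_state = deepcopy(state)
        for op in event["delta"]:
            if op["op"] == "set":
                new_state[op["key"]] = op["value"]
            elif op["op"] == "remove":
                new_state.pop(op["key"], None)
            else:
                raise ValueError(f"unknown op: {op['op']}")
        return new_state
    # Any other event type leaves shared state untouched in this sketch.
    return state


# Illustrative event stream, as the agent might emit it.
events = [
    {"type": "STATE_SNAPSHOT", "snapshot": {"recipe": "pasta", "servings": 2}},
    {"type": "STATE_DELTA", "delta": [{"op": "set", "key": "servings", "value": 4}]},
    {"type": "STATE_DELTA", "delta": [
        {"op": "remove", "key": "recipe"},
        {"op": "set", "key": "title", "value": "Dinner"},
    ]},
]

ui_state: dict[str, Any] = {}
for event in events:
    ui_state = apply_event(ui_state, event)

print(ui_state)
```

Returning a fresh copy on every event, rather than mutating in place, keeps each intermediate state easy to inspect when you are chasing a sync bug.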

A Learning Tool

The Dojo works as both a learning tool and a debugging resource. Our documentation calls it "learning-first" - you walk through each capability and see the code that makes it work.

It's also your implementation checklist for building new integrations. Run through the demos, and you'll know your AG-UI setup handles everything properly.

Common problems include event ordering, payload issues, and state sync bugs. You can troubleshoot all of them here before you ship.
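As a rough idea of what an event-ordering and payload check could look like, here is a small sketch that scans a recorded event stream and reports anything out of sequence. The event names, the `message_id` field, and the `check_event_order` helper are assumptions chosen for this example. They are not taken from the Dojo source, so treat the rules below as a starting point rather than the protocol's definition.

```python
from typing import Any

MESSAGE_EVENTS = {"TEXT_MESSAGE_START", "TEXT_MESSAGE_CONTENT", "TEXT_MESSAGE_END"}


def check_event_order(events: list[dict[str, Any]]) -> list[str]:
    """Return human-readable ordering/payload problems (empty list if none)."""
    problems: list[str] = []
    run_open = False
    open_messages: set[str] = set()

    for i, event in enumerate(events):
        kind = event.get("type")
        if kind == "RUN_STARTED":
            if run_open:
                problems.append(f"event {i}: RUN_STARTED while a run is already open")
            run_open = True
        elif kind == "RUN_FINISHED":
            if not run_open:
                problems.append(f"event {i}: RUN_FINISHED without RUN_STARTED")
            if open_messages:
                problems.append(
                    f"event {i}: run finished with unclosed messages {sorted(open_messages)}"
                )
            run_open = False
            open_messages.clear()
        elif kind in MESSAGE_EVENTS:
            message_id = event.get("message_id")
            if message_id is None:
                # Payload check: every message event needs an id.
                problems.append(f"event {i}: {kind} has no message_id")
            elif kind == "TEXT_MESSAGE_START":
                open_messages.add(message_id)
            elif message_id not in open_messages:
                problems.append(
                    f"event {i}: {kind} for message {message_id!r} that was never started"
                )
            elif kind == "TEXT_MESSAGE_END":
                open_messages.discard(message_id)
    return problems


good_stream = [
    {"type": "RUN_STARTED"},
    {"type": "TEXT_MESSAGE_START", "message_id": "m1"},
    {"type": "TEXT_MESSAGE_CONTENT", "message_id": "m1", "delta": "Hello"},
    {"type": "TEXT_MESSAGE_END", "message_id": "m1"},
    {"type": "RUN_FINISHED"},
]

bad_stream = [
    {"type": "RUN_STARTED"},
    {"type": "TEXT_MESSAGE_CONTENT", "message_id": "m1", "delta": "Hi"},  # never started
    {"type": "TEXT_MESSAGE_START", "message_id": "m2"},                   # never ended
    {"type": "RUN_FINISHED"},
]

good_problems = check_event_order(good_stream)
bad_problems = check_event_order(bad_stream)
for problem in bad_problems:
    print(problem)
```

Running a check like this against events captured from your own integration, next to the matching Dojo demo, makes it easier to tell whether a bug lives in the backend stream or in the UI.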

Faster Development

The Dojo speeds up development in three ways:

- Faster learning - see the code working, not just sitting in documentation

- Less complexity - bite-sized demos instead of overwhelming examples

- Better debugging - validate your setup before production

Getting Started

Try the Dojo. Click through the previews, check the code tabs, and read the docs.

Use it when you're building your own agent UIs. These demos work as reference implementations and testing grounds for your integration. Check out the Dojo source code here.

We’d love to know how this tool has helped your team. Reach out to book a meeting; we take your feedback seriously.

Want to learn more?

- Book a call and connect with our team

- Please tell us who you are, what you're building, and your company size in the meeting description

Happy Building!