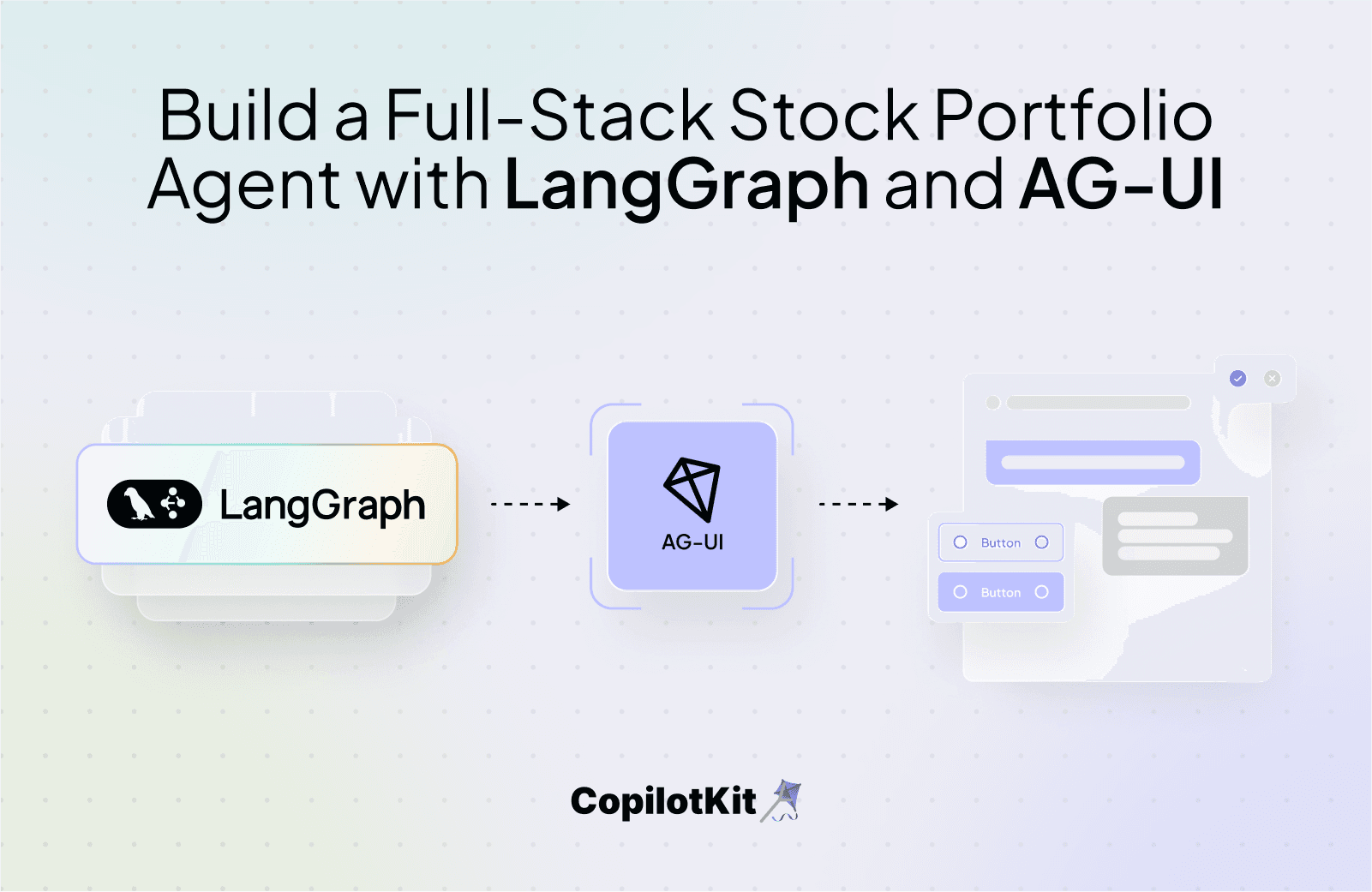

In this guide, you will learn how to integrate a LangGraph agent with the AG-UI protocol. We will also cover how to connect the AG-UI + LangGraph agent to CopilotKit so you can chat with the agent and stream its responses in the frontend.

Before we jump in, here is what we will cover:

- What is AG-UI protocol?

- Integrating LangGraph agents with AG-UI protocol

- Integrating a frontend to the AG-UI + LangGraph agent using CopilotKit

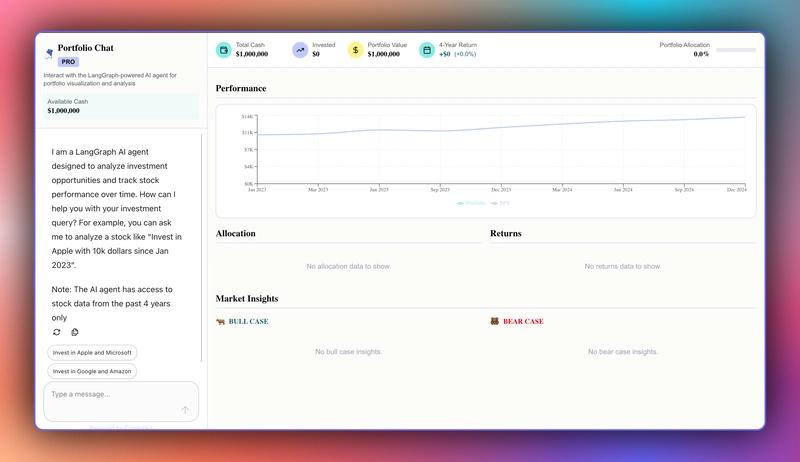

Here’s a preview of what we will be building:

What is AG-UI protocol?

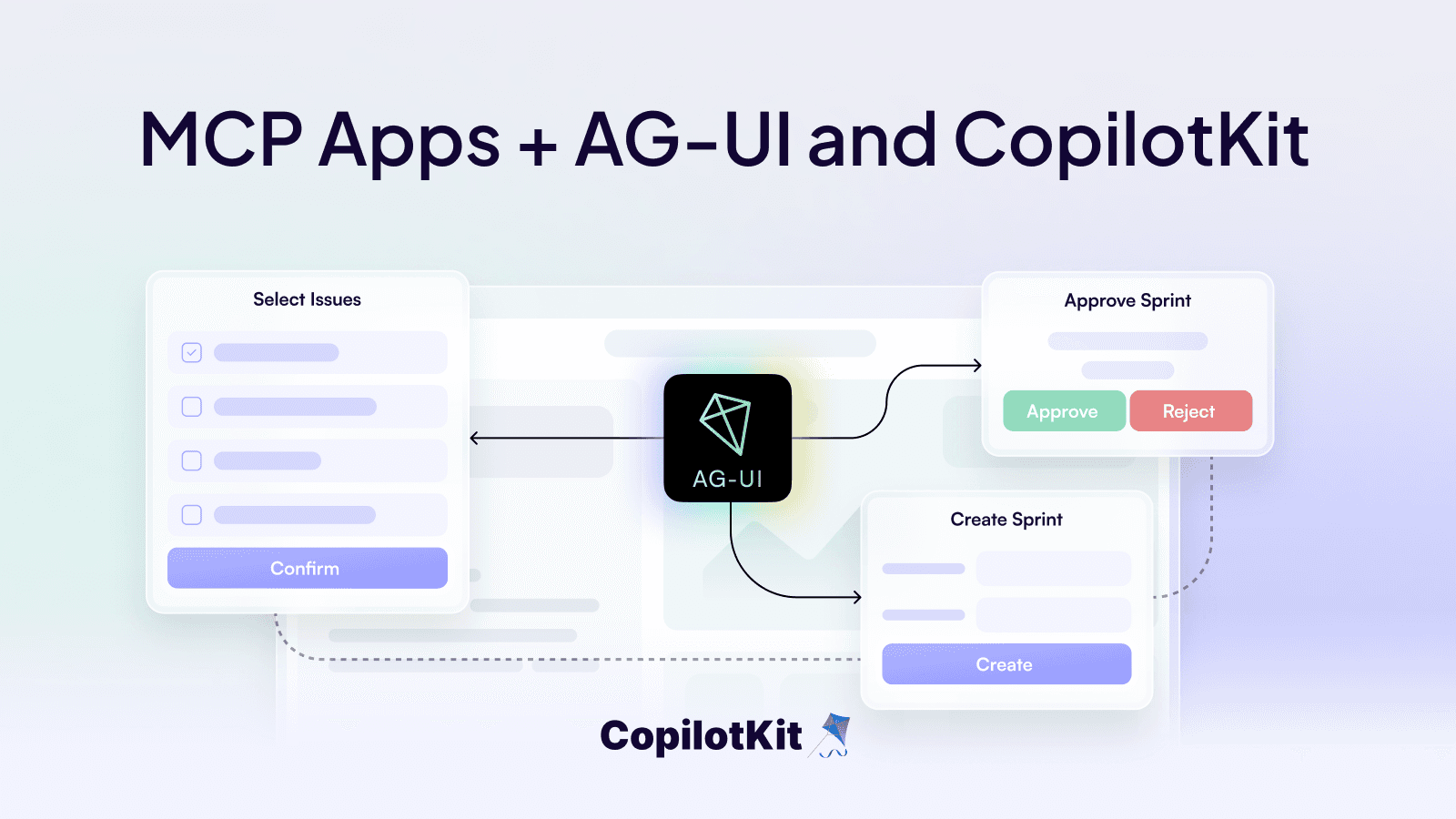

The Agent User Interaction Protocol (AG-UI), developed by CopilotKit, is an open-source, lightweight, event-based protocol that facilitates rich, real-time interactions between the frontend and AI agents.

The AG-UI protocol enables event-driven communication, state management, tool usage, and streaming AI agent responses.

To send information between the frontend and your AI agent, AG-UI uses events such as:

- Lifecycle events: These events mark the start or end of an agent’s task execution. Lifecycle events include `RUN_STARTED` and `RUN_FINISHED` events.

- Text message events: These events handle streaming agent responses to the frontend. Text message events include `TEXT_MESSAGE_START`, `TEXT_MESSAGE_CONTENT`, and `TEXT_MESSAGE_END` events.

- Tool call events: These events manage the agent’s tool executions. Tool call events include `TOOL_CALL_START`, `TOOL_CALL_ARGS`, and `TOOL_CALL_END` events.

- State management events: These events keep the frontend and the AI agent state in sync. State management events include `STATE_SNAPSHOT` and `STATE_DELTA` events.
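On the wire, these events travel to the frontend as server-sent events (SSE). The stdlib-only sketch below assumes each event is a JSON object with a `type` field and camelCase keys, framed as a `data: <json>` line followed by a blank line. It mirrors, but is not, the `EventEncoder` from the `ag_ui` package used later in this guide; `encode_sse` and `decode_sse` are hypothetical helper names.

```python
import json


def encode_sse(event: dict) -> str:
    """Frame one event dict as a server-sent event (assumed AG-UI wire format)."""
    # json.dumps escapes newlines, so a payload never contains a raw blank line
    return f"data: {json.dumps(event)}\n\n"


def decode_sse(stream: str) -> list[dict]:
    """Parse a string of SSE frames back into event dicts, ignoring non-data lines."""
    events = []
    for frame in stream.split("\n\n"):
        for line in frame.splitlines():
            if line.startswith("data: "):
                events.append(json.loads(line[len("data: "):]))
    return events


# A minimal run: lifecycle events bracketing one streamed text message
run = [
    {"type": "RUN_STARTED", "threadId": "t1", "runId": "r1"},
    {"type": "TEXT_MESSAGE_START", "messageId": "m1", "role": "assistant"},
    {"type": "TEXT_MESSAGE_CONTENT", "messageId": "m1", "delta": "Hello"},
    {"type": "TEXT_MESSAGE_CONTENT", "messageId": "m1", "delta": ", world"},
    {"type": "TEXT_MESSAGE_END", "messageId": "m1"},
    {"type": "RUN_FINISHED", "threadId": "t1", "runId": "r1"},
]

stream = "".join(encode_sse(e) for e in run)
decoded = decode_sse(stream)
text = "".join(e["delta"] for e in decoded if e["type"] == "TEXT_MESSAGE_CONTENT")
print(text)  # Hello, world
```

The client rebuilds the assistant's reply by concatenating the `delta` of each `TEXT_MESSAGE_CONTENT` event that shares a `messageId`.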

You can learn more about the AG-UI protocol and its architecture in the AG-UI docs.

Now that we have learned what the AG-UI protocol is, let us see how to integrate it with the LangGraph agent framework.

Let’s get started!


Prerequisites

To fully understand this tutorial, you need to have a basic understanding of React or Next.js.

We'll also make use of the following:

- Python - a popular programming language for building AI agents with LangGraph; make sure it is installed on your computer.

- LangGraph - a framework for creating and deploying AI agents. It also helps to define the control flows and actions to be performed by the agent.

- Google Gemini API Key - an API key to enable us to perform various tasks using the Gemini 2.5 Pro model.

- CopilotKit - an open-source copilot framework for building custom AI chatbots, in-app AI agents, and text areas.

Integrating LangGraph agents with AG-UI protocol

To get started, clone the Open AG UI Demo repository, which consists of a Python-based backend (in the `agent` directory) and a Next.js frontend (in the `frontend` directory).

Next, navigate to the backend directory:

```bash
cd agent
```

Then install the dependencies using Poetry:

```bash
poetry install
```

After that, create a `.env` file in the `agent` directory with your Google Gemini API key:

```
GOOGLE_API_KEY=your-gemini-key-here
```

Then run the agent using the command below:

```bash
poetry run python main.py
```

To test the AG-UI + LangGraph integration, run the curl command below in your terminal or on https://reqbin.com/curl:

```bash
curl -X POST "http://localhost:8000/langgraph-agent" \
  -H "Content-Type: application/json" \
  -d '{
    "thread_id": "test_thread_123",
    "run_id": "test_run_456",
    "messages": [
      {
        "id": "msg_1",
        "role": "user",
        "content": "Analyze AAPL stock with a $10000 investment from 2023-01-01"
      }
    ],
    "tools": [],
    "context": [],
    "forwarded_props": {},
    "state": {
      "available_cash": 1000000,
      "investment_summary": {},
      "investment_portfolio": []
    }
  }'
```

Let us now see how to integrate the AG-UI protocol with the LangGraph AI agent framework.

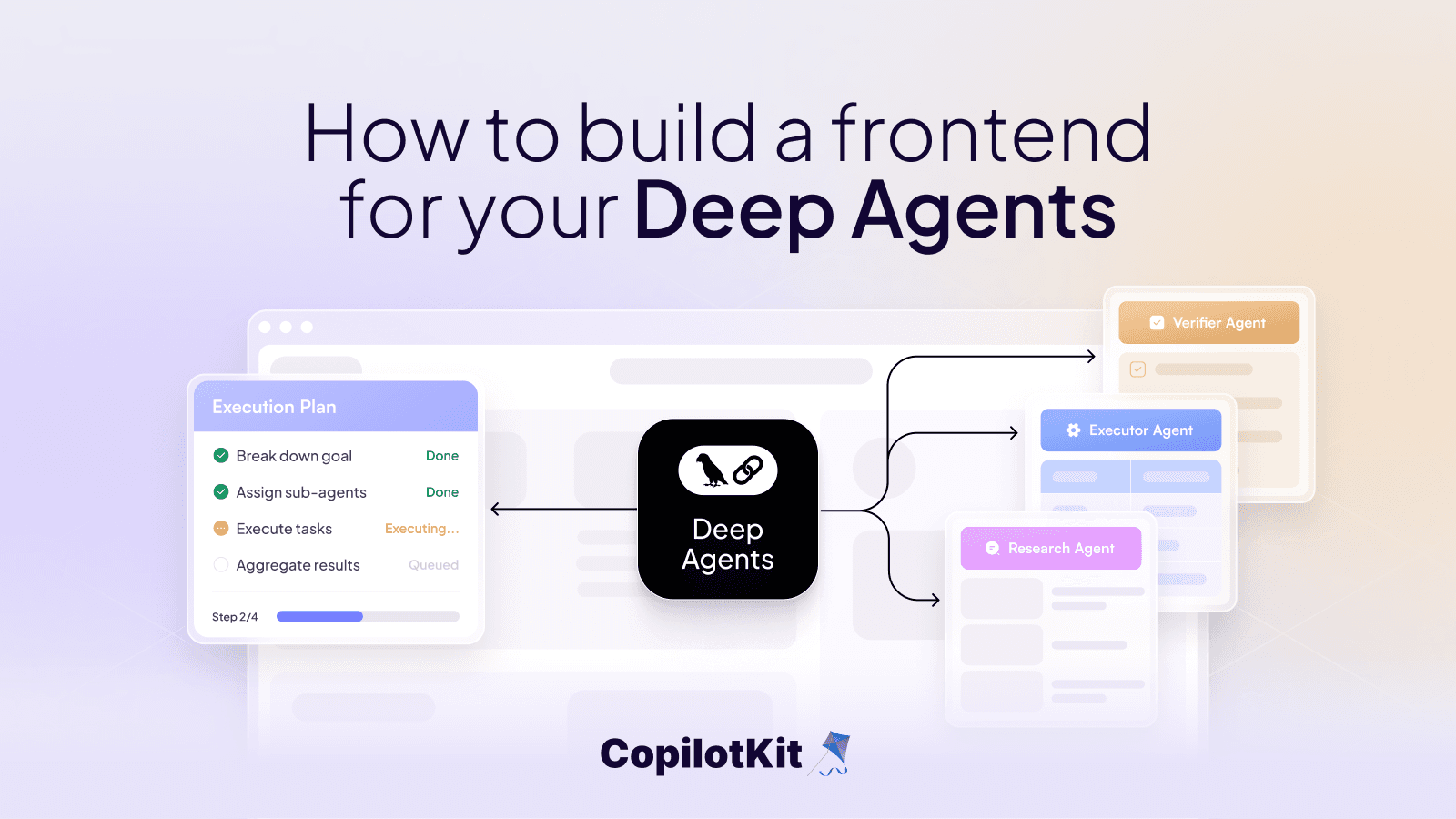

Step 1: Define your LangGraph agent workflow
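The stock analysis workflow has five nodes: `chat` runs first, then a router sends the run either to `simulation` (followed by `cash_allocation`, `insights`, and `end`) or straight to `end`. To see that routing in isolation, here is a stdlib-only sketch that models the edges as plain data and walks them. `router_function1` itself is not shown in this guide, so `route_after_chat` is a hypothetical stand-in that assumes the router picks `simulation` when the chat node produced a tool call and `end` otherwise. In LangGraph, `add_conditional_edges` works the same way in spirit: it calls the router with the current state and uses the returned name as the next node.

```python
from typing import Callable

# Fixed edges from the workflow: node -> next node
FIXED_EDGES: dict[str, str] = {
    "simulation": "cash_allocation",
    "cash_allocation": "insights",
    "insights": "end",
}


def route_after_chat(state: dict) -> str:
    """Hypothetical stand-in for router_function1: chat -> (simulation | end)."""
    last = state["messages"][-1] if state["messages"] else {}
    return "simulation" if last.get("tool_calls") else "end"


# Conditional edges: node -> router that returns the next node's name
CONDITIONAL_EDGES: dict[str, Callable[[dict], str]] = {"chat": route_after_chat}


def walk(state: dict, entry: str = "chat", finish: str = "end") -> list[str]:
    """Return the node names a run would visit; node bodies are not executed."""
    path = [entry]
    node = entry
    while node != finish:
        router = CONDITIONAL_EDGES.get(node)
        node = router(state) if router else FIXED_EDGES[node]
        path.append(node)
    return path


with_tool_call = {"messages": [{"role": "assistant", "tool_calls": [{"id": "call_1"}]}]}
without_tool_call = {"messages": [{"role": "assistant", "content": "Which stock?"}]}

print(walk(with_tool_call))     # ['chat', 'simulation', 'cash_allocation', 'insights', 'end']
print(walk(without_tool_call))  # ['chat', 'end']
```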

To integrate the AG-UI protocol with the LangGraph framework, start by defining your LangGraph agent nodes and workflow, as shown in the agent/stock_analysis.py file.

```python
from langgraph.graph import StateGraph, START, END

# ...

async def agent_graph():
    """
    Creates and configures the LangGraph workflow for stock analysis.

    This function:
    1. Creates a StateGraph with the AgentState structure
    2. Adds all workflow nodes (chat, simulation, cash_allocation, insights, end)
    3. Defines the workflow edges and conditional routing
    4. Sets entry and exit points
    5. Compiles the graph for execution

    Returns:
        CompiledStateGraph: The compiled workflow ready for execution
    """
    # Step 1: Create the workflow graph with AgentState structure
    workflow = StateGraph(AgentState)

    # Step 2: Add all nodes to the workflow
    workflow.add_node("chat", chat_node)  # Initial chat and parameter extraction
    workflow.add_node("simulation", simulation_node)  # Stock data gathering
    workflow.add_node("cash_allocation", cash_allocation_node)  # Investment simulation and analysis
    workflow.add_node("insights", insights_node)  # AI-generated insights
    workflow.add_node("end", end_node)  # Terminal node

    # Step 3: Set workflow entry and exit points
    workflow.set_entry_point("chat")  # Always start with chat node
    workflow.set_finish_point("end")  # Always end with end node

    # Step 4: Define workflow edges and routing logic
    workflow.add_edge(START, "chat")  # Entry: START -> chat (same edge set_entry_point adds)
    workflow.add_conditional_edges("chat", router_function1)  # Conditional: chat -> (simulation|end)
    workflow.add_edge("simulation", "cash_allocation")  # Direct: simulation -> cash_allocation
    workflow.add_edge("cash_allocation", "insights")  # Direct: cash_allocation -> insights
    workflow.add_edge("insights", "end")  # Direct: insights -> end
    workflow.add_edge("end", END)  # Exit: end -> END (same edge set_finish_point adds)

    # Step 5: Compile the workflow graph
    # Note: Memory persistence is commented out for simplicity
    # from langgraph.checkpoint.memory import MemorySaver
    # graph = workflow.compile(MemorySaver())
    graph = workflow.compile()

    return graph
```

Step 2: Create an endpoint with FastAPI
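The endpoint you create in this step receives each run as a `RunAgentInput`, the same JSON body the curl test sent earlier. In `ag_ui.core` that type is a Pydantic model; the dataclass below is only a hypothetical, stdlib-only stand-in (`RunInputSketch` is not a real AG-UI name) that shows which fields arrive and that the frontend's shared state travels in the `state` field. The sample `state` carries `available_cash`, `investment_summary`, and `investment_portfolio` because the endpoint code later in this guide reads those keys.

```python
import json
from dataclasses import dataclass, field
from typing import Any


@dataclass
class RunInputSketch:
    """Hypothetical stand-in for ag_ui.core.RunAgentInput (field names follow the curl body)."""
    thread_id: str
    run_id: str
    messages: list[dict[str, Any]]
    tools: list[dict[str, Any]] = field(default_factory=list)
    context: list[dict[str, Any]] = field(default_factory=list)
    forwarded_props: dict[str, Any] = field(default_factory=dict)
    state: dict[str, Any] = field(default_factory=dict)

    @classmethod
    def from_json(cls, body: str) -> "RunInputSketch":
        data = json.loads(body)
        # Keep only known fields so unexpected keys do not raise TypeError
        known = {k: v for k, v in data.items() if k in cls.__dataclass_fields__}
        return cls(**known)


body = json.dumps({
    "thread_id": "test_thread_123",
    "run_id": "test_run_456",
    "messages": [{"id": "msg_1", "role": "user",
                  "content": "Analyze AAPL stock with a $10000 investment from 2023-01-01"}],
    "tools": [], "context": [], "forwarded_props": {},
    # Shared state from the frontend (1000000 matches the frontend's initial cash)
    "state": {"available_cash": 1000000, "investment_summary": {}, "investment_portfolio": []},
})

run_input = RunInputSketch.from_json(body)
print(run_input.thread_id, run_input.state["available_cash"])  # test_thread_123 1000000
```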

Once you have defined your LangGraph agent nodes and workflow, create a FastAPI endpoint and import the LangGraph workflow as shown in the agent/main.py file.

```python
# Import necessary libraries and modules
from fastapi import FastAPI
from fastapi.responses import StreamingResponse  # For streaming server-sent events
import uuid  # For generating unique message IDs
from typing import Any  # For type hints
import os  # For environment variables
import uvicorn  # ASGI server for running the FastAPI app
import asyncio  # For asynchronous programming

# Import AG UI core components for event-driven communication
from ag_ui.core import (
    RunAgentInput,  # Input data structure for agent runs
    StateSnapshotEvent,  # Event for capturing state snapshots
    EventType,  # Enumeration of all event types
    RunStartedEvent,  # Event to signal run start
    RunFinishedEvent,  # Event to signal run completion
    TextMessageStartEvent,  # Event to start text message streaming
    TextMessageEndEvent,  # Event to end text message streaming
    TextMessageContentEvent,  # Event for text message content chunks
    ToolCallStartEvent,  # Event to start tool call
    ToolCallEndEvent,  # Event to end tool call
    ToolCallArgsEvent,  # Event for tool call arguments
    StateDeltaEvent,  # Event for state changes
)
from ag_ui.encoder import EventEncoder  # Encoder for converting events to SSE format
from stock_analysis import agent_graph  # Import the LangGraph agent
from copilotkit import CopilotKitState  # Base state class from CopilotKit

# Initialize FastAPI application instance
app = FastAPI()


@app.post("/langgraph-agent")
async def langgraph_agent(input_data: RunAgentInput):
    """
    Main endpoint that processes agent requests and streams back events.

    Args:
        input_data (RunAgentInput): Contains thread_id, run_id, messages, tools, and state

    Returns:
        StreamingResponse: Server-sent events stream with agent execution updates
    """
    # ...


def main():
    """
    Main function to start the FastAPI server.

    This function:
    1. Gets the port from environment variable PORT (defaults to 8000)
    2. Starts the uvicorn ASGI server
    3. Enables hot reload for development
    """
    # Get port from environment variable, default to 8000
    port = int(os.getenv("PORT", "8000"))

    # Start the uvicorn server with the FastAPI app
    uvicorn.run(
        "main:app",  # Reference to the FastAPI app instance
        host="0.0.0.0",  # Listen on all available interfaces
        port=port,  # Use the configured port
        reload=True,  # Enable auto-reload for development
    )


# Entry point: run the server when script is executed directly
if __name__ == "__main__":
    main()
```

Step 3: Define an event generator
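An event generator is an async generator that yields already-encoded SSE strings, and `StreamingResponse` forwards each one to the client as it is produced. One consequence is worth knowing: the generator body runs only while the response is being streamed, after the endpoint has returned, so a `try`/`except` wrapped around the generator's *definition* (as in the endpoint below) does not catch errors raised while events are produced. The stdlib sketch below puts the handler inside the generator instead and reports the failure with a `RUN_ERROR` event, which is part of the AG-UI event set. The SSE framing and the `fake_agent` coroutine are assumptions for illustration, not the `ag_ui` API.

```python
import asyncio
import json
from typing import AsyncIterator


def encode(event: dict) -> str:
    """Assumed SSE framing: one JSON object on a `data:` line, then a blank line."""
    return f"data: {json.dumps(event)}\n\n"


async def fake_agent(fail: bool) -> None:
    """Hypothetical stand-in for the LangGraph run."""
    await asyncio.sleep(0)
    if fail:
        raise RuntimeError("model unavailable")


async def event_generator(thread_id: str, run_id: str, fail: bool = False) -> AsyncIterator[str]:
    """Yield encoded events; this body only runs while the response is streamed."""
    yield encode({"type": "RUN_STARTED", "threadId": thread_id, "runId": run_id})
    try:
        await fake_agent(fail)
    except Exception as exc:
        # Failures during streaming are caught here and reported to the client
        yield encode({"type": "RUN_ERROR", "message": str(exc)})
        return
    yield encode({"type": "RUN_FINISHED", "threadId": thread_id, "runId": run_id})


async def collect_types(gen: AsyncIterator[str]) -> list[str]:
    """Drain the generator (as a streaming response would) and return event types."""
    return [json.loads(frame[len("data: "):])["type"] async for frame in gen]


print(asyncio.run(collect_types(event_generator("t1", "r1"))))
# ['RUN_STARTED', 'RUN_FINISHED']
print(asyncio.run(collect_types(event_generator("t1", "r1", fail=True))))
# ['RUN_STARTED', 'RUN_ERROR']
```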

Inside the endpoint, define an event generator that initializes the event encoder and produces a stream of AG-UI protocol events, then return that generator to the client (the frontend) wrapped in a `StreamingResponse`, as shown in the agent/main.py file.

```python
# FastAPI endpoint that handles agent execution requests
@app.post("/langgraph-agent")
async def langgraph_agent(input_data: RunAgentInput):
    """
    Main endpoint that processes agent requests and streams back events.

    Args:
        input_data (RunAgentInput): Contains thread_id, run_id, messages, tools, and state

    Returns:
        StreamingResponse: Server-sent events stream with agent execution updates
    """
    try:
        # Define async generator function to produce server-sent events
        async def event_generator():
            # Step 1: Initialize event encoding and communication infrastructure
            encoder = EventEncoder()  # Converts events to SSE format
            event_queue = asyncio.Queue()  # Queue for events from agent execution

            # Helper function to add events to the queue
            def emit_event(event):
                event_queue.put_nowait(event)

            # Generate a unique identifier for the assistant message streamed in this run
            message_id = str(uuid.uuid4())

            # ...

    except Exception as e:
        # Log any errors that occur during execution
        print(e)

    # Return the event generator as a streaming response
    return StreamingResponse(event_generator(), media_type="text/event-stream")
```

Step 4: Define AG-UI protocol lifecycle events
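Lifecycle events frame every run: `RUN_STARTED` comes first and `RUN_FINISHED` (or `RUN_ERROR` on failure) comes last, with each text message and tool call opened and closed in between. The checker below is a hypothetical test helper, not part of AG-UI, written in plain Python against decoded event dicts; it assumes camelCase keys such as `messageId` and `toolCallId` on the wire. Running your endpoint's decoded output through something like it is a cheap way to catch a missing `RUN_FINISHED` or an unclosed message.

```python
def validate_run(events: list[dict]) -> list[str]:
    """Return ordering problems found in one run's events (empty list if none)."""
    problems = []
    types = [e["type"] for e in events]
    if not types or types[0] != "RUN_STARTED":
        problems.append("run must begin with RUN_STARTED")
    if not types or types[-1] not in ("RUN_FINISHED", "RUN_ERROR"):
        problems.append("run must end with RUN_FINISHED or RUN_ERROR")

    open_messages: set[str] = set()
    open_tool_calls: set[str] = set()
    for e in events:
        t = e["type"]
        if t == "TEXT_MESSAGE_START":
            open_messages.add(e["messageId"])
        elif t in ("TEXT_MESSAGE_CONTENT", "TEXT_MESSAGE_END"):
            if e["messageId"] not in open_messages:
                problems.append(f"{t} for unopened message {e['messageId']}")
            elif t == "TEXT_MESSAGE_END":
                open_messages.discard(e["messageId"])
        elif t == "TOOL_CALL_START":
            open_tool_calls.add(e["toolCallId"])
        elif t in ("TOOL_CALL_ARGS", "TOOL_CALL_END"):
            if e["toolCallId"] not in open_tool_calls:
                problems.append(f"{t} for unopened tool call {e['toolCallId']}")
            elif t == "TOOL_CALL_END":
                open_tool_calls.discard(e["toolCallId"])

    # Anything still open at the end of the run was never closed
    problems += [f"message {m} never ended" for m in sorted(open_messages)]
    problems += [f"tool call {c} never ended" for c in sorted(open_tool_calls)]
    return problems


good = [
    {"type": "RUN_STARTED"},
    {"type": "TEXT_MESSAGE_START", "messageId": "m1"},
    {"type": "TEXT_MESSAGE_CONTENT", "messageId": "m1", "delta": "Hi"},
    {"type": "TEXT_MESSAGE_END", "messageId": "m1"},
    {"type": "RUN_FINISHED"},
]
bad = [
    {"type": "RUN_STARTED"},
    {"type": "TEXT_MESSAGE_CONTENT", "messageId": "m2", "delta": "Hi"},
    {"type": "RUN_FINISHED"},
]
print(validate_run(good))  # []
print(validate_run(bad))   # ['TEXT_MESSAGE_CONTENT for unopened message m2']
```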

Once you have defined an event generator, emit the AG-UI protocol lifecycle events that mark the start and end of an AG-UI + LangGraph agent run, as shown in the agent/main.py file.

```python
# FastAPI endpoint that handles agent execution requests
@app.post("/langgraph-agent")
async def langgraph_agent(input_data: RunAgentInput):
    """
    Main endpoint that processes agent requests and streams back events.

    Args:
        input_data (RunAgentInput): Contains thread_id, run_id, messages, tools, and state

    Returns:
        StreamingResponse: Server-sent events stream with agent execution updates
    """
    try:
        # Define async generator function to produce server-sent events
        async def event_generator():
            # ...

            # Step 2: Signal the start of agent execution
            yield encoder.encode(
                RunStartedEvent(
                    type=EventType.RUN_STARTED,
                    thread_id=input_data.thread_id,
                    run_id=input_data.run_id,
                )
            )

            # ...

            # Step 10: Signal the completion of the entire agent run
            yield encoder.encode(
                RunFinishedEvent(
                    type=EventType.RUN_FINISHED,
                    thread_id=input_data.thread_id,
                    run_id=input_data.run_id,
                )
            )

    except Exception as e:
        # Log any errors that occur during execution
        print(e)

    # Return the event generator as a streaming response
    return StreamingResponse(event_generator(), media_type="text/event-stream")
```

Step 5: Define AG-UI protocol state management events and integrate LangGraph agent workflow
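State management uses two events: `STATE_SNAPSHOT` sends the whole shared state, and `STATE_DELTA` sends a list of JSON Patch (RFC 6902) operations against it, such as the `add` to `/tool_logs/-` and the `replace` of `/tool_logs/{index}/status` in the chat node below. On the frontend, the AG-UI client applies these patches for you. To make the paths concrete, here is a deliberately minimal stdlib applier that supports only `add` and `replace`; it skips the rest of RFC 6902 (`remove`, `move`, `copy`, `test`, and `~0`/`~1` escaping), and `apply_patch` is a hypothetical name.

```python
import copy
from typing import Any


def _container(doc: Any, tokens: list[str]) -> Any:
    """Follow path tokens from the root and return the container they point to."""
    for token in tokens:
        doc = doc[int(token)] if isinstance(doc, list) else doc[token]
    return doc


def apply_patch(state: dict, ops: list[dict]) -> dict:
    """Apply JSON Patch 'add'/'replace' ops to a deep copy of state and return the copy."""
    new_state = copy.deepcopy(state)
    for op in ops:
        tokens = op["path"].split("/")[1:]  # "/tool_logs/0/status" -> ["tool_logs", "0", "status"]
        if not tokens:
            raise ValueError("root-level paths are not supported in this sketch")
        kind = op["op"]
        if kind not in ("add", "replace"):
            raise ValueError(f"unsupported op in this sketch: {kind}")
        parent = _container(new_state, tokens[:-1])
        key, value = tokens[-1], copy.deepcopy(op.get("value"))
        if isinstance(parent, list):
            if kind == "add":
                # "-" means "append to the end of the array"
                if key == "-":
                    parent.append(value)
                else:
                    parent.insert(int(key), value)
            else:
                parent[int(key)] = value
        else:
            if kind == "replace" and key not in parent:
                raise KeyError(f"cannot replace missing key: {key}")
            parent[key] = value
    return new_state


snapshot = {"available_cash": 1000000, "investment_summary": {},
            "investment_portfolio": [], "tool_logs": []}

after_add = apply_patch(snapshot, [{"op": "add", "path": "/tool_logs/-",
    "value": {"message": "Analyzing user query", "status": "processing", "id": "log-1"}}])
index = len(after_add["tool_logs"]) - 1
after_replace = apply_patch(after_add, [{"op": "replace",
    "path": f"/tool_logs/{index}/status", "value": "completed"}])
cleared = apply_patch(after_replace, [{"op": "replace", "path": "/tool_logs", "value": []}])

print(after_replace["tool_logs"])
# [{'message': 'Analyzing user query', 'status': 'completed', 'id': 'log-1'}]
print(cleared["tool_logs"], snapshot["tool_logs"])  # [] []
```

Working on a copy keeps each step's state intact, which makes it easy to see exactly what a single `STATE_DELTA` changed.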

After defining AG-UI protocol lifecycle events, integrate AG-UI protocol state management events into your LangGraph agent nodes, as shown in the stock analysis agent chat node in the agent/stock_analysis.py file.

```python
from ag_ui.core import StateDeltaEvent, EventType

# ...

async def chat_node(state: AgentState, config: RunnableConfig):
    """
    First node in the workflow: Analyzes user input and extracts investment parameters.

    This function:
    1. Creates a tool log entry for UI feedback
    2. Initializes the chat model (Gemini 2.5 Pro)
    3. Converts state messages to LangChain format
    4. Uses the LLM to extract structured data from user input
    5. Handles retries if the model doesn't respond properly
    6. Updates the conversation state with the response

    Args:
        state: Current agent state containing messages and context
        config: Runtime configuration including event emitters
    """
    try:
        # Step 1: Create and emit a tool log entry for UI feedback
        tool_log_id = str(uuid.uuid4())
        state["tool_logs"].append({
            "id": tool_log_id,
            "message": "Analyzing user query",
            "status": "processing",
        })
        config.get("configurable").get("emit_event")(
            StateDeltaEvent(
                type=EventType.STATE_DELTA,
                delta=[{
                    "op": "add",
                    "path": "/tool_logs/-",
                    "value": {
                        "message": "Analyzing user query",
                        "status": "processing",
                        "id": tool_log_id,
                    },
                }],
            )
        )
        await asyncio.sleep(0)  # Yield control to allow UI updates

        # ...

        # Step 4: Attempt to get structured response from the model with retries
        retry_counter = 0
        while True:
            # ...

            # Step 5a: Handle successful tool call response
            if response.tool_calls:
                # ...

                # Update tool log status to completed
                index = len(state["tool_logs"]) - 1
                config.get("configurable").get("emit_event")(
                    StateDeltaEvent(
                        type=EventType.STATE_DELTA,
                        delta=[{
                            "op": "replace",
                            "path": f"/tool_logs/{index}/status",
                            "value": "completed",
                        }],
                    )
                )
                await asyncio.sleep(0)
                return  # Success - exit the function

            # ...

            # Step 5c: Handle text response (no tool call)
            else:
                # ...

                # Update tool log status to completed
                index = len(state["tool_logs"]) - 1
                config.get("configurable").get("emit_event")(
                    StateDeltaEvent(
                        type=EventType.STATE_DELTA,
                        delta=[{
                            "op": "replace",
                            "path": f"/tool_logs/{index}/status",
                            "value": "completed",
                        }],
                    )
                )
                await asyncio.sleep(0)
                return  # Success - exit the function

            # ...

    except Exception as e:
        # Step 7: Handle any exceptions that occur during processing
        print(e)
        # `response` may not exist if the error happened before the model call,
        # so generate a fresh ID instead of reading response.id
        a_message = AssistantMessage(id=str(uuid.uuid4()), content="", role="assistant")
        state["messages"].append(a_message)
        return Command(
            goto="end",  # Skip to end node on error
        )

    # Step 8: Final cleanup - mark tool log as completed
    index = len(state["tool_logs"]) - 1
    config.get("configurable").get("emit_event")(
        StateDeltaEvent(
            type=EventType.STATE_DELTA,
            delta=[{
                "op": "replace",
                "path": f"/tool_logs/{index}/status",
                "value": "completed",
            }],
        )
    )
    await asyncio.sleep(0)
    return
```

Then define the remaining state management events and integrate your LangGraph agent workflow into the FastAPI endpoint, as shown in the agent/main.py file.

```python
# FastAPI endpoint that handles agent execution requests
@app.post("/langgraph-agent")
async def langgraph_agent(input_data: RunAgentInput):
    """
    Main endpoint that processes agent requests and streams back events.

    Args:
        input_data (RunAgentInput): Contains thread_id, run_id, messages, tools, and state

    Returns:
        StreamingResponse: Server-sent events stream with agent execution updates
    """
    try:
        # Define async generator function to produce server-sent events
        async def event_generator():
            # ...

            # Step 3: Send initial state snapshot to frontend
            yield encoder.encode(
                StateSnapshotEvent(
                    type=EventType.STATE_SNAPSHOT,
                    snapshot={
                        "available_cash": input_data.state["available_cash"],
                        "investment_summary": input_data.state["investment_summary"],
                        "investment_portfolio": input_data.state["investment_portfolio"],
                        "tool_logs": [],
                    },
                )
            )

            # Step 4: Initialize agent state with input data
            state = AgentState(
                tools=input_data.tools,
                messages=input_data.messages,
                be_stock_data=None,  # Will be populated by agent tools
                be_arguments=None,  # Will be populated by agent tools
                available_cash=input_data.state["available_cash"],
                investment_portfolio=input_data.state["investment_portfolio"],
                tool_logs=[],
            )

            # Step 5: Create and configure the LangGraph agent
            agent = await agent_graph()

            # Step 6: Start agent execution asynchronously
            # Extra top-level config keys like these reach the nodes under
            # config["configurable"], which is where chat_node reads emit_event
            agent_task = asyncio.create_task(
                agent.ainvoke(
                    state, config={"emit_event": emit_event, "message_id": message_id}
                )
            )

            # Step 7: Stream events from agent execution as they occur
            while True:
                try:
                    # Wait for events with short timeout to check if agent is done
                    event = await asyncio.wait_for(event_queue.get(), timeout=0.1)
                    yield encoder.encode(event)
                except asyncio.TimeoutError:
                    # Check if the agent execution has completed
                    if agent_task.done():
                        break

            # Step 8: Clear tool logs after execution
            yield encoder.encode(
                StateDeltaEvent(
                    type=EventType.STATE_DELTA,
                    delta=[
                        {
                            "op": "replace",
                            "path": "/tool_logs",
                            "value": [],
                        }
                    ],
                )
            )

            # ...

    except Exception as e:
        # Log any errors that occur during execution
        print(e)

    # Return the event generator as a streaming response
    return StreamingResponse(event_generator(), media_type="text/event-stream")
```

Step 6: Define AG-UI protocol tool call events to handle the human-in-the-loop breakpoint
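A human-in-the-loop breakpoint here is an assistant message whose tool call names a frontend action; the backend then replays that tool call to the client as `TOOL_CALL_START`, `TOOL_CALL_ARGS`, and `TOOL_CALL_END` events. The arguments travel as a JSON *string*, which is why the cash allocation node calls `json.dumps`, and the client concatenates every `TOOL_CALL_ARGS` delta for a call before parsing it. The stdlib sketch shows that round trip with plain dicts. The helper names are hypothetical, the event keys assume camelCase on the wire, the sample arguments are made-up values, and unlike the endpoint code (which forwards only the first tool call in one `TOOL_CALL_ARGS` event) it handles every tool call and splits arguments into several deltas.

```python
import json
import uuid


def make_tool_call_message(name: str, arguments: dict) -> dict:
    """Assistant message with one OpenAI-style tool call; arguments are JSON-encoded."""
    return {
        "id": str(uuid.uuid4()),
        "role": "assistant",
        "tool_calls": [{
            "id": str(uuid.uuid4()),
            "type": "function",
            "function": {"name": name, "arguments": json.dumps(arguments)},
        }],
    }


def tool_call_events(message: dict, chunk_size: int = 16) -> list[dict]:
    """Translate every tool call into START, one or more ARGS, then END events."""
    events = []
    for call in message["tool_calls"]:
        call_id, args = call["id"], call["function"]["arguments"]
        events.append({"type": "TOOL_CALL_START", "toolCallId": call_id,
                       "toolCallName": call["function"]["name"]})
        for i in range(0, len(args), chunk_size):
            events.append({"type": "TOOL_CALL_ARGS", "toolCallId": call_id,
                           "delta": args[i:i + chunk_size]})
        events.append({"type": "TOOL_CALL_END", "toolCallId": call_id})
    return events


def reassemble_args(events: list[dict], call_id: str) -> dict:
    """What a client does: join the ARGS deltas for one call, then parse the JSON."""
    raw = "".join(e["delta"] for e in events
                  if e["type"] == "TOOL_CALL_ARGS" and e["toolCallId"] == call_id)
    return json.loads(raw)


# Made-up sample arguments, shaped like the investment summary payload
summary = {"investment_summary": {"percent_return_per_stock": {"AAPL": 12.5}}}
msg = make_tool_call_message("render_standard_charts_and_table", summary)
events = tool_call_events(msg)
call_id = msg["tool_calls"][0]["id"]

print(events[0]["type"], events[-1]["type"])      # TOOL_CALL_START TOOL_CALL_END
print(reassemble_args(events, call_id) == summary)  # True
```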

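To add a human-in-the-loop breakpoint to your LangGraph agent, append an assistant message containing a tool call to the state. The tool call's name (here `render_standard_charts_and_table`) must match an action defined in the frontend, as shown in the cash allocation node in the agent/stock_analysis.py file.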

```python
async def cash_allocation_node(state: AgentState, config: RunnableConfig):
    """
    Third node in the workflow: Performs investment simulation and cash allocation.

    This is the most complex node that handles:
    1. Investment simulation (single-shot vs dollar-cost averaging)
    2. Cash allocation and share purchasing logic
    3. Portfolio performance calculation vs SPY benchmark
    4. Investment logging and error handling
    5. UI data preparation for charts and tables

    Args:
        state: Current agent state with stock data and investment parameters
        config: Runtime configuration including event emitters

    Returns:
        Command: Directs workflow to the insights node
    """
    # ...

    # Step 16: Add assistant message with chart rendering tool call
    # The tool name matches the frontend action that renders charts and waits for the user
    state["messages"].append(
        AssistantMessage(
            role="assistant",
            tool_calls=[
                {
                    "id": str(uuid.uuid4()),
                    "type": "function",
                    "function": {
                        "name": "render_standard_charts_and_table",
                        "arguments": json.dumps(
                            {"investment_summary": state["investment_summary"]}
                        ),
                    },
                }
            ],
            id=str(uuid.uuid4()),
        )
    )

    # Step 17: Update tool log status to completed
    index = len(state["tool_logs"]) - 1
    config.get("configurable").get("emit_event")(
        StateDeltaEvent(
            type=EventType.STATE_DELTA,
            delta=[
                {
                    "op": "replace",
                    "path": f"/tool_logs/{index}/status",
                    "value": "completed",
                }
            ],
        )
    )
    await asyncio.sleep(0)

    # Step 18: Direct workflow to the insights generation node
    # (the graph has no "ui_decision" node, so the target must be "insights")
    return Command(goto="insights", update=state)
```

Then define the AG-UI protocol tool call events. The agent uses them to trigger a frontend action, identified by its tool name, in order to request user feedback, as shown in the agent/main.py file.

```python
# FastAPI endpoint that handles agent execution requests
@app.post("/langgraph-agent")
async def langgraph_agent(input_data: RunAgentInput):
    """
    Main endpoint that processes agent requests and streams back events.

    Args:
        input_data (RunAgentInput): Contains thread_id, run_id, messages, tools, and state

    Returns:
        StreamingResponse: Server-sent events stream with agent execution updates
    """
    try:
        # Define async generator function to produce server-sent events
        async def event_generator():
            # ...

            # Step 9: Handle the final message from the agent
            if state["messages"][-1].role == "assistant":
                # Check if the assistant made tool calls
                if state["messages"][-1].tool_calls:
                    # Step 9a: Stream tool call events if tools were used
                    # Signal the start of the tool call
                    yield encoder.encode(
                        ToolCallStartEvent(
                            type=EventType.TOOL_CALL_START,
                            tool_call_id=state["messages"][-1].tool_calls[0].id,
                            tool_call_name=state["messages"][-1]
                            .tool_calls[0]
                            .function.name,
                        )
                    )

                    # Send the tool call arguments (a JSON string)
                    yield encoder.encode(
                        ToolCallArgsEvent(
                            type=EventType.TOOL_CALL_ARGS,
                            tool_call_id=state["messages"][-1].tool_calls[0].id,
                            delta=state["messages"][-1]
                            .tool_calls[0]
                            .function.arguments,
                        )
                    )

                    # Signal the end of the tool call
                    yield encoder.encode(
                        ToolCallEndEvent(
                            type=EventType.TOOL_CALL_END,
                            tool_call_id=state["messages"][-1].tool_calls[0].id,
                        )
                    )
                else:
                    ...  # Step 9b: text message events (see Step 7)

    except Exception as e:
        # Log any errors that occur during execution
        print(e)

    # Return the event generator as a streaming response
    return StreamingResponse(event_generator(), media_type="text/event-stream")
```

Step 7: Define AG-UI protocol text message events
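The endpoint below streams an already-complete reply by cutting it into at most five pieces and sending each as a `TEXT_MESSAGE_CONTENT` delta between `TEXT_MESSAGE_START` and `TEXT_MESSAGE_END`. Pulled out into plain functions (the names `split_into_parts` and `text_message_events` are hypothetical, and the camelCase event keys are assumed), that splitting logic is easy to check: the pieces always rejoin to the original text and never exceed `n_parts`.

```python
def split_into_parts(content: str, n_parts: int = 5) -> list[str]:
    """Split content into at most n_parts contiguous chunks (same logic as the endpoint)."""
    if not content:
        return []
    part_length = max(1, len(content) // n_parts)
    parts = [content[i:i + part_length] for i in range(0, len(content), part_length)]
    # Integer division can leave extra short parts; fold them into the last one
    if len(parts) > n_parts:
        parts = parts[:n_parts - 1] + ["".join(parts[n_parts - 1:])]
    return parts


def text_message_events(message_id: str, content: str) -> list[dict]:
    """START, one CONTENT event per part, then END."""
    events = [{"type": "TEXT_MESSAGE_START", "messageId": message_id, "role": "assistant"}]
    for part in split_into_parts(content):
        events.append({"type": "TEXT_MESSAGE_CONTENT", "messageId": message_id, "delta": part})
    events.append({"type": "TEXT_MESSAGE_END", "messageId": message_id})
    return events


reply = "Here is your portfolio analysis."
parts = split_into_parts(reply)
print(len(parts), "".join(parts) == reply)  # 5 True
```

Because every part is non-empty, each `TEXT_MESSAGE_CONTENT` event carries a real delta; the 0.5 second pause between chunks in the endpoint is purely cosmetic.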

Once you define AG-UI protocol tool call events, define AG-UI protocol text message events in order to handle streaming agent responses to the frontend, as shown in the agent/main.py file.

```python
# FastAPI endpoint that handles agent execution requests
@app.post("/langgraph-agent")
async def langgraph_agent(input_data: RunAgentInput):
    """
    Main endpoint that processes agent requests and streams back events.

    Args:
        input_data (RunAgentInput): Contains thread_id, run_id, messages, tools, and state

    Returns:
        StreamingResponse: Server-sent events stream with agent execution updates
    """
    try:
        # Define async generator function to produce server-sent events
        async def event_generator():
            # ...

            # Step 9: Handle the final message from the agent
            if state["messages"][-1].role == "assistant":
                # Check if the assistant made tool calls
                if state["messages"][-1].tool_calls:
                    ...  # Step 9a: tool call events (see Step 6)
                else:
                    # Step 9b: Stream text message if no tools were used
                    # Signal the start of text message
                    yield encoder.encode(
                        TextMessageStartEvent(
                            type=EventType.TEXT_MESSAGE_START,
                            message_id=message_id,
                            role="assistant",
                        )
                    )

                    # Stream the message content in chunks for better UX
                    if state["messages"][-1].content:
                        content = state["messages"][-1].content

                        # Split content into 5 parts for gradual streaming
                        n_parts = 5
                        part_length = max(1, len(content) // n_parts)
                        parts = [content[i:i + part_length] for i in range(0, len(content), part_length)]

                        # Handle rounding by merging extra parts into the last one
                        if len(parts) > n_parts:
                            parts = parts[:n_parts - 1] + ["".join(parts[n_parts - 1:])]

                        # Stream each part with a delay for typing effect
                        for part in parts:
                            yield encoder.encode(
                                TextMessageContentEvent(
                                    type=EventType.TEXT_MESSAGE_CONTENT,
                                    message_id=message_id,
                                    delta=part,
                                )
                            )
                            await asyncio.sleep(0.5)  # 500ms delay between chunks
                    else:
                        # Send error message if content is empty
                        yield encoder.encode(
                            TextMessageContentEvent(
                                type=EventType.TEXT_MESSAGE_CONTENT,
                                message_id=message_id,
                                delta="Something went wrong! Please try again.",
                            )
                        )

                    # Signal the end of text message
                    yield encoder.encode(
                        TextMessageEndEvent(
                            type=EventType.TEXT_MESSAGE_END,
                            message_id=message_id,
                        )
                    )

            # ...

    except Exception as e:
        # Log any errors that occur during execution
        print(e)

    # Return the event generator as a streaming response
    return StreamingResponse(event_generator(), media_type="text/event-stream")
```

Congratulations! You have integrated a LangGraph agent with the AG-UI protocol. Let’s now see how to add a frontend to the AG-UI + LangGraph agent.

Integrating a frontend to the AG-UI + LangGraph agent using CopilotKit

In this section, you will learn how to create a connection between your AG-UI + LangGraph agent and a frontend using CopilotKit.

Let’s get started.

First, navigate to the frontend directory:

```bash
cd frontend
```

Next, create a `.env` file in the `frontend` directory with your Google Gemini API key:

```
GOOGLE_API_KEY=your-gemini-key-here
```

Then install the dependencies:

```bash
pnpm install
```

After that, start the development server:

```bash
pnpm run dev
```

With the agent backend still running on port 8000, navigate to http://localhost:3000, and you should see the AG-UI + LangGraph agent frontend up and running.

Let’s now see how to build the frontend UI for the AG-UI + LangGraph agent using CopilotKit.

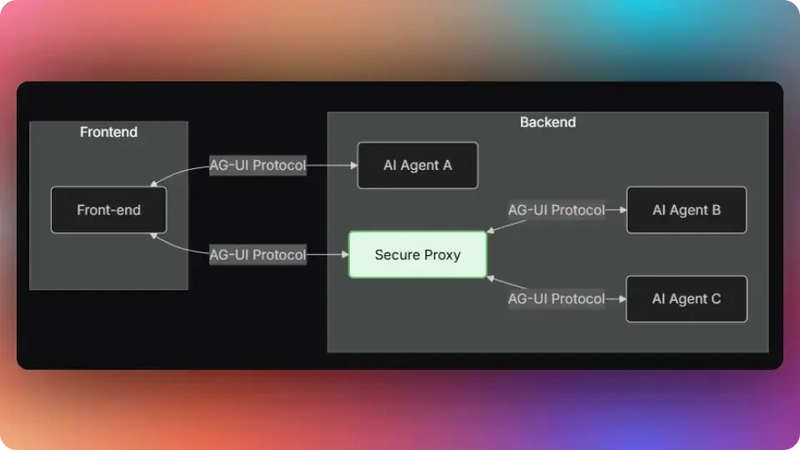

Step 1: Create an HttpAgent instance

HttpAgent is a client from the AG-UI Library that bridges your frontend application with any AG-UI-compatible AI agent’s server.

To create an HttpAgent instance, define it in an API route as shown in the src/app/api/copilotkit/route.ts file.

```typescript
// Import the HttpAgent for making HTTP requests to the backend
import { HttpAgent } from "@ag-ui/client";

// Import CopilotKit runtime components for setting up the API endpoint
import {
  CopilotRuntime,
  ExperimentalEmptyAdapter,
  copilotRuntimeNextJSAppRouterEndpoint,
  GoogleGenerativeAIAdapter,
} from "@copilotkit/runtime";

// Import NextRequest type for handling Next.js API requests
import { NextRequest } from "next/server";

// Gemini-backed service adapter used by the CopilotKit runtime
const serviceAdapter = new GoogleGenerativeAIAdapter();

// Create a new HttpAgent instance that connects to the LangGraph stock backend running locally
const stockAgent = new HttpAgent({
  url: "http://127.0.0.1:8000/langgraph-agent",
});

// Initialize the CopilotKit runtime with our stock agent
const runtime = new CopilotRuntime({
  agents: {
    stockAgent, // Register the stock agent with the runtime
  },
});

/**
 * Define the POST handler for the API endpoint
 * This function handles incoming POST requests to the /api/copilotkit endpoint
 */
export const POST = async (req: NextRequest) => {
  // Configure the CopilotKit endpoint for the Next.js app router
  const { handleRequest } = copilotRuntimeNextJSAppRouterEndpoint({
    runtime, // Use the runtime with our stock agent
    serviceAdapter,
    endpoint: "/api/copilotkit", // Define the API endpoint path
  });

  // Process the incoming request with the CopilotKit handler
  return handleRequest(req);
};
```

Step 2: Set up CopilotKit provider

To set up the CopilotKit provider, wrap the Copilot-aware parts of your application in the `<CopilotKit>` component.

For most use cases, it's appropriate to wrap the CopilotKit provider around the entire app, e.g., in your layout.tsx, as shown below in the src/app/layout.tsx file.

```tsx
// Next.js imports for metadata and font handling
import type { Metadata } from "next";
import { Geist, Geist_Mono } from "next/font/google";

// Global styles for the application
import "./globals.css";

// CopilotKit UI styles for AI components
import "@copilotkit/react-ui/styles.css";

// CopilotKit core component for AI functionality
import { CopilotKit } from "@copilotkit/react-core";

// Configure Geist Sans font with CSS variables for consistent typography
const geistSans = Geist({
  variable: "--font-geist-sans",
  subsets: ["latin"],
});

// Configure Geist Mono font for code and monospace text
const geistMono = Geist_Mono({
  variable: "--font-geist-mono",
  subsets: ["latin"],
});

// Metadata configuration for SEO and page information
export const metadata: Metadata = {
  title: "AI Stock Portfolio",
  description: "AI Stock Portfolio",
};

// Root layout component that wraps all pages in the application
export default function RootLayout({
  children,
}: Readonly<{
  children: React.ReactNode;
}>) {
  return (
    <html lang="en">
      <body
        className={`${geistSans.variable} ${geistMono.variable} antialiased`}>
        {/* CopilotKit wrapper that enables AI functionality throughout the app */}
        {/* runtimeUrl points to the API endpoint for AI backend communication */}
        {/* agent specifies which AI agent to use (stockAgent for stock analysis) */}
        <CopilotKit runtimeUrl="/api/copilotkit" agent="stockAgent">
          {children}
        </CopilotKit>
      </body>
    </html>
  );
}
```

Step 3: Set up a Copilot chat component

CopilotKit ships with several built-in chat components, including CopilotPopup, CopilotSidebar, and CopilotChat.

To set up a Copilot chat component, define it as shown in the src/app/components/prompt-panel.tsx file.

```tsx
// Client-side component directive for Next.js
"use client";

import type React from "react";

// CopilotKit chat component for AI interactions
import { CopilotChat } from "@copilotkit/react-ui";

// Props interface for the PromptPanel component
interface PromptPanelProps {
  // Amount of available cash for investment, displayed in the panel
  availableCash: number;
}

// Main component for the AI chat interface panel
export function PromptPanel({ availableCash }: PromptPanelProps) {
  // Utility function to format numbers as USD currency
  // Removes decimal places for cleaner display of large amounts
  const formatCurrency = (amount: number) => {
    return new Intl.NumberFormat("en-US", {
      style: "currency",
      currency: "USD",
      minimumFractionDigits: 0,
      maximumFractionDigits: 0,
    }).format(amount);
  };

  return (
    <div className="h-full flex flex-col bg-white">
      {/* Header section with title, description, and cash display */}
      <div className="p-4 border-b border-[#D8D8E5] bg-[#FAFCFA]">
        {/* Title section with icon and branding */}
        <div className="flex items-center gap-2 mb-2">
          <span className="text-xl">🪁</span>
          <div>
            <h1 className="text-lg font-semibold text-[#030507] font-['Roobert']">
              Portfolio Chat
            </h1>
            {/* Pro badge indicator */}
            <div className="inline-block px-2 py-0.5 bg-[#BEC9FF] text-[#030507] text-xs font-semibold uppercase rounded">
              PRO
            </div>
          </div>
        </div>

        {/* Description of the AI agent's capabilities */}
        <p className="text-xs text-[#575758]">
          Interact with the LangGraph-powered AI agent for portfolio
          visualization and analysis
        </p>

        {/* Available Cash Display section */}
        <div className="mt-3 p-2 bg-[#86ECE4]/10 rounded-lg">
          <div className="text-xs text-[#575758] font-medium">
            Available Cash
          </div>
          <div className="text-sm font-semibold text-[#030507] font-['Roobert']">
            {formatCurrency(availableCash)}
          </div>
        </div>
      </div>

      {/* CopilotKit chat interface with custom styling and initial message */}
      {/* Takes up majority of the panel height for conversation */}
      <CopilotChat
        className="h-[78vh] p-2"
        labels={{
          // Initial welcome message explaining the AI agent's capabilities and limitations
          initial: `I am a LangGraph AI agent designed to analyze investment opportunities and track stock performance over time. How can I help you with your investment query? For example, you can ask me to analyze a stock like "Invest in Apple with 10k dollars since Jan 2023". \n\nNote: The AI agent has access to stock data from the past 4 years only`,
        }}
      />
    </div>
  );
}
```

Step 4: Sync AG-UI + LangGraph agent state with the frontend using CopilotKit hooks

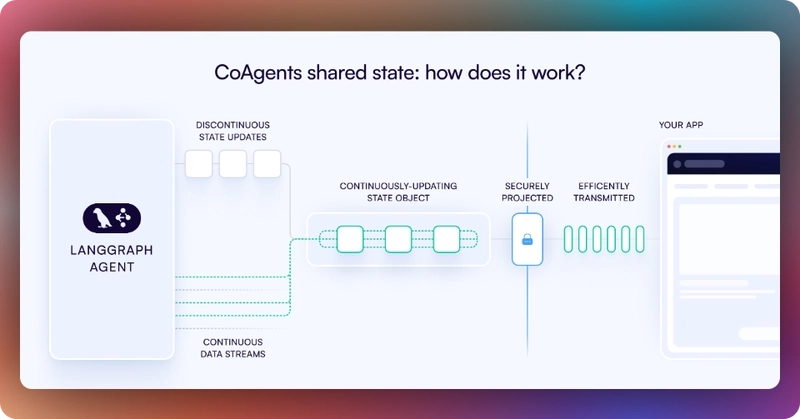

In CopilotKit, CoAgents maintain a shared state that seamlessly connects your frontend UI with the agent's execution. This shared state system allows you to:

- Display the agent's current progress and intermediate results

- Update the agent's state through UI interactions

- React to state changes in real-time across your application

You can learn more about CoAgents’ shared state here on the CopilotKit docs.

To sync your AG-UI + LangGraph agent state with the frontend, use the CopilotKit useCoAgent hook to share the AG-UI + LangGraph agent state with your frontend, as shown in the src/app/page.tsx file.

```tsx
"use client";

import { useState } from "react";
import {
  useCoAgent,
} from "@copilotkit/react-core";

// ...

export interface SandBoxPortfolioState {
  performanceData: Array<{
    date: string;
    portfolio: number;
    spy: number;
  }>;
}

export interface InvestmentPortfolio {
  ticker: string;
  amount: number;
}

export default function OpenStocksCanvas() {
  // ...

  const [totalCash, setTotalCash] = useState(1000000);

  const { state, setState } = useCoAgent({
    name: "stockAgent",
    // These keys are what the backend reads from input_data.state
    initialState: {
      available_cash: totalCash,
      investment_summary: {} as any,
      investment_portfolio: [] as InvestmentPortfolio[],
    },
  });

  // ...

  return (
    <div className="h-screen bg-[#FAFCFA] flex overflow-hidden">
      {/* ... */}
    </div>
  );
}
```

Then render the AG-UI + LangGraph agent's state in the chat UI, which keeps the user informed about what the agent is doing, right in the context of the conversation.

To render the AG-UI + LangGraph agent's state in the chat UI, you can use the useCoAgentStateRender hook, as shown in the src/app/page.tsx file.

```tsx
"use client";

import {
  useCoAgentStateRender,
} from "@copilotkit/react-core";
import { ToolLogs } from "./components/tool-logs";

// ...

export default function OpenStocksCanvas() {
  // ...

  useCoAgentStateRender({
    name: "stockAgent",
    render: ({ state }) => <ToolLogs logs={state.tool_logs} />,
  });

  // ...

  return (
    <div className="h-screen bg-[#FAFCFA] flex overflow-hidden">
      {/* ... */}
    </div>
  );
}
```

If you execute a query in the chat, you should see the AG-UI + LangGraph agent’s task execution state rendered in the chat UI, as shown below.

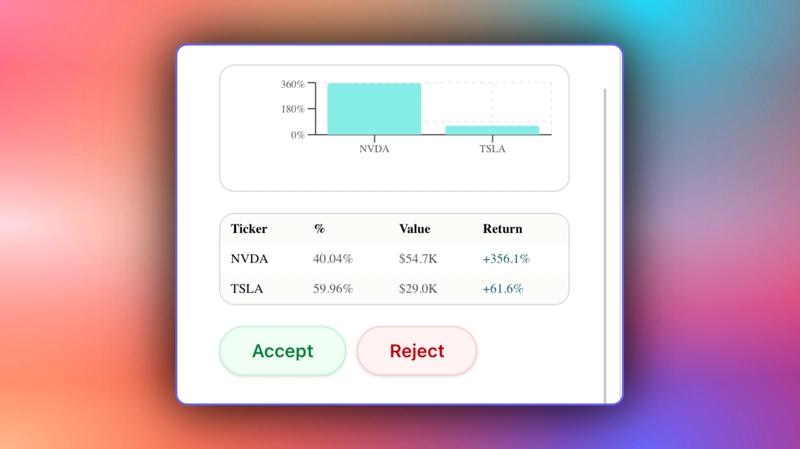
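The initialState passed to useCoAgent earlier does not include tool_logs, so the state render callback may run before the agent has written that field. If ToolLogs does not handle an undefined value, a small normalizing helper avoids the problem. This is a sketch under assumptions. The ToolLog shape and the logsFromState name are hypothetical, and the real shape is whatever your agent emits.

```typescript
// Hypothetical log entry shape; match it to whatever your agent writes into tool_logs.
interface ToolLog {
  id: string;
  message: string;
  status: "processing" | "completed";
}

// Only the field the state renderer reads; the other agent fields are omitted.
interface StockAgentRenderState {
  tool_logs?: ToolLog[];
}

// Always hand the renderer an array, even before the agent has written tool_logs.
function logsFromState(state: StockAgentRenderState | undefined): ToolLog[] {
  return state?.tool_logs ?? [];
}

console.log(logsFromState({}).length); // 0
console.log(
  logsFromState({ tool_logs: [{ id: "1", message: "Fetching prices", status: "completed" }] }).length
); // 1
```

Inside the render callback, you would then pass logsFromState(state) to ToolLogs instead of state.tool_logs.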

Step 5: Implementing Human-in-the-Loop (HITL) in the frontend

Human-in-the-loop (HITL) allows agents to request human input or approval during execution, making AI systems more reliable and trustworthy. This pattern is essential when building AI applications that need to handle complex decisions or actions that require human judgment.

You can learn more about Human-in-the-Loop here in the CopilotKit docs.
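Conceptually, a HITL step turns a tool call into a pause. The call only produces its result once a human answers. The sketch below models that pause with a plain Promise whose resolver is handed to the UI. It illustrates the pattern only and is not CopilotKit's implementation. `waitForHumanResponse` and `runToolCall` are hypothetical names.

```typescript
type Respond = (answer: string) => void;

// Pause until the UI supplies an answer. The render callback receives `respond`,
// much like the Accept/Reject buttons do in the chat UI.
function waitForHumanResponse(render: (respond: Respond) => void): Promise<string> {
  // A Promise settles only once, so any respond() calls after the first are ignored.
  return new Promise<string>((resolve) => render(resolve));
}

// Hypothetical tool-call handler: the result reaches the agent only after a human answers.
async function runToolCall(render: (respond: Respond) => void): Promise<string> {
  const answer = await waitForHumanResponse(render);
  return `Tool result: ${answer}`;
}

// Simulate a user clicking "Accept" shortly after the prompt appears.
runToolCall((respond) => {
  setTimeout(() => respond("Data rendered successfully."), 10);
}).then((result) => console.log(result)); // Tool result: Data rendered successfully.
```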

To implement Human-in-the-Loop (HITL) in the frontend, use the CopilotKit useCopilotAction hook with the renderAndWaitForResponse option. Its render function receives the tool call's args, its status, and a respond callback. The agent only receives the tool result once respond is called, which lets the UI return the user's decision asynchronously, as shown in the src/app/page.tsx file.

```tsx
"use client";

import { useCopilotAction } from "@copilotkit/react-core";

// ...

export default function OpenStocksCanvas() {
  // ...
  // totalCash, setTotalCash, state, setState, currentState, setCurrentState and
  // setInvestedAmount, plus the chart and table components, come from the elided code.

  useCopilotAction({
    name: "render_standard_charts_and_table",
    description:
      "This is an action to render a standard chart and table. The chart can be a bar chart or a line chart. The table can be a table of data.",
    // Render the proposed results in the chat and wait for the user's decision.
    renderAndWaitForResponse: ({ args, respond, status }) => {
      return (
        <>
          {args?.investment_summary?.percent_allocation_per_stock &&
            args?.investment_summary?.percent_return_per_stock &&
            args?.investment_summary?.performanceData && (
              <>
                <div className="flex flex-col gap-4">
                  <LineChartComponent
                    data={args?.investment_summary?.performanceData}
                    size="small"
                  />
                  <BarChartComponent
                    data={Object.entries(
                      args?.investment_summary?.percent_return_per_stock
                    ).map(([ticker, return1]) => ({
                      ticker,
                      return: return1 as number,
                    }))}
                    size="small"
                  />
                  <AllocationTableComponent
                    allocations={Object.entries(
                      args?.investment_summary?.percent_allocation_per_stock
                    ).map(([ticker, allocation]) => ({
                      ticker,
                      allocation: allocation as number,
                      currentValue:
                        args?.investment_summary.final_prices[ticker] *
                        args?.investment_summary.holdings[ticker],
                      totalReturn:
                        args?.investment_summary.percent_return_per_stock[ticker],
                    }))}
                    size="small"
                  />
                </div>
                <button
                  hidden={status === "complete"}
                  className="mt-4 rounded-full px-6 py-2 bg-green-50 text-green-700 border border-green-200 shadow-sm hover:bg-green-100 transition-colors font-semibold text-sm"
                  onClick={() => {
                    if (respond) {
                      // Read the new cash value from args: totalCash keeps its old
                      // value until the next render, so it can't be reused below.
                      const newCash = args?.investment_summary?.cash;
                      setTotalCash(newCash);
                      // Copy the accepted results into the canvas state.
                      setCurrentState({
                        ...currentState,
                        returnsData: Object.entries(
                          args?.investment_summary?.percent_return_per_stock
                        ).map(([ticker, return1]) => ({
                          ticker,
                          return: return1 as number,
                        })),
                        allocations: Object.entries(
                          args?.investment_summary?.percent_allocation_per_stock
                        ).map(([ticker, allocation]) => ({
                          ticker,
                          allocation: allocation as number,
                          currentValue:
                            args?.investment_summary?.final_prices[ticker] *
                            args?.investment_summary?.holdings[ticker],
                          totalReturn:
                            args?.investment_summary?.percent_return_per_stock[ticker],
                        })),
                        performanceData: args?.investment_summary?.performanceData,
                        bullInsights: args?.insights?.bullInsights || [],
                        bearInsights: args?.insights?.bearInsights || [],
                        currentPortfolioValue: args?.investment_summary?.total_value,
                        totalReturns: (
                          Object.values(args?.investment_summary?.returns) as number[]
                        ).reduce((acc, val) => acc + val, 0),
                      });
                      setInvestedAmount(
                        (
                          Object.values(
                            args?.investment_summary?.total_invested_per_stock
                          ) as number[]
                        ).reduce((acc, val) => acc + val, 0)
                      );
                      // Sync the new cash balance back to the agent's shared state.
                      setState({
                        ...state,
                        available_cash: newCash,
                      });
                      // Resume the agent with the user's approval.
                      respond(
                        "Data rendered successfully. Provide summary of the investments by not making any tool calls"
                      );
                    }
                  }}>
                  Accept
                </button>
                <button
                  hidden={status === "complete"}
                  className="rounded-full px-6 py-2 bg-red-50 text-red-700 border border-red-200 shadow-sm hover:bg-red-100 transition-colors font-semibold text-sm ml-2"
                  onClick={() => {
                    if (respond) {
                      // Resume the agent with the user's rejection.
                      respond(
                        "Data rendering rejected. Just give a summary of the rejected investments by not making any tool calls"
                      );
                    }
                  }}>
                  Reject
                </button>
              </>
            )}
        </>
      );
    },
  });

  // ...

  return (
    <div className="h-screen bg-[#FAFCFA] flex overflow-hidden">
      {/* ... */}
    </div>
  );
}
```

When the agent triggers a frontend action by its tool/action name to request human input or feedback during execution, the end user is prompted with a choice rendered inside the chat UI. The user then responds by pressing a button in the chat, as shown below.
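The Accept handler in the HITL snippet builds the same returns and allocation rows twice: once for the chat preview and once for the canvas state. One way to keep the two in sync is to move the conversion into plain helper functions. The sketch below is a hypothetical refactor, not the project's code. It assumes investment_summary carries the ticker-keyed number maps the snippet reads. The helper names are illustrative.

```typescript
// Ticker-keyed number maps, assumed from the fields the Accept handler reads.
type TickerMap = Record<string, number>;

interface InvestmentSummary {
  percent_return_per_stock: TickerMap;
  percent_allocation_per_stock: TickerMap;
  final_prices: TickerMap;
  holdings: TickerMap;
  returns: TickerMap;
  total_invested_per_stock: TickerMap;
}

interface ReturnRow {
  ticker: string;
  return: number;
}

interface AllocationRow {
  ticker: string;
  allocation: number;
  currentValue: number;
  totalReturn: number;
}

// Rows for the bar chart: one percent return per ticker.
function toReturnsData(s: InvestmentSummary): ReturnRow[] {
  return Object.entries(s.percent_return_per_stock).map(([ticker, value]) => ({
    ticker,
    return: value,
  }));
}

// Rows for the allocation table; current value = final price x shares held.
function toAllocations(s: InvestmentSummary): AllocationRow[] {
  return Object.entries(s.percent_allocation_per_stock).map(([ticker, allocation]) => ({
    ticker,
    allocation,
    // Missing tickers fall back to 0 here instead of producing NaN.
    currentValue: (s.final_prices[ticker] ?? 0) * (s.holdings[ticker] ?? 0),
    totalReturn: s.percent_return_per_stock[ticker] ?? 0,
  }));
}

// Sum a ticker map, e.g. for totalReturns or the total invested amount.
function sumValues(m: TickerMap): number {
  return Object.values(m).reduce((acc, v) => acc + v, 0);
}

// Made-up example data.
const summary: InvestmentSummary = {
  percent_return_per_stock: { AAPL: 20, MSFT: -5 },
  percent_allocation_per_stock: { AAPL: 55.6, MSFT: 44.4 },
  final_prices: { AAPL: 200, MSFT: 380 },
  holdings: { AAPL: 3, MSFT: 1 },
  returns: { AAPL: 100, MSFT: -20 },
  total_invested_per_stock: { AAPL: 500, MSFT: 400 },
};

console.log(toAllocations(summary)[0].currentValue); // 600
console.log(sumValues(summary.returns)); // 80
console.log(sumValues(summary.total_invested_per_stock)); // 900
```

With helpers like these, the Accept handler could set returnsData: toReturnsData(summary), allocations: toAllocations(summary) and totalReturns: sumValues(summary.returns). The chat preview could then reuse the same functions.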

Step 6: Streaming AG-UI + LangGraph agent responses in the frontend

To stream your AG-UI + LangGraph agent's results in the frontend, pass the portfolio state built from the agent's output to the frontend components. This is the currentState that is filled in when the user clicks Accept, as shown in the src/app/page.tsx file.

```tsx
"use client";

import { useEffect, useState } from "react";
import { PromptPanel } from "./components/prompt-panel";
import { GenerativeCanvas } from "./components/generative-canvas";
import { ComponentTree } from "./components/component-tree";
import { CashPanel } from "./components/cash-panel";

// ...

export default function OpenStocksCanvas() {
  // Portfolio results shown on the canvas; updated when the user accepts the agent's output.
  const [currentState, setCurrentState] = useState<PortfolioState>({
    id: "",
    trigger: "",
    performanceData: [],
    allocations: [],
    returnsData: [],
    bullInsights: [],
    bearInsights: [],
    currentPortfolioValue: 0,
    totalReturns: 0,
  });
  const [sandBoxPortfolio, setSandBoxPortfolio] = useState<SandBoxPortfolioState[]>([]);
  const [selectedStock, setSelectedStock] = useState<string | null>(null);

  // ... (totalCash, investedAmount, showComponentTree, the useCoAgent state
  // and the actions shown earlier are elided here)

  return (
    <div className="h-screen bg-[#FAFCFA] flex overflow-hidden">
      {/* Left Panel - Prompt Input */}
      <div className="w-85 border-r border-[#D8D8E5] bg-white flex-shrink-0">
        <PromptPanel availableCash={totalCash} />
      </div>

      {/* Center Panel - Generative Canvas */}
      <div className="flex-1 relative min-w-0">
        {/* Top Bar with Cash Info */}
        <div className="absolute top-0 left-0 right-0 bg-white border-b border-[#D8D8E5] p-4 z-10">
          <CashPanel
            totalCash={totalCash}
            investedAmount={investedAmount}
            currentPortfolioValue={
              (totalCash + investedAmount + currentState.totalReturns) || 0
            }
            onTotalCashChange={setTotalCash}
            onStateCashChange={setState}
          />
        </div>

        <div className="pt-20 h-full">
          <GenerativeCanvas
            setSelectedStock={setSelectedStock}
            portfolioState={currentState}
            sandBoxPortfolio={sandBoxPortfolio}
            setSandBoxPortfolio={setSandBoxPortfolio}
          />
        </div>
      </div>

      {/* Right Panel - Component Tree (Optional) */}
      {showComponentTree && (
        <div className="w-64 border-l border-[#D8D8E5] bg-white flex-shrink-0">
          <ComponentTree portfolioState={currentState} />
        </div>
      )}
    </div>
  );
}
```

If you query your agent and approve its feedback request, you should see the agent's response or results streaming in the UI, as shown below.
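Under the hood, the streamed chat text arrives as the AG-UI text message events described at the start of this guide. TEXT_MESSAGE_START opens a message, each TEXT_MESSAGE_CONTENT carries a chunk of text, and TEXT_MESSAGE_END closes the message. CopilotKit handles this for you. The sketch below only illustrates how such a stream can be folded into complete messages. The event fields (`messageId`, `delta`) are simplified assumptions rather than the exact SDK types.

```typescript
type TextEvent =
  | { type: "TEXT_MESSAGE_START"; messageId: string }
  | { type: "TEXT_MESSAGE_CONTENT"; messageId: string; delta: string }
  | { type: "TEXT_MESSAGE_END"; messageId: string };

interface StreamedMessage {
  text: string;
  done: boolean;
}

// Fold one event into a new map of messages keyed by messageId.
function applyTextEvent(
  messages: Map<string, StreamedMessage>,
  event: TextEvent
): Map<string, StreamedMessage> {
  const next = new Map(messages);
  const current = next.get(event.messageId) ?? { text: "", done: false };
  switch (event.type) {
    case "TEXT_MESSAGE_START":
      next.set(event.messageId, { text: "", done: false });
      break;
    case "TEXT_MESSAGE_CONTENT":
      // Append the chunk so the UI can re-render the partial text.
      next.set(event.messageId, { ...current, text: current.text + event.delta });
      break;
    case "TEXT_MESSAGE_END":
      next.set(event.messageId, { ...current, done: true });
      break;
  }
  return next;
}

const events: TextEvent[] = [
  { type: "TEXT_MESSAGE_START", messageId: "m1" },
  { type: "TEXT_MESSAGE_CONTENT", messageId: "m1", delta: "Your portfolio " },
  { type: "TEXT_MESSAGE_CONTENT", messageId: "m1", delta: "is ready." },
  { type: "TEXT_MESSAGE_END", messageId: "m1" },
];
const messages = events.reduce(applyTextEvent, new Map<string, StreamedMessage>());
console.log(messages.get("m1")?.text); // Your portfolio is ready.
```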

Conclusion

In this guide, we have walked through the steps of integrating LangGraph agents with the AG-UI protocol and then adding a frontend to the agents using CopilotKit.

While we've explored a couple of features, we have barely scratched the surface of the countless use cases for CopilotKit, which range from interactive AI chatbots to agentic solutions. In essence, CopilotKit lets you add a ton of useful AI capabilities to your products in minutes.

Hopefully, this guide makes it easier for you to integrate AI-powered Copilots into your existing application.

Follow CopilotKit on Twitter and say hi, and if you'd like to build something cool, join the Discord community.