How CopilotKit + Mastra Enable Real-Time Agent Interaction

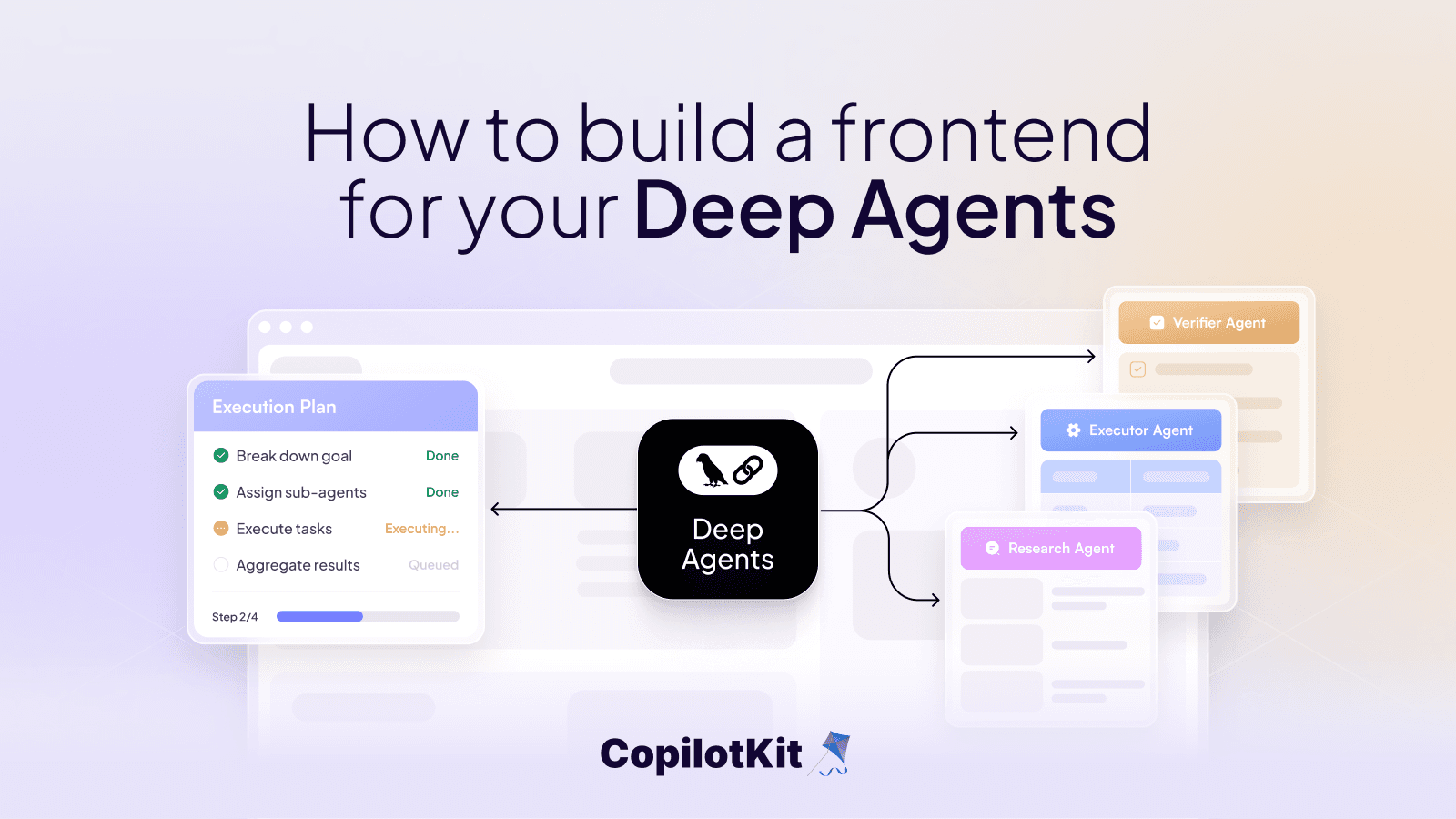

One of the strongest use cases for copilots is co-creation: working side by side with a user to draft, edit, or build something interactively. The other major use case we hear about from developers, and even from large teams, is workflow copilots, which are really just a series of co-creation steps chained together.

Together, these two patterns represent the majority of what developers are building with agents today.

That’s why we built the AG-UI Canvas Template, powered by CopilotKit + Mastra.

We'll get back to the Canvas, but first let's talk about agentic apps and where you should start.

Mastra: The TypeScript Agent Framework

Mastra is an open-source TypeScript agent framework for building agentic backends.

It gives developers the building blocks to go from simple LLM calls to full agent workflows. Some of the core capabilities include:

- Multi-LLM support: Easily switch between providers like OpenAI, Anthropic, Gemini, and local models. Stream responses with type-safe APIs.

- Agents with tools: Extend agents with typed functions and external API integrations. Agents can reason, then call into your systems to get work done.

- Workflows: Durable, graph-based workflows with branching, looping, and error handling. Workflows persist state across steps and can even pause for human input.

- RAG support: Out-of-the-box chunking, embedding, and vector search for retrieval-augmented generation.

- Observability: Built-in OpenTelemetry tracing for debugging and monitoring agents in production.

- Evaluations: Automated evals (model-graded, rule-based, statistical) so you can test and trust your agent outputs.
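To make the idea of workflows that persist state and pause for human input more concrete, here is a framework-agnostic sketch in TypeScript. It is not Mastra's workflow API: `runWorkflow`, `Step`, and the snapshot shape are hypothetical names chosen for illustration. It simply shows the pattern of chaining steps, carrying state between them, and suspending until a person responds.

```typescript
// Framework-agnostic sketch: a workflow whose steps share state and can
// suspend for human input. All names are hypothetical; this is NOT Mastra's API.

type State = Record<string, unknown>;

type StepResult =
  | { status: "done"; state: State }
  | { status: "suspended"; prompt: string };

interface Step {
  id: string;
  // Receives the accumulated state plus any human input supplied on resume.
  run: (state: State, humanInput?: string) => StepResult;
}

// Plain data, so it could be serialized and stored while waiting on a person.
interface Snapshot {
  stepIndex: number; // index of the next step to run
  state: State; // state carried across steps
}

type RunOutcome =
  | { status: "completed"; snapshot: Snapshot }
  | { status: "suspended"; prompt: string; snapshot: Snapshot };

// Runs steps from the snapshot's position until the workflow completes
// or a step suspends.
function runWorkflow(steps: Step[], snapshot: Snapshot, humanInput?: string): RunOutcome {
  let { stepIndex, state } = snapshot;
  let input = humanInput;
  while (stepIndex < steps.length) {
    const result = steps[stepIndex].run(state, input);
    input = undefined; // human input only applies to the step being resumed
    if (result.status === "suspended") {
      return { status: "suspended", prompt: result.prompt, snapshot: { stepIndex, state } };
    }
    state = result.state;
    stepIndex += 1;
  }
  return { status: "completed", snapshot: { stepIndex, state } };
}

// Example co-creation chain: agent drafts, human reviews, agent publishes.
const steps: Step[] = [
  { id: "draft", run: (s) => ({ status: "done", state: { ...s, draft: "Hello, world" } }) },
  {
    id: "review",
    run: (s, input) =>
      input === undefined
        ? { status: "suspended", prompt: `Approve or edit: ${String(s.draft)}` }
        : { status: "done", state: { ...s, draft: input } },
  },
  { id: "publish", run: (s) => ({ status: "done", state: { ...s, published: true } }) },
];

const first = runWorkflow(steps, { stepIndex: 0, state: {} });
if (first.status === "suspended") {
  console.log(first.prompt);
  // Later, once the user responds, resume from the saved snapshot.
  const resumed = runWorkflow(steps, first.snapshot, "Hello, edited world");
  console.log(resumed.status, resumed.snapshot.state);
}
```

Because the snapshot is plain data, it can be stored between the suspend and the resume, which is what lets a workflow copilot wait on a user without keeping anything running in the meantime.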

These capabilities make Mastra a strong foundation for reasoning, execution, and orchestration at scale.

But reasoning is only half the story. For agents to feel useful to end users, they need a way to collaborate in real time, inside the product itself.

The UI Layer: Where CopilotKit Comes In

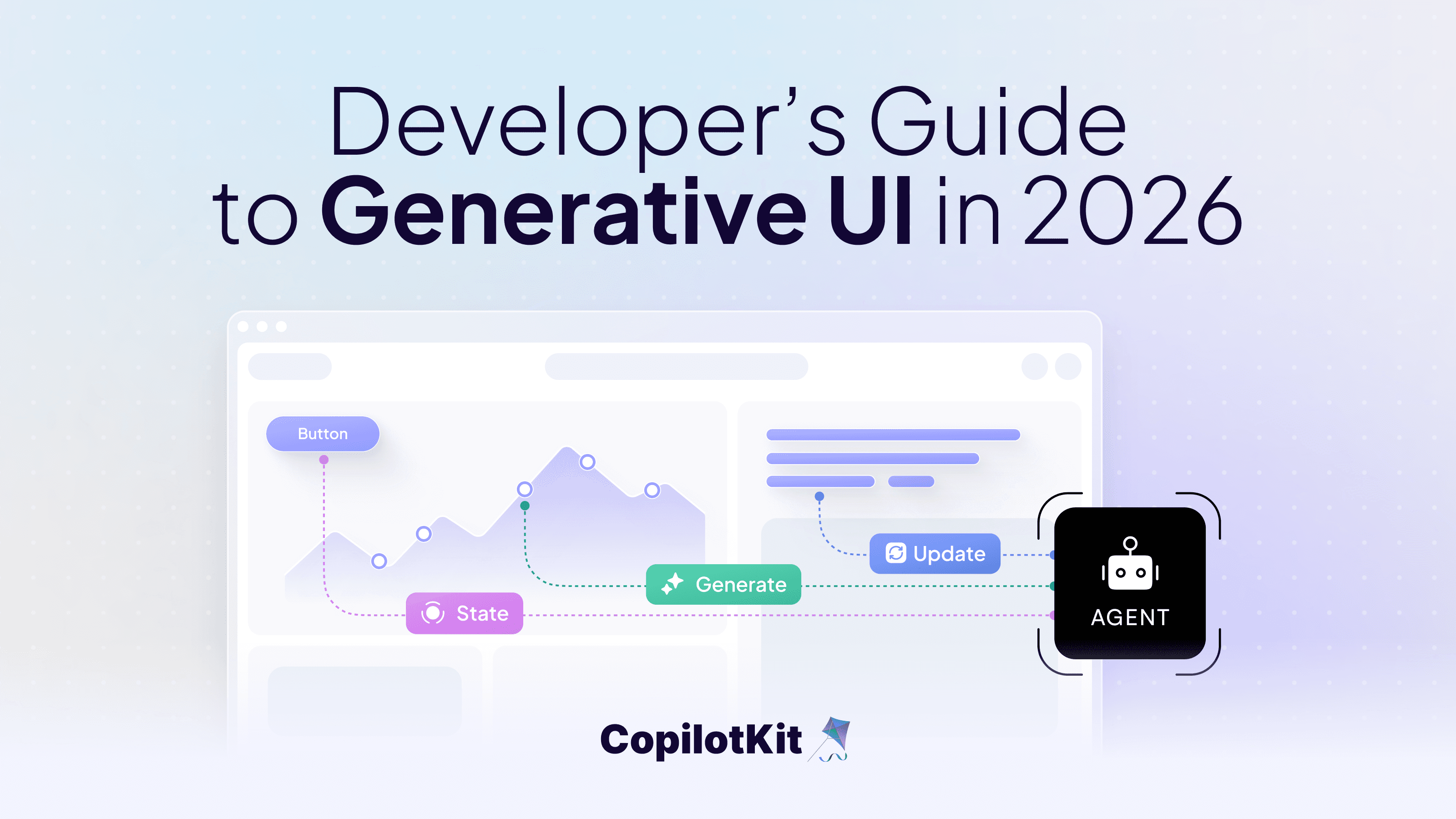

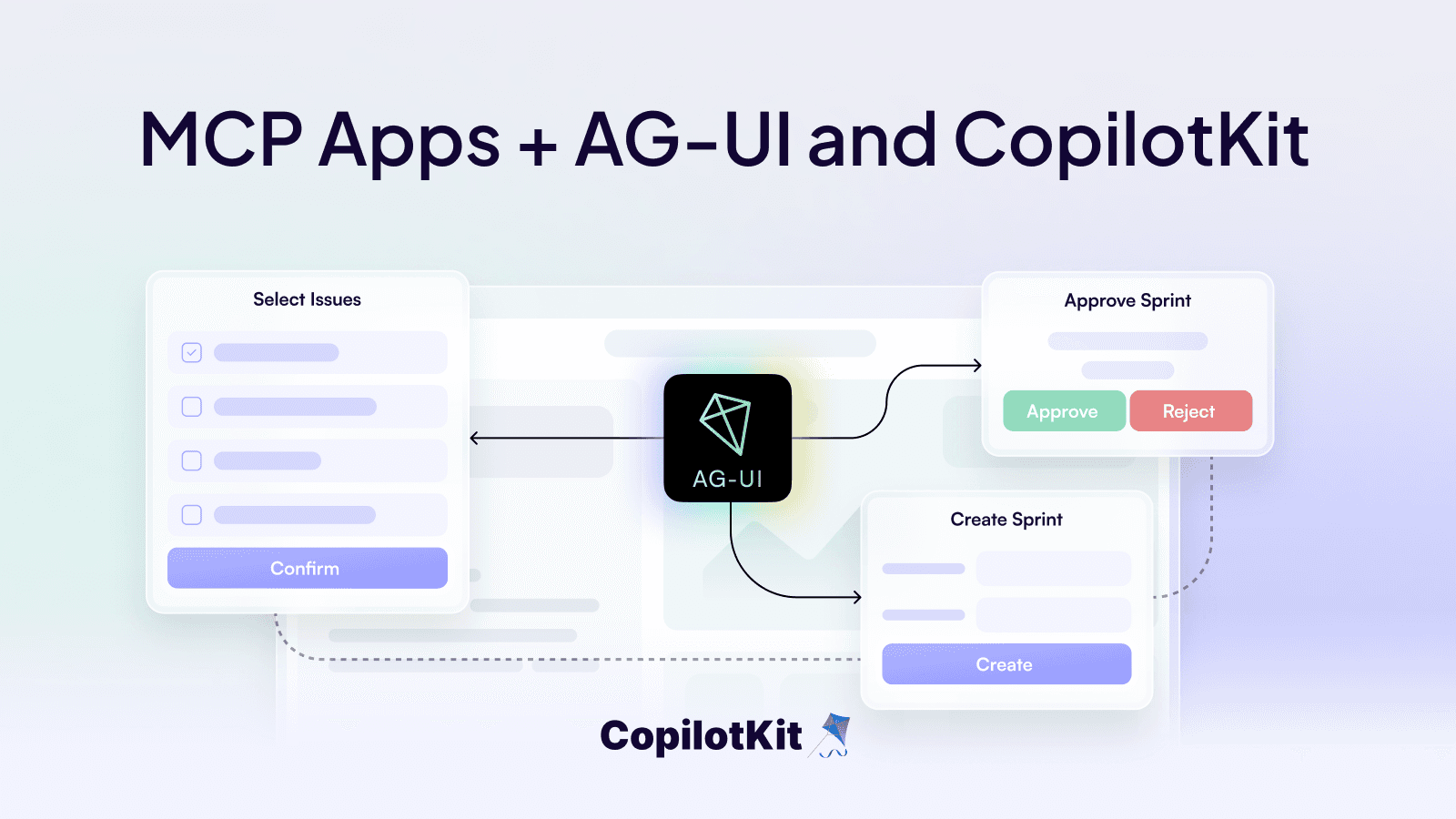

CopilotKit provides AG-UI (the Agent–User Interaction Protocol), a standard way to bring backend agents into the frontend.

Instead of showing Mastra’s outputs in a static chat bubble, CopilotKit renders them as interactive React components: editable, clickable, and collaborative. Users can guide, correct, and co-create with the agent, all inside the app.
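AG-UI works by streaming typed events from the agent to the frontend. As a rough illustration of the frontend side, the sketch below folds a stream of such events into UI state with a pure reducer. The event names follow AG-UI's event types, but the payloads here are trimmed down and are assumptions, not the official type definitions; `applyEvent` and `UiState` are hypothetical. In a real app you would typically rely on CopilotKit's React components and hooks instead of hand-writing this.

```typescript
// Simplified sketch: folding AG-UI-style events into UI state.
// Event names mirror AG-UI event types; payload shapes are simplified assumptions.

type AgentEvent =
  | { type: "RUN_STARTED"; runId: string }
  | { type: "TEXT_MESSAGE_START"; messageId: string }
  | { type: "TEXT_MESSAGE_CONTENT"; messageId: string; delta: string }
  | { type: "TEXT_MESSAGE_END"; messageId: string }
  | { type: "STATE_SNAPSHOT"; snapshot: Record<string, unknown> }
  | { type: "RUN_FINISHED"; runId: string };

interface UiState {
  running: boolean;
  messages: { id: string; text: string; complete: boolean }[];
  agentState: Record<string, unknown>; // what interactive components would render
}

const initialUiState: UiState = { running: false, messages: [], agentState: {} };

// Pure reducer: each event yields a new UiState, so a React component can
// re-render incrementally as text streams in and agent state changes.
function applyEvent(ui: UiState, event: AgentEvent): UiState {
  switch (event.type) {
    case "RUN_STARTED":
      return { ...ui, running: true };
    case "TEXT_MESSAGE_START":
      return {
        ...ui,
        messages: [...ui.messages, { id: event.messageId, text: "", complete: false }],
      };
    case "TEXT_MESSAGE_CONTENT":
      // Append the streamed chunk to the matching message.
      return {
        ...ui,
        messages: ui.messages.map((m) =>
          m.id === event.messageId ? { ...m, text: m.text + event.delta } : m,
        ),
      };
    case "TEXT_MESSAGE_END":
      return {
        ...ui,
        messages: ui.messages.map((m) => (m.id === event.messageId ? { ...m, complete: true } : m)),
      };
    case "STATE_SNAPSHOT":
      // Replace the agent state wholesale with the latest snapshot.
      return { ...ui, agentState: event.snapshot };
    case "RUN_FINISHED":
      return { ...ui, running: false };
  }
}

const events: AgentEvent[] = [
  { type: "RUN_STARTED", runId: "r1" },
  { type: "TEXT_MESSAGE_START", messageId: "m1" },
  { type: "TEXT_MESSAGE_CONTENT", messageId: "m1", delta: "Drafting " },
  { type: "TEXT_MESSAGE_CONTENT", messageId: "m1", delta: "your outline" },
  { type: "TEXT_MESSAGE_END", messageId: "m1" },
  { type: "STATE_SNAPSHOT", snapshot: { title: "Q3 plan", sections: 3 } },
  { type: "RUN_FINISHED", runId: "r1" },
];

const finalUi = events.reduce(applyEvent, initialUiState);
console.log(finalUi.messages[0].text, finalUi.agentState);
```

Keeping the reducer pure means the same event log always produces the same UI, which makes streamed updates easy to replay and test.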

Getting Started in Under 2 Minutes

The fastest way to try Mastra + CopilotKit together is with our starter template.

Just run:

This scaffolds a full example app in less than two minutes: Mastra agents on the backend, CopilotKit + AG-UI on the frontend.

You’ll have an interactive agent running locally with no extra setup.

Developers can now pair Mastra’s robust agent framework with CopilotKit’s interaction layer to ship agents that feel natural to work with.

Zero to AG-UI Canvas Template

The Canvas template shows this in action: a Mastra agent reasoning in the background while CopilotKit streams updates to the frontend, letting the user interact, adjust, and co-create continuously.
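Co-creation works when the agent and the user edit the same state. AG-UI expresses incremental state updates (`STATE_DELTA` events) as JSON Patch (RFC 6902) operations, so one way to picture the Canvas is a single state document that both sides modify with patches. The following is a minimal, hypothetical sketch of applying a subset of JSON Patch immutably; it is not the Canvas template's code, and `applyPatch` and the canvas shape are illustrative assumptions.

```typescript
// Minimal, immutable subset of JSON Patch (RFC 6902): add, replace, remove.
// Paths are JSON Pointers (RFC 6901), e.g. "/cards/0/done".
// Hypothetical sketch: no move/copy/test ops, and no conflict handling.

type Json = null | boolean | number | string | Json[] | { [key: string]: Json };

type PatchOp =
  | { op: "add"; path: string; value: Json }
  | { op: "replace"; path: string; value: Json }
  | { op: "remove"; path: string };

// Splits a JSON Pointer into unescaped reference tokens.
function parsePointer(path: string): string[] {
  if (path === "") return [];
  if (!path.startsWith("/")) throw new Error(`Invalid JSON Pointer: ${path}`);
  return path
    .slice(1)
    .split("/")
    .map((t) => t.replace(/~1/g, "/").replace(/~0/g, "~"));
}

// Copies each container along the path, then applies the op at the last token,
// so the input document is never mutated.
function applyAt(doc: Json, tokens: string[], op: PatchOp): Json {
  if (tokens.length === 0) {
    if (op.op === "remove") throw new Error("Cannot remove the document root");
    return op.value; // add/replace at the root swaps the whole document
  }
  const [head, ...rest] = tokens;
  if (Array.isArray(doc)) {
    const copy = [...doc];
    const index = head === "-" ? copy.length : Number(head);
    // "add" may target one past the end; everything else needs an existing element.
    const limit = op.op === "add" && rest.length === 0 ? copy.length : copy.length - 1;
    if (!Number.isInteger(index) || index < 0 || index > limit) {
      throw new Error(`Array index out of range: ${head}`);
    }
    if (rest.length > 0) copy[index] = applyAt(copy[index], rest, op);
    else if (op.op === "add") copy.splice(index, 0, op.value);
    else if (op.op === "replace") copy[index] = op.value;
    else copy.splice(index, 1);
    return copy;
  }
  if (doc !== null && typeof doc === "object") {
    const copy: { [key: string]: Json } = { ...doc };
    const exists = Object.prototype.hasOwnProperty.call(copy, head);
    if ((rest.length > 0 || op.op !== "add") && !exists) {
      throw new Error(`Missing key: ${head}`);
    }
    if (rest.length > 0) copy[head] = applyAt(copy[head], rest, op);
    else if (op.op === "remove") delete copy[head];
    else copy[head] = op.value;
    return copy;
  }
  throw new Error(`Cannot traverse into a primitive at: ${head}`);
}

function applyPatch(doc: Json, ops: PatchOp[]): Json {
  return ops.reduce<Json>((acc, op) => applyAt(acc, parsePointer(op.path), op), doc);
}

// Both sides edit the same canvas state with the same kind of operation.
let canvas: Json = { title: "Launch plan", cards: [{ text: "Draft announcement", done: false }] };
// An agent update, e.g. as it might arrive in a state delta:
canvas = applyPatch(canvas, [{ op: "add", path: "/cards/-", value: { text: "Schedule review", done: false } }]);
// A user edit made in the UI:
canvas = applyPatch(canvas, [{ op: "replace", path: "/cards/0/done", value: true }]);
console.log(JSON.stringify(canvas));
```

Treating agent updates and user edits as the same kind of operation keeps a single code path for state changes. A production app would also need to reconcile concurrent edits from both sides, which this sketch does not attempt.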

The Canvas template alone covers two-thirds of the most common copilot use cases, and it’s the fastest way to see how Mastra + CopilotKit fit together in practice.

Check it out on GitHub: https://go.copilotkit.ai/ag-ui-canvas-mastra

Start building your first interactive Mastra-powered agentic app today!

Want to learn more?

- Book a call and connect with our team

- In the meeting description, please tell us who you are, what you're building, and your company size.

Happy Building!