The Best AI Agent Resources You Should Know in 2025

Most AI agents used to break the moment you tried to plug them into a real app. The root problem was the lack of shared protocols.

But that’s changing fast. Over the past year, protocols like AG-UI (user interaction), A2A (agent-to-agent communication), and MCP (tool access) have reshaped the ecosystem.

So I took the chance to learn these protocols and understand how everything fits together. This post covers what I picked up about four of them: AG-UI, A2A, MCP, and ACP.

You will also find a collection of the best educational repos for learning and building AI agents.

This list will give you the foundations and tools you need.

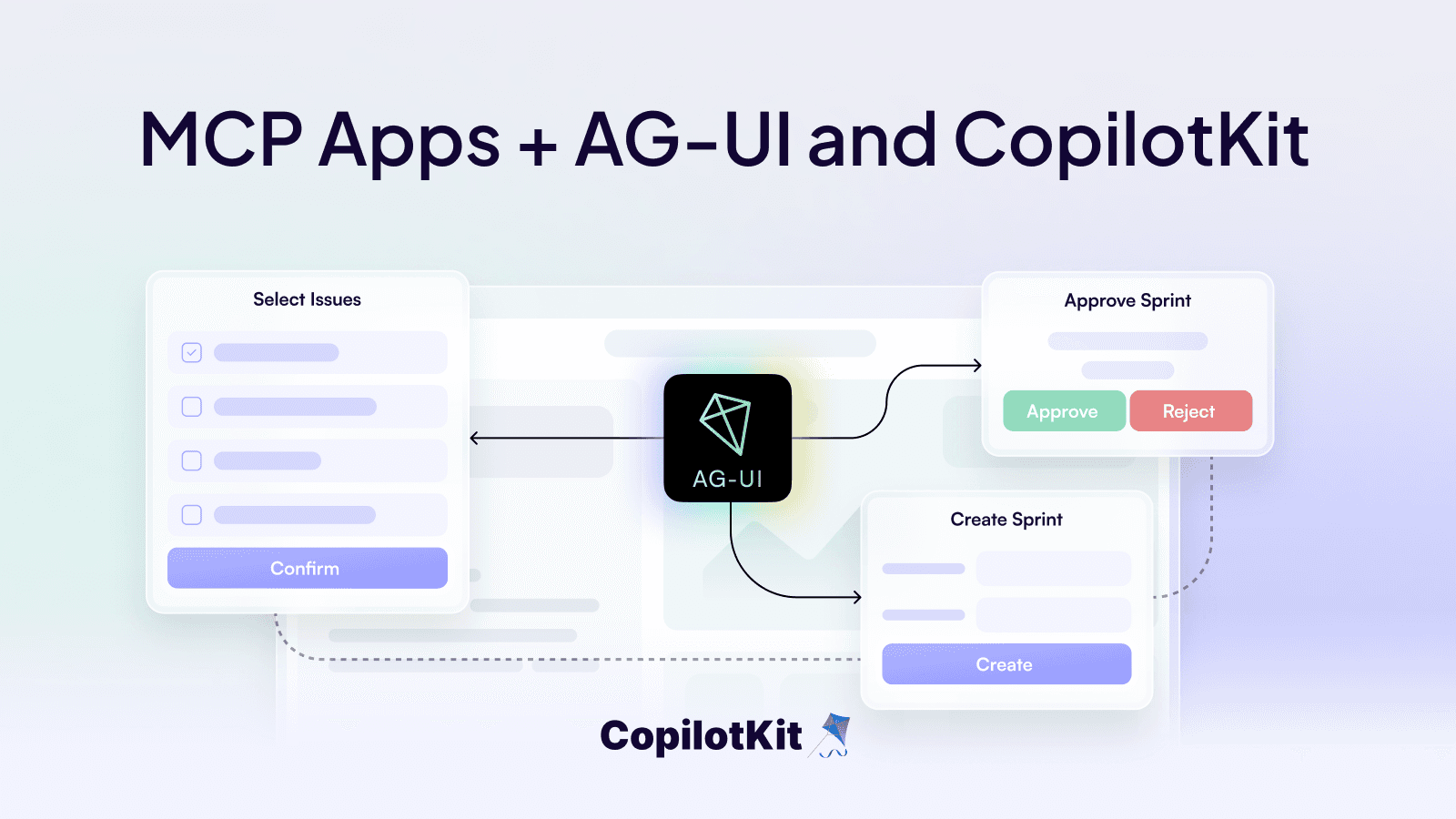

1. AG-UI - the Agent-User Interaction Protocol.

There are solid multi-step agent frameworks out there. But the moment you try to plug an agent into a real-world app, things start to break:

- Streaming responses token-by-token without custom WebSocket servers

- Showing live tool progress and pausing for human feedback without losing context

- Keeping large shared state (code, tables, data) in sync without re-sending everything to the UI

- Letting users interrupt, cancel, or reply to agents in the middle of a task (without losing state)

Most agent backends (LangGraph, CrewAI, etc.) have their own stream formats, state logic, and tool-call APIs. So you end up rewriting the frontend integration every time you switch stacks, which doesn't scale.

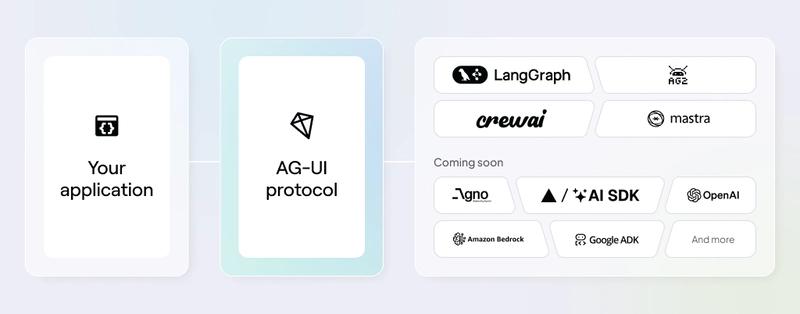

✅ Solution: AG-UI

AG-UI is an open-source protocol by CopilotKit that bridges this gap. It makes it dead simple for builders to bring compatible agents into frontend clients.

It uses Server-Sent Events (SSE) to stream structured JSON events from the agent backend to the frontend.

There are 16 event types, each with a clearly defined payload. For example:

- TEXT_MESSAGE_CONTENT for token streaming

- TOOL_CALL_START to show tool execution

- STATE_DELTA to update shared state (code, data, etc.)

- AGENT_HANDOFF to smoothly pass control between agents
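To make the event model concrete, here is a minimal Python sketch of what a frontend does with an AG-UI-style stream: it reads SSE `data:` lines, concatenates `TEXT_MESSAGE_CONTENT` deltas into one message, and applies `STATE_DELTA` patches to shared state. It uses only the standard library, not the official AG-UI SDKs. The payload field names (`delta`, `messageId`, `toolCallName`) and the use of JSON Patch operations for state deltas are assumptions to check against the AG-UI docs, and the patch helper handles top-level keys only.

```python
import json
from typing import Iterable, Iterator

def encode_sse(event: dict) -> str:
    """Serialize one event as an SSE frame: a 'data:' line plus a blank line."""
    return f"data: {json.dumps(event)}\n\n"

def parse_sse(lines: Iterable[str]) -> Iterator[dict]:
    """Yield the JSON payload of each single-line 'data:' field; skip everything else."""
    for line in lines:
        if line.startswith("data: "):
            yield json.loads(line[len("data: "):])

def apply_patch(state: dict, ops: list) -> None:
    """Apply a tiny subset of JSON Patch (add/replace/remove on top-level keys only)."""
    for op in ops:
        key = op["path"].lstrip("/")
        if op["op"] in ("add", "replace"):
            state[key] = op["value"]
        elif op["op"] == "remove":
            state.pop(key, None)

def consume(stream: str) -> tuple[str, dict]:
    """Rebuild the streamed message text and the shared state from an SSE stream."""
    text, state = [], {}
    for event in parse_sse(stream.splitlines()):
        kind = event["type"]
        if kind == "TEXT_MESSAGE_CONTENT":
            text.append(event["delta"])
        elif kind == "TOOL_CALL_START":
            print(f"[tool started: {event['toolCallName']}]")
        elif kind == "STATE_DELTA":
            apply_patch(state, event["delta"])
    return "".join(text), state

# Simulated backend output: what an agent might stream during one run.
events = [
    {"type": "TEXT_MESSAGE_CONTENT", "messageId": "m1", "delta": "Hello, "},
    {"type": "TOOL_CALL_START", "toolCallId": "t1", "toolCallName": "search"},
    {"type": "TEXT_MESSAGE_CONTENT", "messageId": "m1", "delta": "world!"},
    {"type": "STATE_DELTA", "delta": [{"op": "add", "path": "/rows", "value": 3}]},
]
stream = "".join(encode_sse(e) for e in events)
text, state = consume(stream)
print(text, state)  # Hello, world! {'rows': 3}
```

Because each event carries only a delta, the UI never needs the full state re-sent, which is the sync problem listed above.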

You can create a new AG-UI application using the following command.

npx create-ag-ui-app@latest <my agent framework>

Framework adoption is moving fast: LangGraph, CrewAI, Mastra, LlamaIndex, and Agno are already supported.

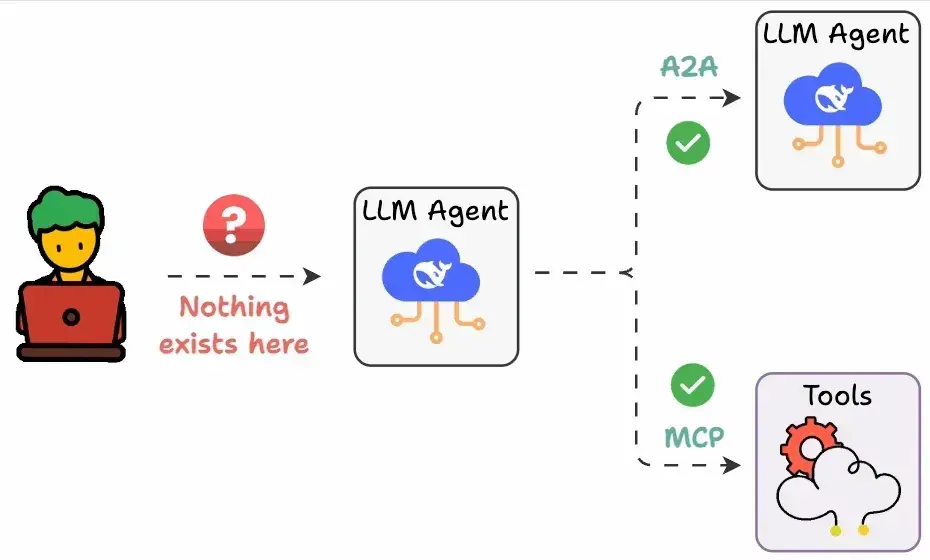

🎯 Where does AG-UI fit in the agentic protocol stack?

AG-UI is complementary to the other two major agentic protocols:

- MCP connects agents to tools

- A2A connects agents to each other

- AG-UI connects agents to the end user

AG-UI acts like the REST layer of human-agent interaction: it needs nearly zero boilerplate and is easy to integrate into any tech stack.

2. A2A - Agent ↔ Agent Interaction Protocol.

Agent systems are becoming more capable, but they don’t have a shared language.

If Agent A wants to ask Agent B to help with a task, it requires:

- Writing custom APIs

- Hardcoding endpoints

- Creating adapters for every new agent pair

So agents built on different frameworks (LangGraph, CrewAI...) end up in silos, unable to coordinate.

A2A (Agent-to-Agent) is a protocol from Google that solves this with a standard built on JSON-RPC and SSE. With A2A, agents can:

- Discover each other's capabilities

- Decide how to communicate (text, forms, media)

- Securely collaborate on long-running tasks

- Operate without exposing their internal state, memory, or tools

To get started, use the Python SDK or JS SDK.

pip install a2a-sdk

or

npm install @a2a-js/sdk

🧠 How A2A Works (Flow)

1) Agents publish a JSON Agent Card (capabilities, endpoint, auth). Others fetch it to know "who to call and how".

2) Delegating Tasks via JSON-RPC

- Client agent uses tasks/send or tasks/sendSubscribe to initiate a task

- Calls are made over HTTP with structured inputs

- Remote agents respond with task IDs and begin execution

3) Progress and intermediate artifacts stream via SSE (TaskStatusUpdate, TaskArtifactUpdate).

4) Interactive Mid-Task Input

- Remote agents can pause and emit an input-required status

- The calling agent responds with additional input via the same task ID

5) Agents exchange text, data, files (such as audio/video) via structured “Parts” and “Artifacts”.
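The flow above boils down to JSON-RPC messages keyed by a task ID. This sketch builds them with the Python standard library and hands them to an in-memory fake agent instead of a real HTTP server, so it shows message shapes, not the `a2a-sdk` API. The method name `tasks/send`, the Agent Card fields, and the message/part layout loosely follow the early A2A draft and are assumptions; newer spec versions may rename some of them.

```python
import uuid

# Hypothetical Agent Card for a remote agent (illustrative field names).
# A real client would fetch the card (commonly from /.well-known/agent.json)
# and send its JSON-RPC requests to the card's "url".
AGENT_CARD = {
    "name": "currency-agent",
    "url": "http://localhost:9999/",
    "capabilities": {"streaming": True},
    "skills": [{"id": "convert", "name": "Currency conversion"}],
}

def jsonrpc_request(method: str, params: dict) -> dict:
    """Wrap params in a JSON-RPC 2.0 request envelope."""
    return {"jsonrpc": "2.0", "id": str(uuid.uuid4()), "method": method, "params": params}

def user_message(text: str) -> dict:
    """A message made of a single text Part."""
    return {"role": "user", "parts": [{"type": "text", "text": text}]}

class FakeRemoteAgent:
    """In-memory stand-in for an A2A server (no HTTP), for illustration only."""

    def __init__(self) -> None:
        self.tasks: dict = {}  # task ID -> list of texts received so far

    def handle(self, request: dict) -> dict:
        params = request["params"]
        task_id = params["id"]
        history = self.tasks.setdefault(task_id, [])
        history.append(params["message"]["parts"][0]["text"])
        # First turn: pause and ask for more input. Second turn: finish.
        state = "input-required" if len(history) == 1 else "completed"
        return {"jsonrpc": "2.0", "id": request["id"],
                "result": {"id": task_id, "status": {"state": state}}}

remote = FakeRemoteAgent()
task_id = str(uuid.uuid4())  # the same task ID is reused for the follow-up input
r1 = remote.handle(jsonrpc_request("tasks/send", {"id": task_id, "message": user_message("Convert 100 USD")}))
print(r1["result"]["status"]["state"])  # input-required
r2 = remote.handle(jsonrpc_request("tasks/send", {"id": task_id, "message": user_message("...to EUR")}))
print(r2["result"]["status"]["state"])  # completed
```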

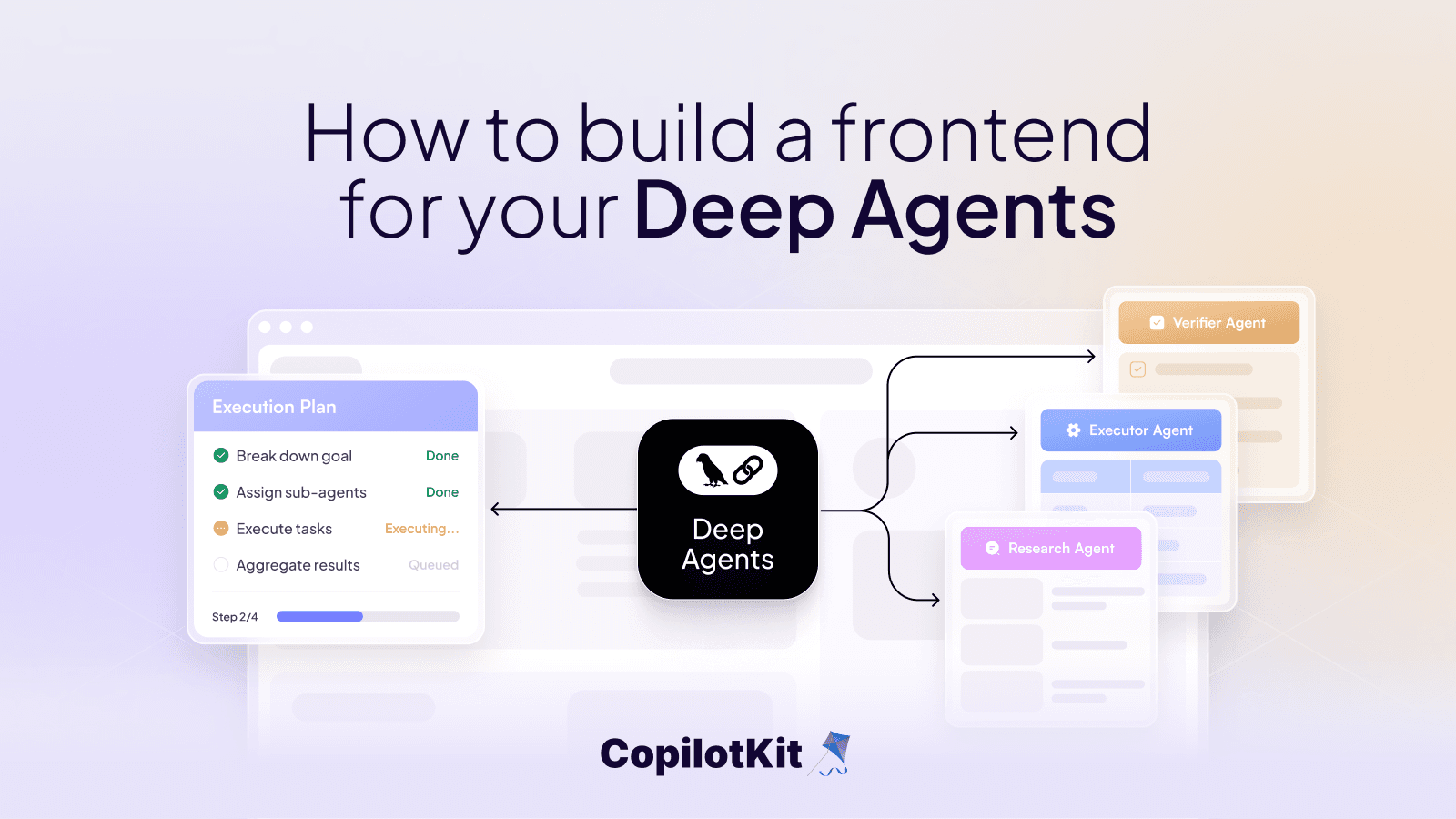

✅ Example: End-to-End Agent Collab

Here is a very simple example:

- A user gives Agent A a complex task

- Agent A breaks it into subtasks

- It finds Agents B, C, D using their Agent Cards

- It delegates work using A2A calls

- Those agents run in parallel, stream updates, and request extra input

- Agent A gathers and merges the results

And that's how agents talk to each other.
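The orchestration pattern in this example can be sketched with `asyncio`: Agent A looks up a helper for each subtask and awaits all delegations concurrently. The agent names and the `delegate` function are hypothetical placeholders for real A2A calls (which would send JSON-RPC to each agent's endpoint and read SSE updates); only the fan-out/fan-in structure is the point.

```python
import asyncio

# Hypothetical helpers, keyed by the skill each one advertises in its Agent Card.
AGENT_CARDS = {
    "research": {"name": "agent-b"},
    "drafting": {"name": "agent-c"},
    "review": {"name": "agent-d"},
}

async def delegate(agent_name: str, subtask: str) -> str:
    """Placeholder for an A2A call to a remote agent."""
    await asyncio.sleep(0.01)  # simulate network latency
    return f"{agent_name} finished '{subtask}'"

async def orchestrate(task: str) -> list[str]:
    # 1) Break the task into subtasks (hard-coded here for illustration).
    subtasks = {"research": f"research {task}", "drafting": f"draft {task}", "review": f"review {task}"}
    # 2) Find an agent for each subtask via its card, 3) delegate concurrently.
    jobs = [delegate(AGENT_CARDS[skill]["name"], sub) for skill, sub in subtasks.items()]
    results = await asyncio.gather(*jobs)
    # 4) Merge the results (here: simply collect them in order).
    return list(results)

results = asyncio.run(orchestrate("a market report"))
print(results)
```

`asyncio.gather` returns results in the order the jobs were passed, so merging stays deterministic even though the subtasks can finish in any order.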

A2A is still new, but it's a good step toward standardizing agent-to-agent collaboration, much as MCP does for agent-to-tool interaction.

MCP extends what a single agent can do. A2A expands how agents collaborate, regardless of the backend or framework they run on.

GIF credit: dailydoseofds.com

If you are interested in reading more, check out MCP vs A2A by Auth0.

3. MCP - Model Context Protocol.

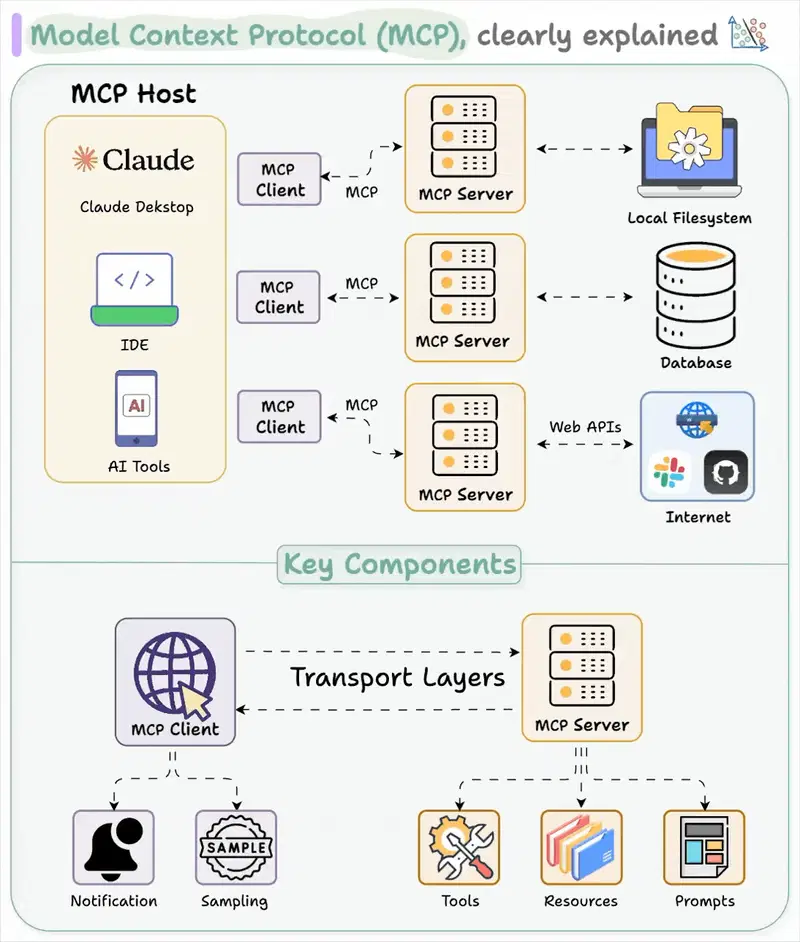

MCP (Model Context Protocol) is Anthropic's attempt at standardizing how applications provide context and tools to LLMs.

Like USB-C for hardware, MCP is a universal interface for AI models to “plug in” to data sources and tools.

Instead of writing custom wrappers for every service (GitHub, Slack, files, DBs), you expose tools through MCP so that any MCP client can:

- List available tools (tools/list)

- Call a tool (tools/call)

- Get back structured, typed results

This mimics function-calling APIs but works across platforms and services.
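Here is a rough Python sketch of those two methods as JSON-RPC messages, handled by a toy in-process dispatcher rather than the official MCP SDK. The `tools/list` and `tools/call` names come from the text above; the result fields (`tools`, `inputSchema`, `content`, `isError`) follow the published MCP spec, but treat them as assumptions and verify them against the spec version you target. The `add` tool is hypothetical.

```python
import json

# Toy tool registry: name -> (description, JSON Schema for the inputs, implementation).
TOOLS = {
    "add": (
        "Add two integers",
        {"type": "object",
         "properties": {"a": {"type": "integer"}, "b": {"type": "integer"}},
         "required": ["a", "b"]},
        lambda args: args["a"] + args["b"],
    ),
}

def handle(request: dict) -> dict:
    """Dispatch an MCP-style JSON-RPC request to tools/list or tools/call."""
    method, params = request["method"], request.get("params", {})
    if method == "tools/list":
        result = {"tools": [
            {"name": name, "description": desc, "inputSchema": schema}
            for name, (desc, schema, _) in TOOLS.items()
        ]}
    elif method == "tools/call":
        _, _, fn = TOOLS[params["name"]]
        value = fn(params["arguments"])
        # Results come back as typed content blocks rather than raw values.
        result = {"content": [{"type": "text", "text": str(value)}], "isError": False}
    else:
        # Standard JSON-RPC error code for an unknown method.
        return {"jsonrpc": "2.0", "id": request["id"],
                "error": {"code": -32601, "message": "Method not found"}}
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}

listing = handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
call = handle({"jsonrpc": "2.0", "id": 2, "method": "tools/call",
               "params": {"name": "add", "arguments": {"a": 2, "b": 3}}})
print(json.dumps(call["result"]))
# {"content": [{"type": "text", "text": "5"}], "isError": false}
```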

The spec was fairly minimal before (using JSON-RPC over stdio or HTTP). Authentication wasn’t clearly defined, which is why many implementations skipped it altogether.

Now that MCP adoption is growing, Anthropic has made major improvements (especially in security) with the latest spec update (MCP v2025-06-18).

Credit: dailydoseofds.com

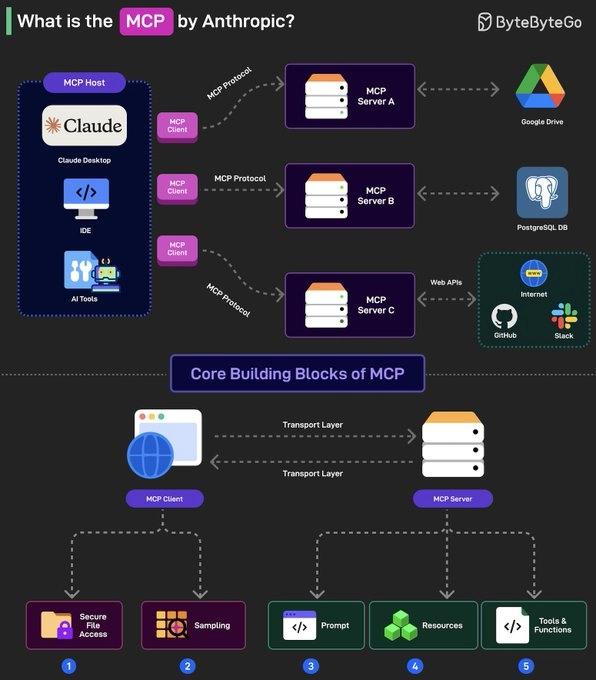

At its core, MCP follows a client-server architecture where a host application can connect to multiple servers. Here's how the components fit together:

- MCP Hosts: apps like Claude Desktop, Cursor, Windsurf, or AI tools that want to access data via MCP.

- MCP Clients: protocol clients that maintain 1:1 connections with MCP servers, acting as the communication bridge.

- MCP Servers: lightweight programs that each expose specific capabilities (like reading files or querying databases) through the standardized Model Context Protocol.

- Local Data Sources: files, databases, and services on your computer that MCP servers can securely access. For instance, a browser automation MCP server needs access to your browser to work.

- Remote Services: external APIs and cloud-based systems that MCP servers can connect to.

Credit: ByteByteGo
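The host/client/server split can be modeled in a few lines. In this illustrative sketch (plain Python objects, no real MCP transport), the host owns one client per server, each client talks to exactly one server, and the host merges every server's tools into one catalog. The class names and tools are hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class Server:
    """Toy MCP server exposing named tools (stands in for a separate process)."""
    name: str
    tools: dict  # tool name -> callable

@dataclass
class Client:
    """One client per server: the 1:1 connection described above."""
    server: Server

    def list_tools(self) -> list[str]:
        return list(self.server.tools)

    def call(self, tool: str, **kwargs):
        return self.server.tools[tool](**kwargs)

@dataclass
class Host:
    """The host app (an IDE or chat app) owns one client per connected server."""
    clients: list[Client] = field(default_factory=list)

    def connect(self, server: Server) -> None:
        self.clients.append(Client(server))

    def all_tools(self) -> dict[str, Client]:
        # Map each tool name to the client that can reach it.
        return {tool: c for c in self.clients for tool in c.list_tools()}

    def call(self, tool: str, **kwargs):
        return self.all_tools()[tool].call(tool, **kwargs)

host = Host()
host.connect(Server("files", {"read_file": lambda path: f"<contents of {path}>"}))
host.connect(Server("db", {"query": lambda sql: [("row",)]}))
print(host.call("read_file", path="notes.txt"))  # <contents of notes.txt>
```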

If you are interested in reading more, here are a couple of good reads:

- The guide to MCP I never had on Medium

- What is the Model Context Protocol (MCP)? by the Builder.io team

4. ACP - REST/stream-based Agent Communication Protocol.

ACP (Agent Communication Protocol) builds on many of A2A's ideas but takes them a step further.

It's an open protocol for communication between AI agents, applications, and humans. It works over a standardized RESTful API and supports:

- Multimodal interactions

- Streaming responses

- Stateful or stateless patterns

- Online and offline agent discovery

- Async-first design for long-running tasks (but works with sync calls too)

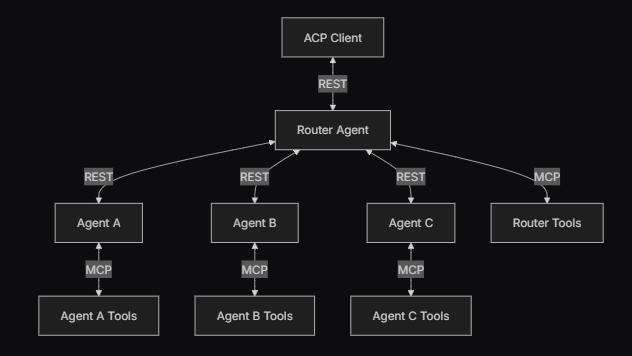

There are two main components:

a) ACP client: used by agents, apps, or services to make requests via the ACP protocol

b) ACP server: hosts one or more agents, receives requests and returns results using ACP. This is how agents are exposed via REST.

Architecture flow of advanced multi-agent orchestration

The protocol is simple enough to use with standard HTTP tools like curl, Postman, or browser requests. If you prefer to integrate ACP programmatically, official Python and TypeScript SDKs are available.
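Since ACP is plain REST, a request can be built with nothing but Python's `urllib`. The sketch below constructs, but does not send, a run request to a local server. The base URL, the `/runs` path, and the body fields (`agent_name`, `input`, `parts`, `content_type`, `mode`) reflect my reading of the ACP docs and should be treated as assumptions; the `echo` agent is hypothetical.

```python
import json
import urllib.request

ACP_BASE_URL = "http://localhost:8000"  # assumed address of a local ACP server

def build_run_request(agent_name: str, text: str, mode: str = "sync") -> urllib.request.Request:
    """Build (but do not send) a POST /runs request for an ACP server.

    The path and body fields are assumptions; check them against the
    ACP spec version your server implements."""
    body = {
        "agent_name": agent_name,
        "input": [{"role": "user",
                   "parts": [{"content": text, "content_type": "text/plain"}]}],
        "mode": mode,
    }
    return urllib.request.Request(
        f"{ACP_BASE_URL}/runs",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

req = build_run_request("echo", "Hello, agent!")
print(req.get_method(), req.full_url)  # POST http://localhost:8000/runs

# Against a running server you would send it like this:
# with urllib.request.urlopen(req) as resp:
#     print(json.load(resp))
```

This is the same call you could make with curl or Postman, which is the point of a REST-first design.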

You can use this command to add the ACP SDK.

uv add acp-sdk

🎯 How is ACP different from A2A?

While both support task delegation, streaming, multimodal content, and agent discovery:

- A2A focuses on agent-to-agent collaboration

- ACP extends that to include human and app interaction

- ACP uses REST + OpenAPI + multipart formats

- A2A uses JSON-RPC + SSE

Discovery is done via an Agent Manifest, similar to A2A’s Agent Card.

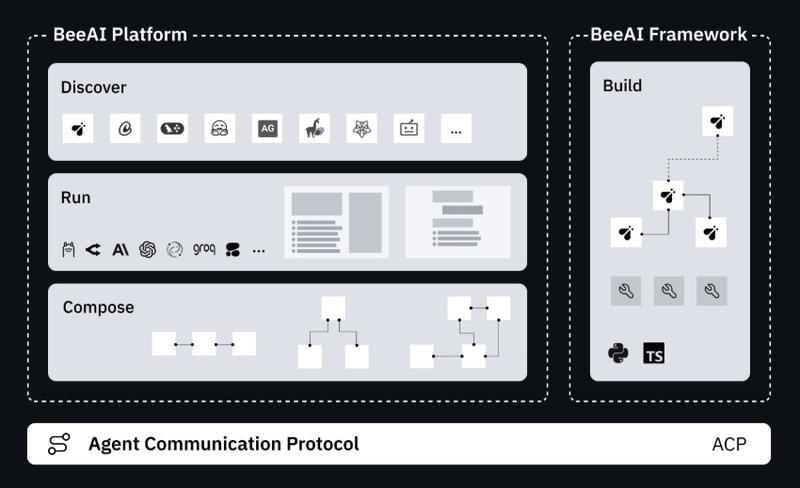

ACP is developed as a Linux Foundation standard, with the BeeAI Platform as a reference implementation.

The protocol stays agnostic to internal implementations, so it serves the broader ecosystem rather than any single vendor.

If you are getting started, check out the DeepLearning.AI short course and the quickstart guide.

Also, check out example agents showing how to use ACP across popular AI frameworks.

5. MCP for Beginners - concepts and fundamentals with practical examples by Microsoft.

This open source curriculum by Microsoft provides a structured learning path with real-world use cases.

It includes practical coding examples across popular languages like C#, Java, JavaScript, TypeScript, and Python.

By following this, you can easily grasp the MCP ecosystem and understand how to build or extend MCP-compliant systems from scratch.

There are some great blogs out there, but they cannot cover all the details.

Here’s the full list of topics covered in the curriculum.

| Module | Topic | What you will learn |
|---|---|---|
| 00 | MCP Introduction | Why MCP matters, real-world use cases |
| 01 | Core Concepts | Client-server architecture, messaging patterns |
| 02 | Security | Threats, best practices, OAuth2 examples |
| 03 | Getting Started | Env setup, first server & client in multiple languages |
| 3.1–3.3 | Hands-on | Build server, build client, integrate LLM client |
| 3.4–3.6 | Dev Tools | Hook into VS Code, use SSE and HTTP streaming |
| 3.7–3.9 | Tooling & Deployment | Use AI Toolkit, test, and deploy MCP servers |
| 04 | Practical Implementation | Real multilingual SDK examples & debugging |
| 05 | Advanced Topics | Multimodal workflows, scaling, routing, security, web search, OAuth2, root contexts |
| 5.x | Deep Dives | Azure integration, multimodal, OAuth2 demo, sampling, routing & scaling strategies |
| 06 | Community Contribution | How to report issues, contribute code/docs |
| 07 | Lessons from Early Adopters | Real-world case studies and deployment insights |
| 08 | Best Practices | Resilience, performance, test & fault tolerance |
| 09 | Case Studies | In-depth architecture breakdowns and examples |
| 10 | Hands-On Lab | End-to-end MCP server with AI Toolkit in VS Code |


6. 12-Factor Agents - Principles for building reliable LLM applications.

Even as LLMs become more powerful, building reliable AI software still depends on how you engineer around them.

This repo by Dex Horthy distills lessons from building production-grade agents into 12 core principles.

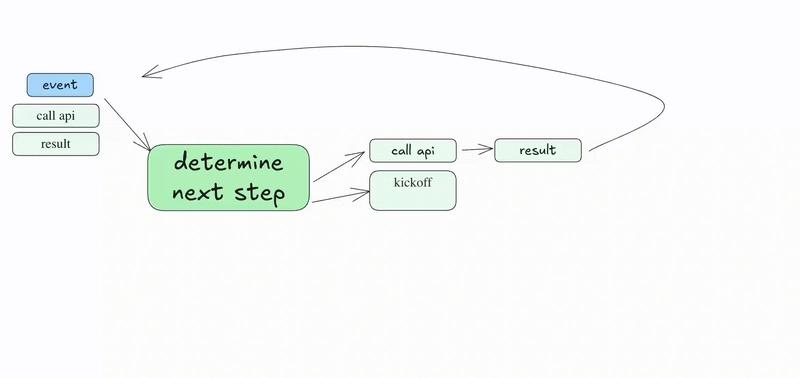

At the heart of every LLM agent is a loop:

- LLM decides the next step using structured tool calls

- Code executes the tool call

- The result is appended to the context window

- Repeat until the next step is determined to be "done"
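The loop above fits in a few lines of Python. In this sketch, `fake_llm` is a hypothetical stand-in for a real model call that returns its next step as a structured tool call, and `get_weather` is a made-up tool; the numbered comments map to the four bullets. The `max_steps` guard is an addition of this sketch, not part of the described loop.

```python
def fake_llm(context: list[dict]) -> dict:
    """Hypothetical model: picks the next step as a structured tool call."""
    tool_results = [m for m in context if m["role"] == "tool"]
    if not tool_results:
        return {"tool": "get_weather", "args": {"city": "Paris"}}
    return {"tool": "done", "args": {"answer": f"Weather: {tool_results[-1]['content']}"}}

# Made-up tool implementations, executed by ordinary deterministic code.
TOOLS = {"get_weather": lambda city: f"18C and sunny in {city}"}

def run_agent(user_input: str, max_steps: int = 10) -> str:
    context = [{"role": "user", "content": user_input}]
    for _ in range(max_steps):
        step = fake_llm(context)                             # 1) LLM picks the next step
        if step["tool"] == "done":                           # 4) stop once it says "done"
            return step["args"]["answer"]
        result = TOOLS[step["tool"]](**step["args"])         # 2) code executes the tool call
        context.append({"role": "tool", "content": result})  # 3) append result to context
    raise RuntimeError("agent did not finish within max_steps")

print(run_agent("What's the weather in Paris?"))  # Weather: 18C and sunny in Paris
```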

In practice, though, this naive loop rarely works as reliably as we want it to.

Each factor comes with visual examples, detailed explanations, and code snippets.

Here’s a quick overview of all 12 factors (with links to learn more):

1) Natural Language to Tool Calls: convert user input into structured actions (JSON or function calls) so deterministic code handles execution reliably.

2) Own your prompts: don't outsource your prompt engineering to a framework. Treat prompts like code (version-controlled, tested, easy to update).

3) Own your context window: only send the important stuff to the model. Cut out anything that isn’t needed to stay fast and avoid hitting limits.

4) Tools are just structured outputs: when your agent uses a tool, have it respond with clean, easy-to-read JSON that your code can understand.

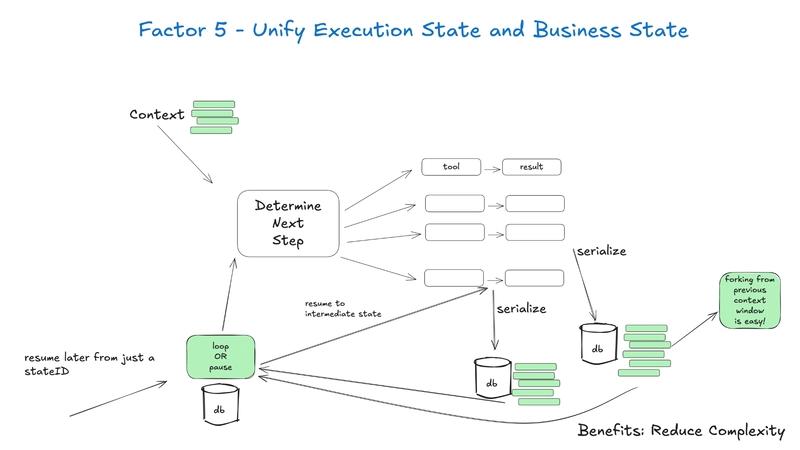

5) Unify execution state and business state: keep the agent’s step-tracking (what it’s doing) and business data (what it’s working on) together in one place. This unified view lets you replay, debug, and monitor the agent’s behavior easily.

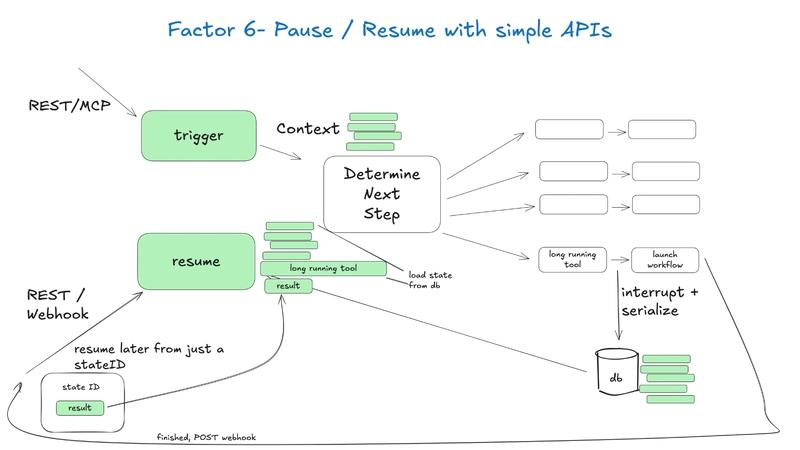

6) Launch/Pause/Resume with simple APIs: agents should be controllable with straightforward APIs for starting, pausing and resuming workflows.

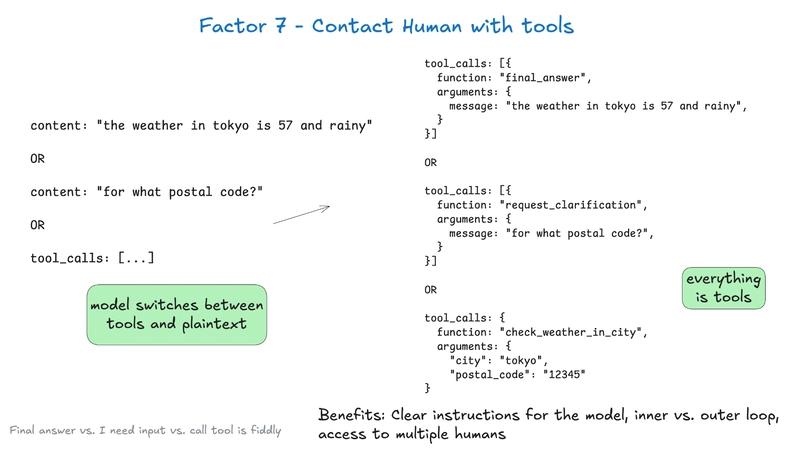

7) Contact humans with tool calls: whenever an agent needs help or input, have it reach humans through the same tool-call mechanism it uses for automated tasks (for example, notifications or approval requests).

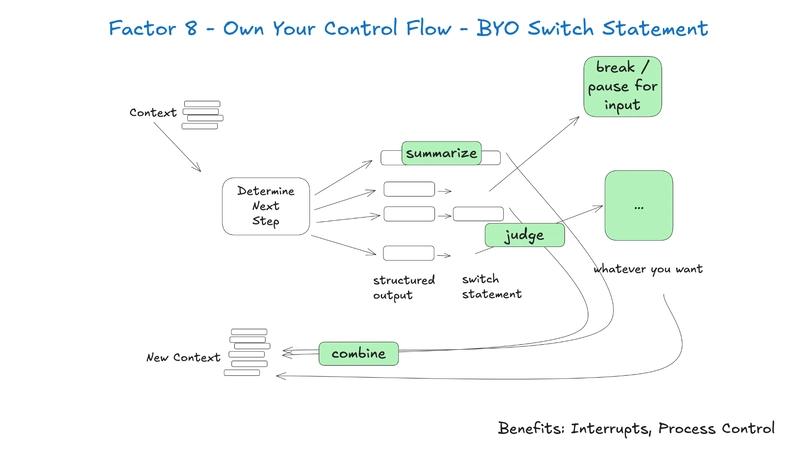

8) Own your control flow: don’t let a tool hide what the agent is doing. Keep the steps clear so you can inspect or change them.

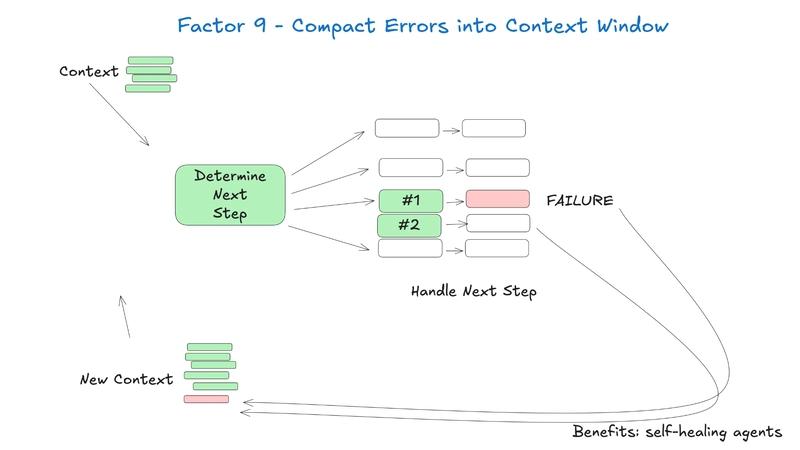

9) Compact Errors into Context Window: summarize errors and include them in the agent’s context window so the model can learn and recover from mistakes. Don’t send full logs, just what helps it fix the issue.

10) Small, Focused Agents: Design agents to do one thing well, following the principle of composability. Small, focused agents are easier to build and test.

11) Trigger from anywhere, meet users where they are: Allow agents to be triggered by events from any source (APIs, cron jobs, user actions) so they fit into different workflows.

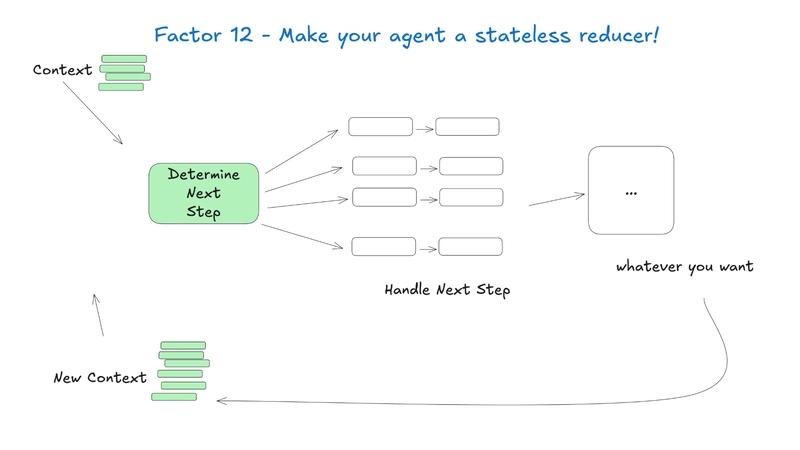

12) Make your agent a stateless reducer: Design agents to process input and return output without keeping internal state between runs. Stateless agents are easier to scale, test, and recover from errors.
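Several of these factors combine naturally. This hedged Python sketch treats the agent as a pure reducer over an event log (factor 12), keeps execution and business state in one object (factor 5), models pausing for a human as a plain state transition (factors 6 and 7), and compacts errors to one line before they reach the context (factor 9). The event types and the three-error limit are illustrative choices, not taken from the 12-Factor repo.

```python
from dataclasses import dataclass, replace
from functools import reduce

@dataclass(frozen=True)
class AgentState:
    """Execution state and business state kept in one object (factor 5)."""
    events: tuple = ()          # full event history, also used to build the context window
    consecutive_errors: int = 0
    status: str = "running"     # running | paused_for_human | done | failed

MAX_CONSECUTIVE_ERRORS = 3  # arbitrary threshold chosen for this sketch

def step(state: AgentState, event: dict) -> AgentState:
    """Pure reducer (factor 12): returns a new state and never mutates the old one."""
    kind = event["type"]
    if kind == "tool_error":
        # Factor 9: keep only the last line of the error, not the whole trace.
        summary = {"type": "error", "msg": event["msg"].splitlines()[-1]}
        errors = state.consecutive_errors + 1
        status = "failed" if errors >= MAX_CONSECUTIVE_ERRORS else state.status
        return replace(state, events=state.events + (summary,),
                       consecutive_errors=errors, status=status)
    if kind == "human_input_requested":
        # Factors 6 and 7: asking a human is just another state transition.
        return replace(state, events=state.events + (event,), status="paused_for_human")
    if kind == "done":
        return replace(state, events=state.events + (event,), status="done")
    # Any other event (e.g. a successful tool call) resets the error counter.
    return replace(state, events=state.events + (event,),
                   consecutive_errors=0, status="running")

history = [
    {"type": "tool_call", "name": "fetch_invoice"},
    {"type": "tool_error", "msg": "Traceback (most recent call last):\nTimeoutError: upstream took too long"},
    {"type": "tool_call", "name": "fetch_invoice"},
    {"type": "done"},
]
final = reduce(step, history, AgentState())  # replaying the log always rebuilds the same state
print(final.status, final.events[1])
```

Because `step` has no hidden memory, pausing, resuming, or debugging a run only requires storing the event list and replaying it.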

This is one of the most valuable repos out there. It covers the history of agents (what led us here), what happens as LLMs get smarter, and much more, all with visual examples.

7. Agents Towards Production - code-first tutorials for building real-world GenAI agents.

If you are curious about how to take your GenAI agent idea from concept to production, this repo is your step-by-step guide. It’s packed with practical, code-driven tutorials designed to help you build production-grade agents.

Each tutorial is self-contained in its own folder, complete with runnable notebooks and code samples, so you can move from concept to working agent in minutes.

It covers the entire GenAI agent lifecycle, from prototyping to deployment, with reusable patterns and real-world blueprints.

Some tutorial highlights:

- GPU Deployment: Deploy agents at scale using Runpod for high-performance inference

- Observability: Add tracing, logs and debugging with LangSmith and Qualifire, so you can monitor agents in real time.

- Multi-Agent Coordination: build agent workflows and message exchange using the A2A protocol

- Security & Guardrails: Learn to prevent prompt injection and toxic outputs using Qualifire, LlamaFirewall, and example attacks with Apex.

- Memory Systems: Use Redis to set up a hybrid short-term and long-term memory backed by semantic search.

- Web Search Integration: Build agents that fetch and process live web data using the Tavily API.

There are also tutorials for fine-tuning, frontends, packaging, and more, all using popular frameworks with best practices.

8. GenAI Agents - GenAI agent implementations, from simple to advanced.

This community repository is an awesome resource for learning, building, and sharing GenAI agents, ranging from simple conversational bots to complex, multi-agent systems.

Every concept is taught through runnable notebooks, with 40+ projects added via hackathons. It’s practical and super useful.

You will find examples using LangGraph, CrewAI, OpenAI Swarm, LangChain, PydanticAI, and more.

Tutorial categories

A list of categories and what's covered:

- Beginner-friendly:

  - Simple Conversational Agent (context/history)

  - QA Agent (asking/answering with LLMs)

  - Data Analysis Agent (NLP + dataset querying)

You can check the extensive list of implementations in the readme.

9. Awesome LLM Apps - Collection of awesome LLM apps with AI Agents and RAG.

This repo is a collection of awesome LLM apps built with RAG, AI agents, multi-agent teams, MCP, autonomous game-playing agents, voice agents, and more.

The apps use models from OpenAI, Anthropic, and Google, as well as open-source models like DeepSeek, Qwen, or Llama that you can run locally on your computer.

Some standout projects:

- AI Meme Generator Agent (Browser)

- AI System Architect Agent

- AI Social Media News and Podcast Agent

- AI Competitor Intelligence Agent Team

- Voice RAG Agent (OpenAI SDK)

- AI Blog Search (RAG)

- Local ChatGPT Clone with Memory

- Chat with YouTube Videos

- Chat with Gmail

- AI Travel Planner MCP Agent

If you're building with LLMs, this repo is like an idea vault.

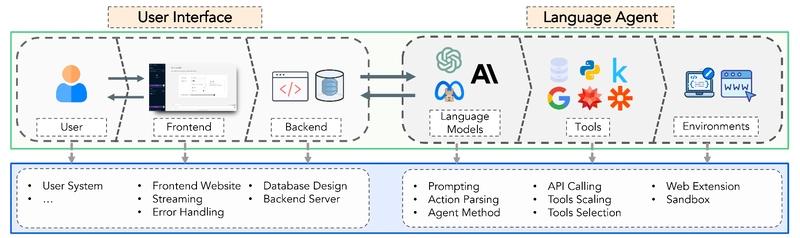

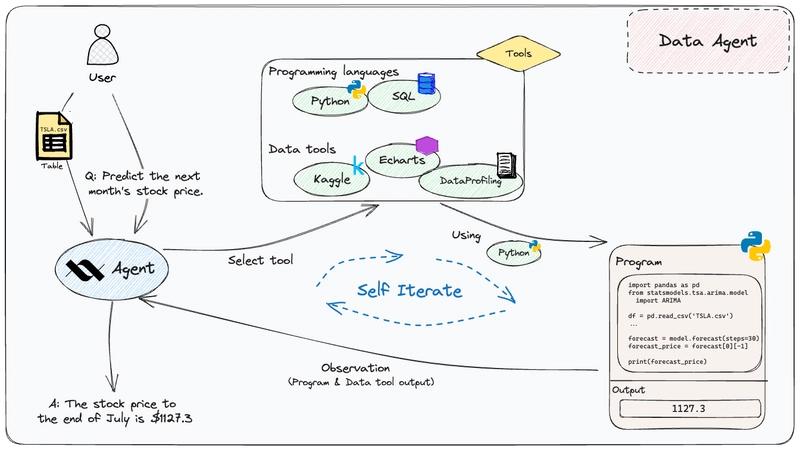

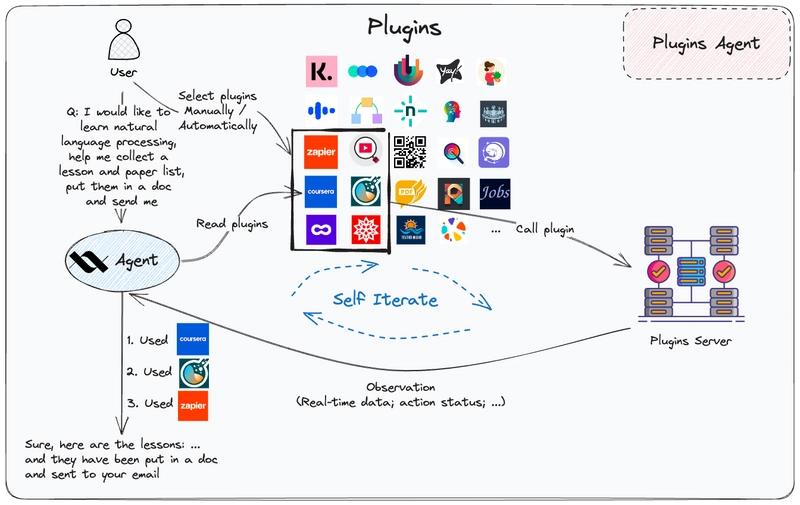

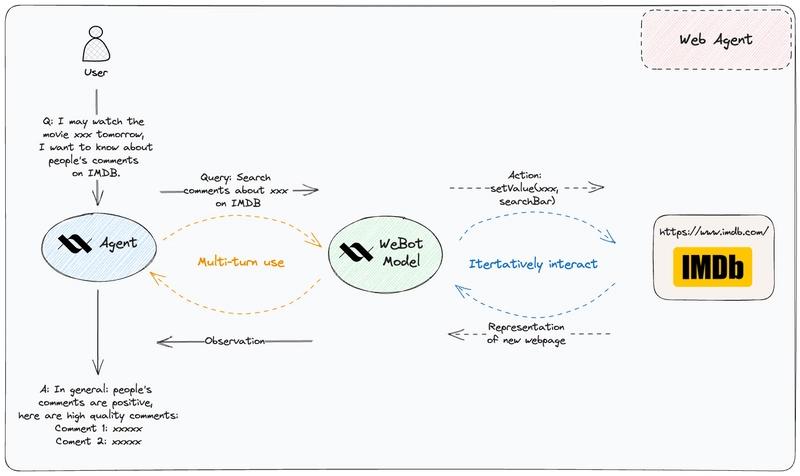

10. OpenAgents - Open Platform for Language Agents in the Wild.

OpenAgents (by xlang-ai) is an open-source platform that makes it easy for everyday users and developers to interact with and build real-world AI agents via a simple web interface.

Here’s a quick look at how it’s designed:

It currently features three powerful agents:

1) Data Agent: lets you run Python or SQL queries, clean data, and create visualizations directly via chat.

2) Plugins Agent: connects to 200+ everyday plugins (like weather, shopping, or Wolfram Alpha) and can smartly pick the right one for the task.

3) Web Agent: uses a Chrome extension to browse sites on your behalf (like filling forms, posting to Twitter, getting directions).

You can check official docs for demo videos and full walkthroughs.

In short, OpenAgents is like ChatGPT Plus, but open source and customizable. It handles data analysis, tool use, and autonomous browsing, and is built to be easily extended or self-hosted.

11. System Prompts - massive collection of real system prompts, tools & AI configs.

This repo is a massive library of system prompts and configuration files from 15+ real AI products like v0, Cursor, Manus, Same.dev, Lovable, Devin, Replit Agent, Windsurf Agent, VSCode Agent, Dia Browser, Trae AI, Cluely, Xcode & Spawn, and even open-source agents like Codex CLI, Bolt, and RooCode.

With over 7,500 lines of content, it’s one of the largest and most practical references for anyone interested in how modern AI systems are "instructed" to behave, reason, and interact.

This is incredibly useful if you are:

- Building LLM agents or copilots

- Creating better system/instruction prompts

- Reverse-engineering the logic behind agent behavior

There's also another solid collection (~3k⭐️) you might find useful.

12. 500 AI Agents Projects - collection of AI agent use cases across industries.

If you are curious about real-world AI agent applications, this repo highlights 500+ use cases across industries like healthcare, finance, education, retail, logistics, gaming, and more.

Each use case links to an open source project, making it a hands-on guide rather than just a list of ideas.

Many examples are built using frameworks like CrewAI, AutoGen, LangGraph and Agno. For instance, CrewAI flows include:

- Email Auto Responder

- Meeting Assistant

- Write a Book or Screenplay Writer

- Marketing Strategy Generator

- Landing Page Generator

You will also find unique agent architectures like NVIDIA-integrated LangGraph agents.

It's a great repo to browse and get inspired. It has 1.8k stars on GitHub.

Protocols might sound boring. But they are the reason agents will actually work in the real world.

Frameworks will change. Protocols won’t. So take the time to learn these fundamentals now.

If you have any questions, feedback or end up building something cool, please share in the comments.

Have a great day! Until next time :)

Follow CopilotKit & AG-UI on Twitter and say hi!

If you'd like to build something cool, join the AG-UI Discord.