Building Fullstack Agentic Apps with Realtime Data: CopilotKit + Tavily

Tavily: Real-Time Web Access Layer for Agents

Today, we are announcing the CopilotKit+Tavily partnership. Let's dive into why this makes so much sense.

Tavily powers the Internet of agents with fast, secure, and reliable web access APIs, reducing hallucinations and making agents more powerful.

Tavily gives your assistant the ability to:

- Search the live web: Fetch current, relevant information, e.g., news, regulations, product updates

- Crawl and extract content: Go deeper than snippets and pull structured data from full pages

- Provide sources and citations: Every answer comes with provenance, so users can verify

- Keep data private: Zero data retention, optional PII redaction, and prompt-injection guardrails

You get all this through a single API with SDKs for Python and JavaScript, plus integrations with agent frameworks such as LangChain and LlamaIndex. No need to reinvent search or scraping infrastructure.
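To make the "search plus citations" idea concrete, here is a minimal sketch of wrapping a search call so every result carries a numbered source. It assumes a client whose `search(query, max_results=...)` method returns a dict with a `results` list of `title`, `url`, and `content` fields, which is the shape we understand the Tavily Python SDK to return. The `search_with_citations` helper and the `FakeClient` stand-in are hypothetical names used only for illustration, so the example runs without an API key.

```python
from typing import Any, Protocol


class SearchClient(Protocol):
    """Anything exposing a Tavily-style search method (assumed shape)."""

    def search(self, query: str, **kwargs: Any) -> dict[str, Any]: ...


def search_with_citations(
    client: SearchClient, query: str, max_results: int = 3
) -> list[dict[str, str]]:
    """Run a web search and keep only the fields needed to cite each source."""
    response = client.search(query, max_results=max_results)
    cited = []
    for index, item in enumerate(response.get("results", []), start=1):
        cited.append(
            {
                "citation": f"[{index}]",                 # marker to show next to the answer
                "title": item.get("title", ""),
                "url": item.get("url", ""),               # lets the user verify the claim
                "snippet": item.get("content", "")[:200],  # short preview for the UI
            }
        )
    return cited


class FakeClient:
    """Offline stand-in with placeholder data; not part of any SDK."""

    def search(self, query: str, **kwargs: Any) -> dict[str, Any]:
        results = [
            {"title": "Example article A", "url": "https://example.com/a", "content": "Body A"},
            {"title": "Example article B", "url": "https://example.com/b", "content": "Body B"},
        ]
        return {"results": results[: kwargs.get("max_results", 5)]}


sources = search_with_citations(FakeClient(), "today's top tech news", max_results=2)
for source in sources:
    print(source["citation"], source["title"], source["url"])
```

In a real app you would pass an actual SDK client in place of `FakeClient`, for example a `TavilyClient` from the `tavily-python` package constructed with your API key. Check the Tavily documentation for the exact parameters and response fields before relying on this shape.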

CopilotKit: Making Agents Interactive

CopilotKit solves the other half of the equation: bringing the empowered agent to the user.

Why? Because agents aren’t just backend workers anymore. To feel useful, they need to be part of the UI itself.

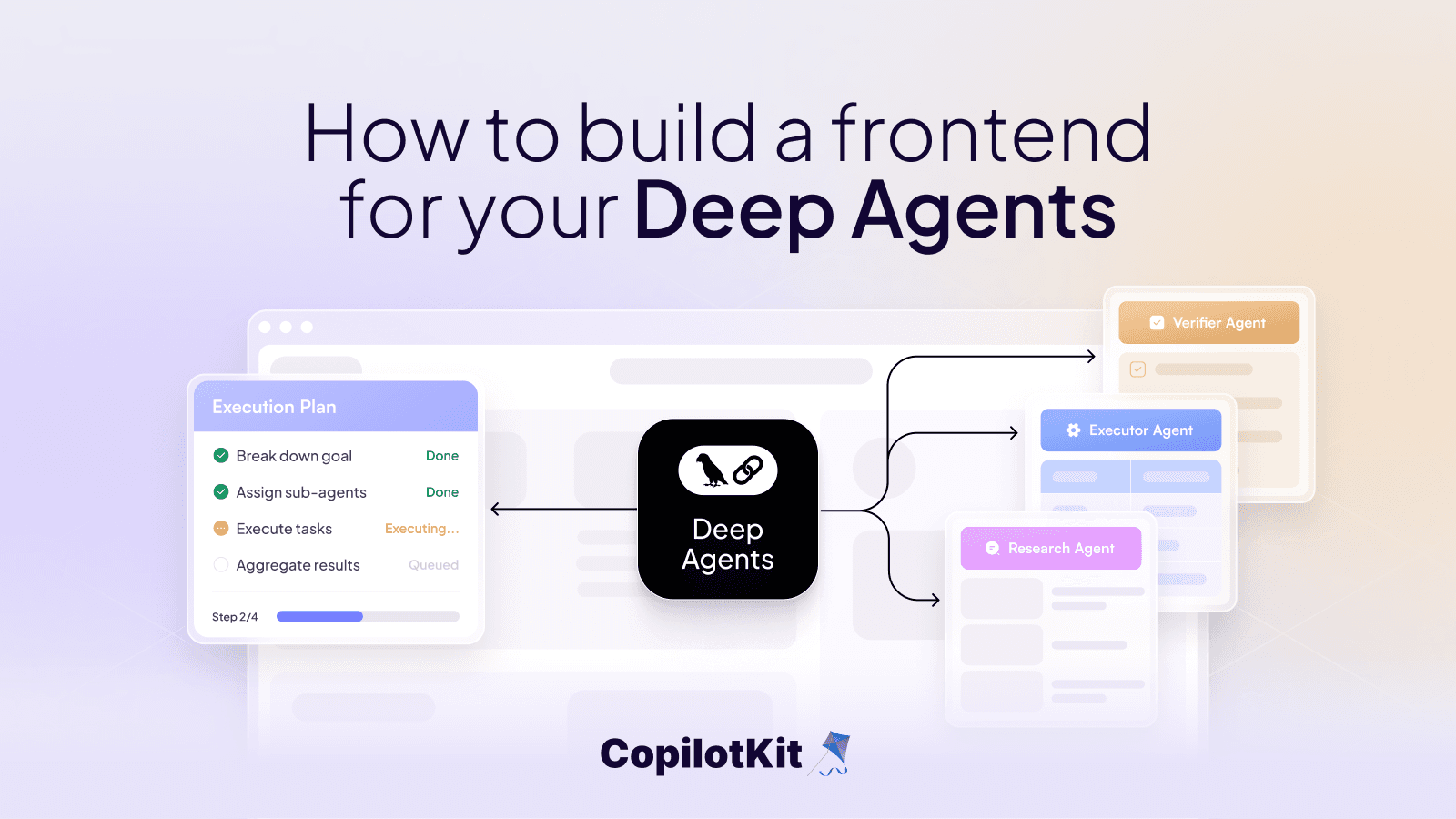

This is the role of CopilotKit. It lets you embed agent intelligence directly into your React components, so your app isn’t just a “chat with an LLM”. It’s agentic and collaborative, with features such as:

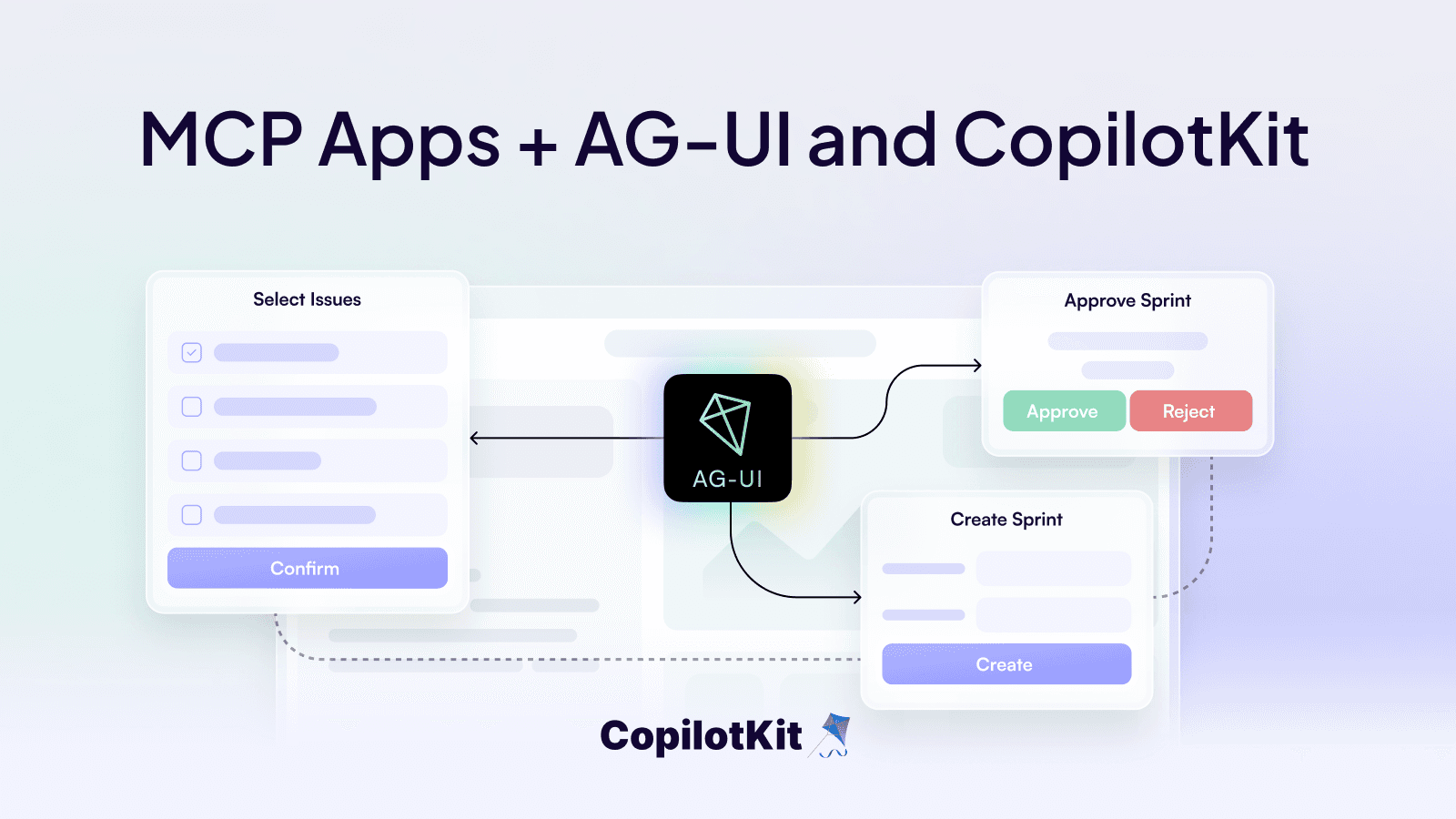

- UI integration via AG-UI: Assistants don't just chat, they make your frontend interactive

- Fullstack context: Copilots can see app state, backend data, tools, and user actions

- Human-in-the-loop: Developers define how AI suggestions appear and when users approve workflows

Let’s look at a typical pattern:

- User asks: “Show me today’s top tech news.”

- Agent calls Tavily.

- Results are streamed into a UI component, showing each article inline.

- User clicks one → agent expands it, summarizes, or fetches related papers.

Instead of copy-pasting from a chatbox, the agent is driving real interactivity in the app.
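The pattern above can be sketched in a few lines. This is not CopilotKit's API. It is a framework-neutral illustration, assuming the backend emits small event objects that a frontend component renders. `UIEvent`, `stream_articles`, `expand_article`, and the two fake helpers are hypothetical names, and the article data is placeholder content.

```python
from dataclasses import dataclass
from typing import Callable, Iterator


@dataclass
class UIEvent:
    """A hypothetical message the frontend would render as a component."""

    kind: str      # "article_card" or "article_detail"
    payload: dict


def stream_articles(
    search: Callable[[str], list[dict]], question: str
) -> Iterator[UIEvent]:
    """Yield one card per search result so the UI can render each as it arrives."""
    for position, article in enumerate(search(question)):
        yield UIEvent(
            "article_card",
            {"id": position, "title": article["title"], "url": article["url"]},
        )


def expand_article(
    cards: list[UIEvent], clicked_id: int, summarize: Callable[[str], str]
) -> UIEvent:
    """Handle a click: find the chosen card and ask the agent for a summary."""
    card = next(c for c in cards if c.payload["id"] == clicked_id)
    return UIEvent(
        "article_detail",
        {"id": clicked_id, "summary": summarize(card.payload["url"])},
    )


def fake_search(question: str) -> list[dict]:
    """Placeholder for a live search call."""
    return [
        {"title": "Example article A", "url": "https://example.com/a"},
        {"title": "Example article B", "url": "https://example.com/b"},
    ]


def fake_summarize(url: str) -> str:
    """Placeholder for an LLM summarization step."""
    return f"Summary of {url}"


cards = list(stream_articles(fake_search, "Show me today's top tech news."))
detail = expand_article(cards, clicked_id=1, summarize=fake_summarize)
print([card.payload["title"] for card in cards])
print(detail.payload["summary"])
```

Using a generator for the cards keeps the sketch close to the streaming behavior described above: the UI can draw each article as soon as it is yielded instead of waiting for the full result set.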

Together, Tavily and CopilotKit transform a basic LLM into a context-aware assistant that can fetch current information and act on it within your application.

Play with a live app using CopilotKit, LangGraph, and Tavily here.

Real-Time + Agentic UI = Interactive UI

This is what makes the combination unique. By combining real-time data (Tavily) with agentic UI (CopilotKit), you close the feedback loop:

- A CopilotKit app can call Tavily for live data and present it directly in the UI.

- Agentic UI patterns to follow:

  - Inline updates: Agent retrieves data via Tavily and populates UI components dynamically.

  - Multi-step flows: User asks a question → Agent calls Tavily → UI updates live → User interacts → Agent continues the loop

- This creates a closed loop between the user, the agent, and the live data.
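As a rough illustration of the multi-step flow, the loop can be modeled as alternating between a retrieval step and a user interaction until the user stops. This is a sketch under stated assumptions, not how CopilotKit or Tavily implement it: `run_loop`, `fetch`, and `next_user_action` are hypothetical names, and a `max_turns` cap is added here so the loop always terminates.

```python
from typing import Callable, Optional


def run_loop(
    first_question: str,
    fetch: Callable[[str], list],
    next_user_action: Callable[[dict], Optional[str]],
    max_turns: int = 5,
) -> list[dict]:
    """Alternate between agent retrieval and user interaction.

    Returns the sequence of UI states, one per turn.
    """
    ui_states: list[dict] = []
    query: Optional[str] = first_question
    while query is not None and len(ui_states) < max_turns:
        items = fetch(query)                     # agent calls the live-data tool
        ui_state = {"query": query, "items": items}
        ui_states.append(ui_state)               # UI updates with fresh results
        query = next_user_action(ui_state)       # user clicks, refines, or stops (None)
    return ui_states


# Scripted stand-ins: the "user" asks one follow-up and then stops.
follow_ups = iter(["related papers", None])
history = run_loop(
    "today's top tech news",
    fetch=lambda q: [f"result for: {q}"],
    next_user_action=lambda state: next(follow_ups),
)
for turn in history:
    print(turn["query"], "->", turn["items"])
```

Each pass through the loop uses the user's latest interaction as the next query, which is what keeps the user, the agent, and the live data in the same cycle.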

Why This Is Agentic

- Real-time retrieval grounds answers in current sources, which reduces hallucinations

- CopilotKit provides interactivity (Headless or Chat)

- Together, they enable agentic experiences where the user and agent collaborate live on fresh data.

Get Started

- Tavily Documentation - explore search, crawl, and extract APIs

- Getting Started Project - build your first in-app research assistant

Turn your app into more than a demo. Build a copilot that belongs in production.