CoAgents: Connecting AI Agents to Realtime Application Context

CoAgent: LangGraph and CopilotKit streaming an AI agent state

We are quickly learning (and re-learning) that agents perform best when they work alongside people. It's far easier to get an agent to perform 70%, 80%, or even 90% of a given task than to have it perform the task fully autonomously (see: cars).

However, to facilitate seamless human-AI collaboration, we must connect AI agents to the real-time application context: agents should be able to see what the end-user sees and to do what the end-user can do in the context of applications.

End-users should be able to monitor agents' executions - and bring them back on track when they go off the rails.

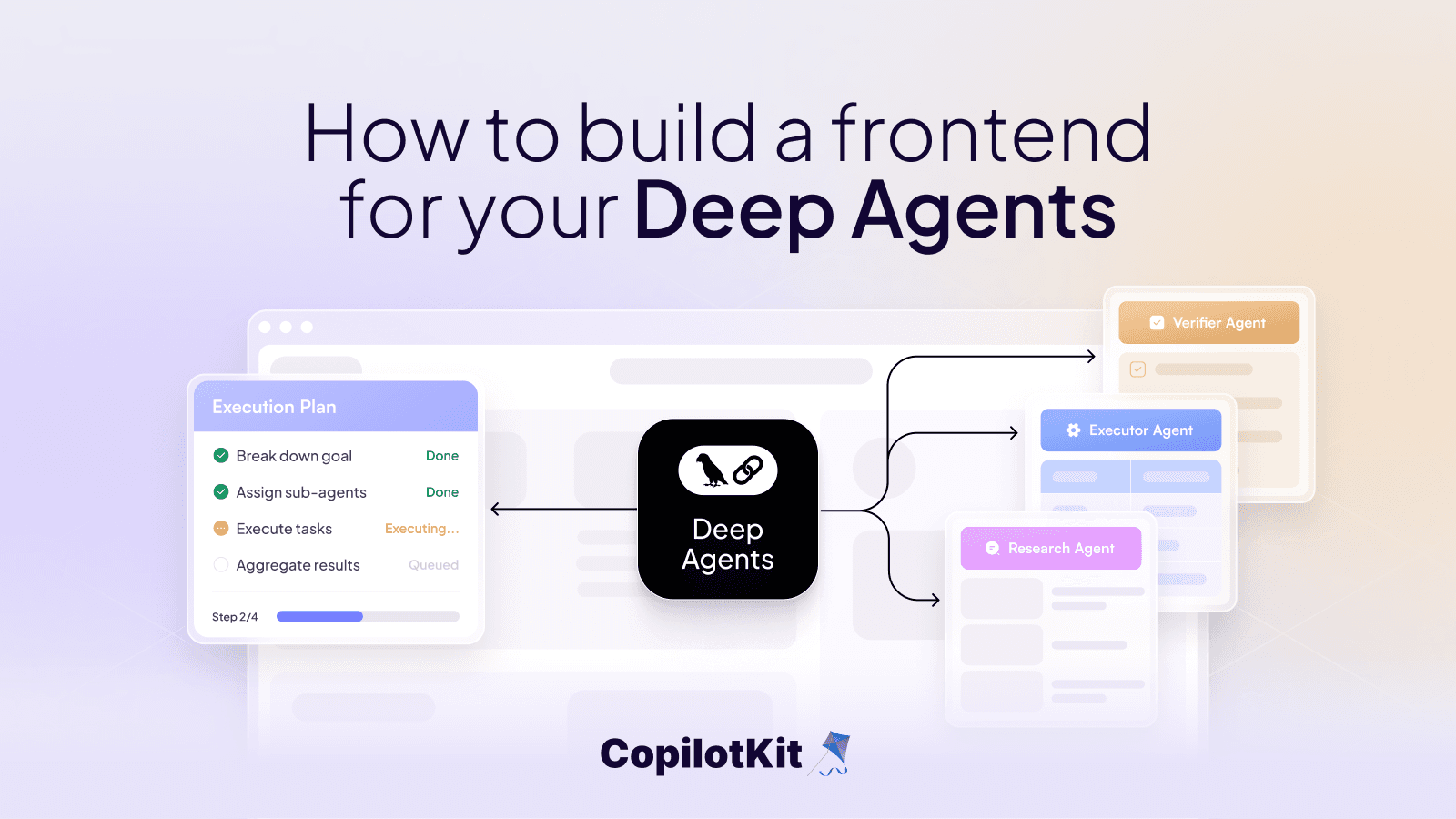

This is where CoAgents come into play: agents that expose their operations to end-users through custom UI that end-users can understand, and that end-users can steer back on track should they “go off the rails”.

Human-in-the-loop is essential for creating effective and valuable AI agents. By integrating CoAgents with LangGraph, we elevate in-app human-in-the-loop agents to a new level. This is the next focus for CopilotKit: simplifying the development process for anyone looking to harness this technology. We’re already building the infrastructure for CoAgents today and want to share our process and learnings as we go. Want early access to this technology? Sign up here

A framework for building human-in-the-loop agents

CopilotKit is the simplest way to integrate production-ready copilots into any product, with both open-source tooling and an enterprise-ready cloud platform. When we look at CoAgents, a few critical use cases crop up most frequently:

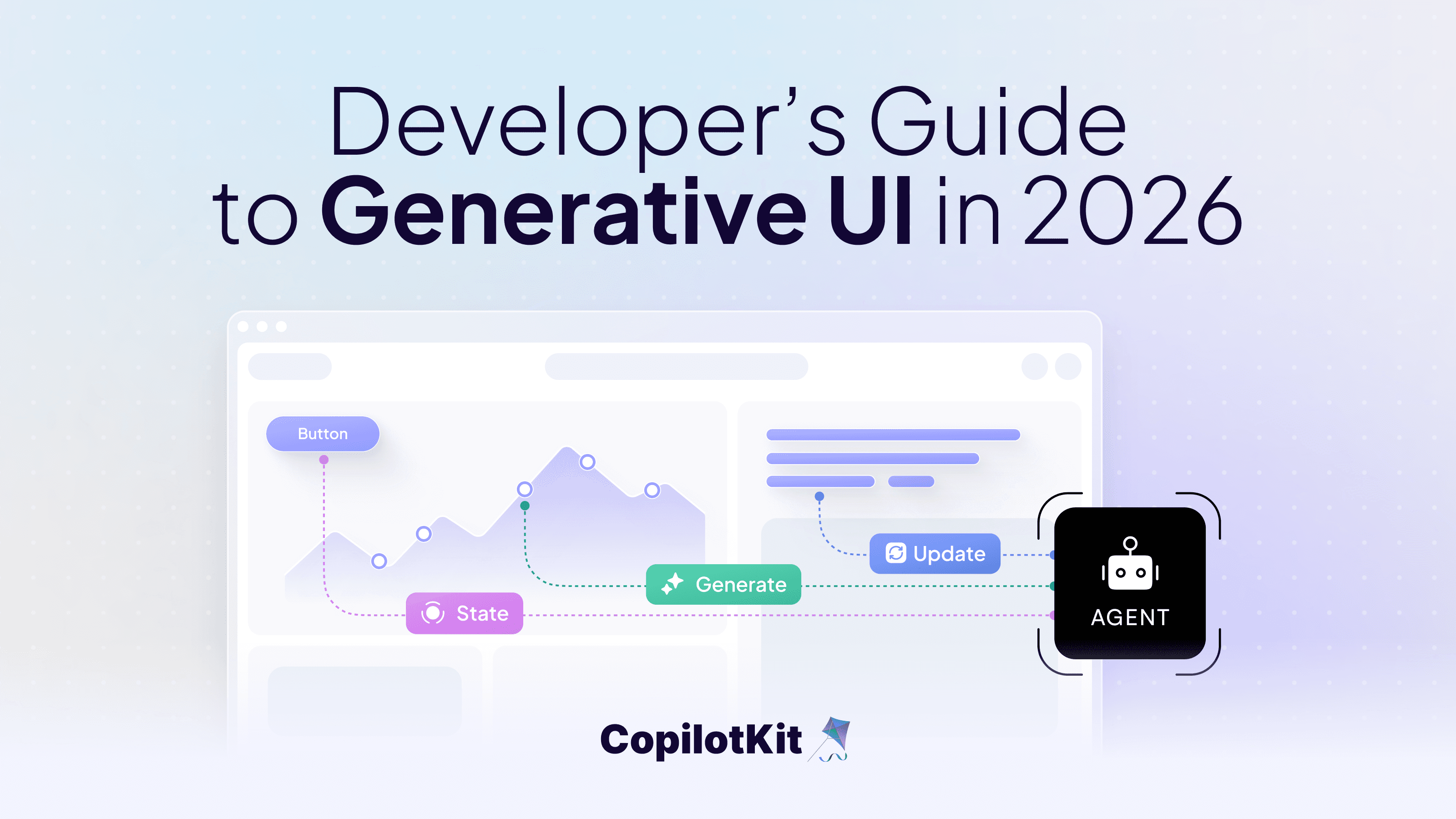

- Streaming an intermediate agent state - provides insight into the agent's work in progress, rendering any component based on the LangGraph node that it’s processing.

- Shared state between an agent, a user, and the app - allows an agent and an end-user to collaborate over the same data via bi-directional syncing between the app and agent state.

- Agent-led Q&A - allows agents to intentionally ask the user questions, in conversation and in app context, with support for responses and follow-ups via the UI. For example: “Your planned trip seems particularly expensive this week, would you like to reschedule for next week?”
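To make the first two use cases more concrete, here is a minimal Python sketch of streaming intermediate state from an agent whose state is shared with the app. It uses only the standard library and is not the LangGraph or CopilotKit API: `stream_state`, the `plan` and `estimate` nodes, and the trip fields are hypothetical names chosen for illustration.

```python
from typing import Callable, Iterator

# A node takes the current state and returns only the keys it updates.
# (Hypothetical shape for illustration, not a LangGraph or CopilotKit type.)
Node = Callable[[dict], dict]


def plan(state: dict) -> dict:
    return {"plan": f"Plan a trip to {state['destination']}"}


def estimate(state: dict) -> dict:
    # Made-up prices, purely for the example.
    return {"cost": 1200 if state["destination"] == "Paris" else 800}


def stream_state(
    nodes: list[tuple[str, Node]], state: dict
) -> Iterator[tuple[str, dict]]:
    """Run each node in order and yield (node_name, snapshot) after it finishes."""
    for name, node in nodes:
        # Update the shared dict in place, so edits made by the app
        # between steps are visible to the nodes that run later.
        state.update(node(state))
        # Yield a copy so the UI receives a stable snapshot to render.
        yield name, dict(state)


shared = {"destination": "Paris"}
seen = []
for node_name, snapshot in stream_state([("plan", plan), ("estimate", estimate)], shared):
    seen.append(node_name)  # a UI could pick a component based on node_name
    if node_name == "plan":
        # The user edits the shared state while the agent is mid-run.
        shared["destination"] = "Lisbon"
```

Because the generator pauses after every node, the app gets a chance to render progress and to change the shared state before the next node runs. In this sketch the `estimate` node sees the user's edited destination rather than the one the run started with.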

At CopilotKit, we are dedicated to creating a platform that simplifies the development of copilots by providing developers with the most powerful tools at their disposal.

Our next step in this journey is to enable this through CoAgents, beginning with the introduction of the intermediate agent state.

Ready to walk through some of this in action?

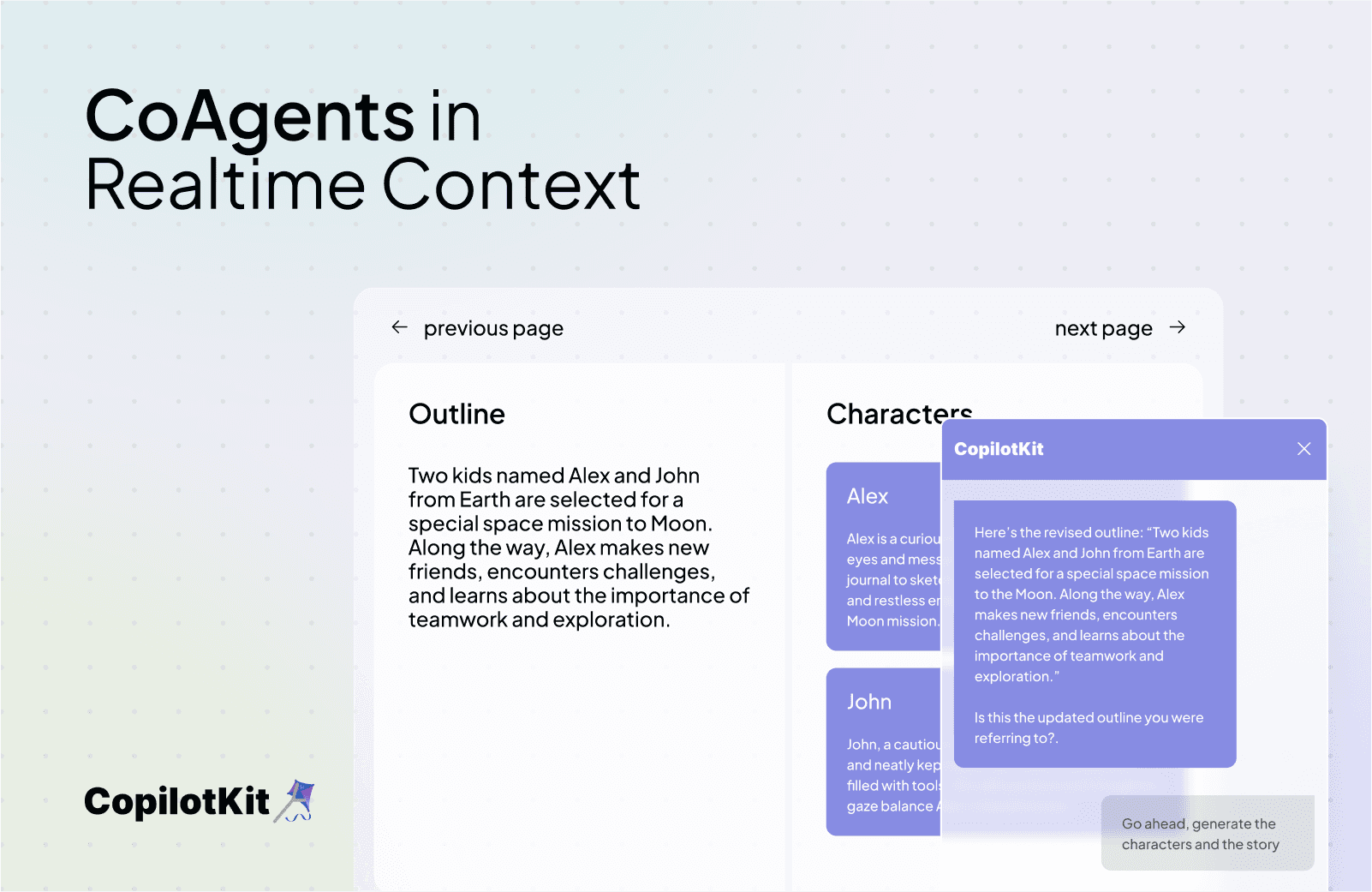

Build an AI CoAgent children's book with LangGraph and CopilotKit

Let's see what a streaming agent state looks like in our storybook app. We've built an AI agent that helps you write a children's storybook: the agent chats with the user to develop a story outline, generate characters, outline chapters, and generate image descriptions, which we then use to create the images with DALL-E 3.
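As a rough illustration of the kind of state such an agent might stream, the sketch below models the four steps named above as fields on a single state object and reports which step is still pending. The field names and the `current_stage` helper are assumptions made for this example, not the storybook app's actual schema.

```python
from dataclasses import dataclass, field

# Stage order follows the steps described above; the names are illustrative.
STAGES = ["outline", "characters", "chapters", "image_descriptions"]


@dataclass
class StoryState:
    outline: str = ""
    characters: list[str] = field(default_factory=list)
    chapters: list[str] = field(default_factory=list)
    image_descriptions: list[str] = field(default_factory=list)


def current_stage(state: StoryState) -> str:
    """Return the first stage whose field is still empty, or 'done'."""
    for stage in STAGES:
        if not getattr(state, stage):
            return stage
    return "done"


state = StoryState(outline="A shy dragon learns to sing")
print(current_stage(state))
```

A UI could use a value like this to decide which component to show while the agent works, for example a character list once the outline is filled in.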

This first version of CoAgents, with support for LangGraph, will be released fully open source - sign up for first access here.