The agent landscape can easily feel like alphabet soup: LLMs, RAG, MCP, A2A, AG-UI, LangGraph, Tavily, and more.

How do you make sense of it all?

We've put together this guide for anyone onboarding into the agent ecosystem and trying to understand how agents work.

Together, let's simplify everything so you can navigate the AI agent ecosystem with confidence.

What are AI Agents?

We like to think of them as mini-coworkers embedded in your product that can complete complex tasks.

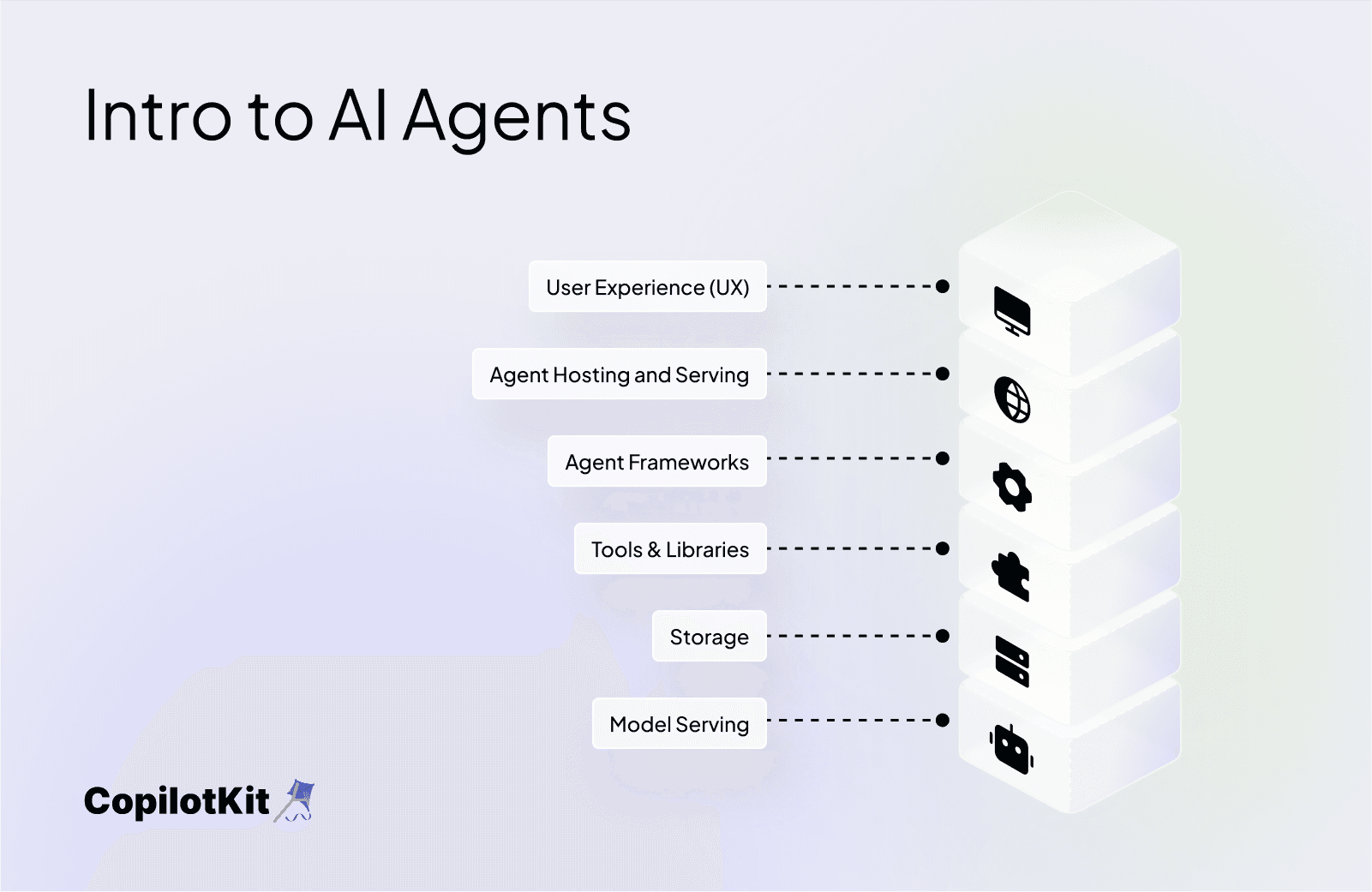

Visualizing the layers, and where agents fit in, may be one of the most important steps in understanding how all of the pieces fit together.

Consider four layers:

- LLM: This is the brain of the agent

- Frameworks: Agent memory, planning and recursion

- Tools: These extend the agent's capabilities via APIs and services

- Application or UX layer: This is where users interact with agents
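To make the four layers concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: `fake_llm` is a hypothetical stand-in for a real model call, the `CALL`/`FINAL`/`TOOL_RESULT` text format is invented, and `run_agent` is a toy loop rather than any particular framework's API.

```python
from typing import Callable, Dict, List

# Layer 1 - LLM: a stand-in "brain". A real agent would call a model API here.
def fake_llm(prompt: str) -> str:
    """Hypothetical stub: asks for a tool once, then answers using its result."""
    if "TOOL_RESULT" not in prompt:
        return "CALL add 2 3"
    result = prompt.rsplit("TOOL_RESULT ", 1)[1]
    return f"FINAL The sum is {result}"

# Layer 3 - Tools: plain functions the agent is allowed to invoke.
TOOLS: Dict[str, Callable[..., str]] = {
    "add": lambda a, b: str(int(a) + int(b)),
}

# Layer 2 - Framework: keeps memory and loops until the model gives a final answer.
def run_agent(task: str, llm: Callable[[str], str] = fake_llm, max_steps: int = 5) -> str:
    memory: List[str] = [f"TASK {task}"]
    for _ in range(max_steps):
        reply = llm("\n".join(memory))
        if reply.startswith("FINAL "):
            return reply[len("FINAL "):]
        # Invented reply format: "CALL <tool name> <arg> <arg> ..."
        _, name, *args = reply.split()
        memory.append(f"TOOL_RESULT {TOOLS[name](*args)}")
    return "Stopped: step limit reached"

# Layer 4 - Application/UX: whatever surface the user actually touches.
if __name__ == "__main__":
    print(run_agent("What is 2 + 3?"))
```

The step limit is there because a loop driven by model output needs a hard stop in case the model never produces a final answer.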

Think of a triangle with three points, as the agent stack.

- MCP: Giving agents contexts and tools

- A2A or ACP: Agents communicating with agents

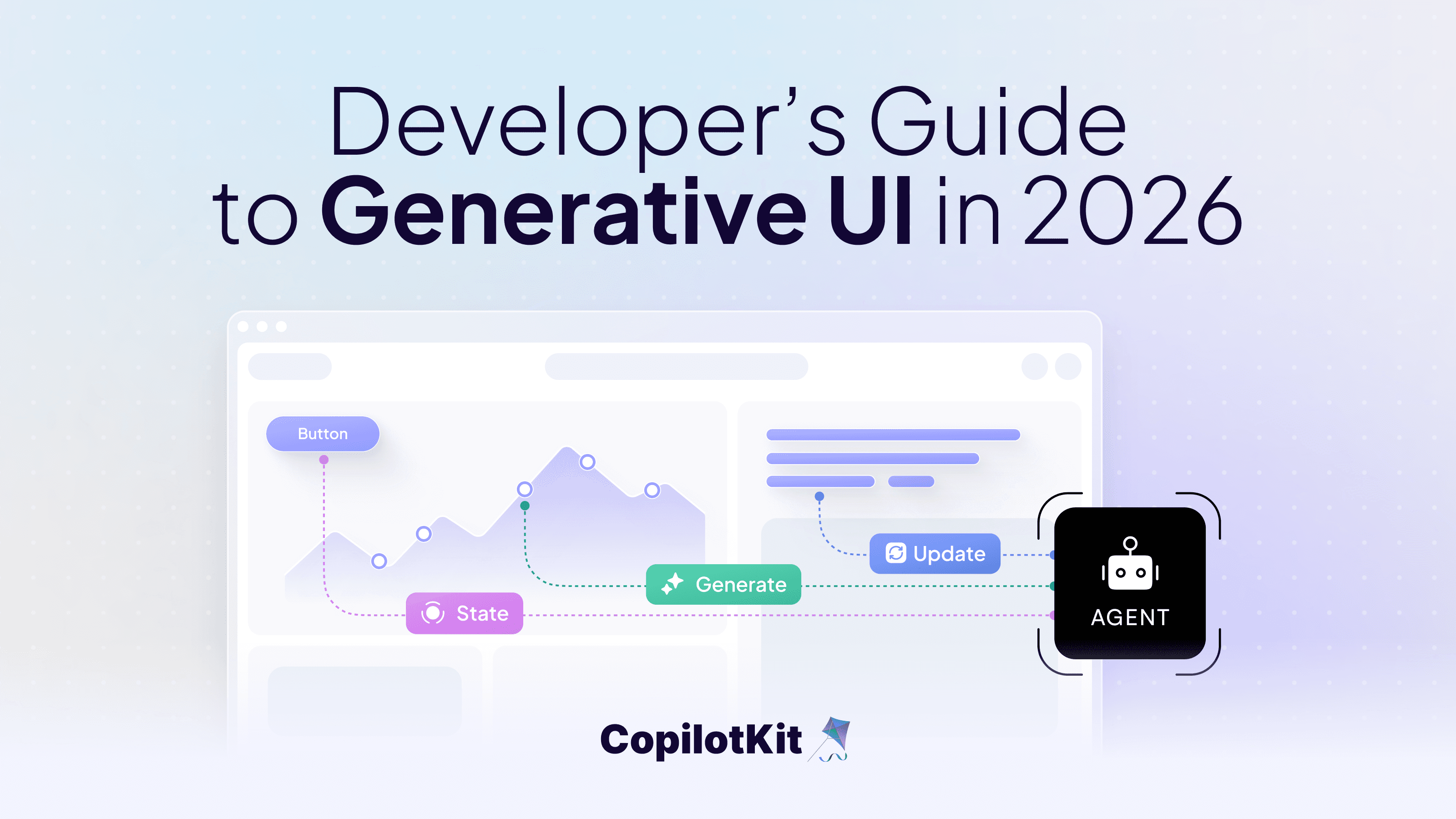

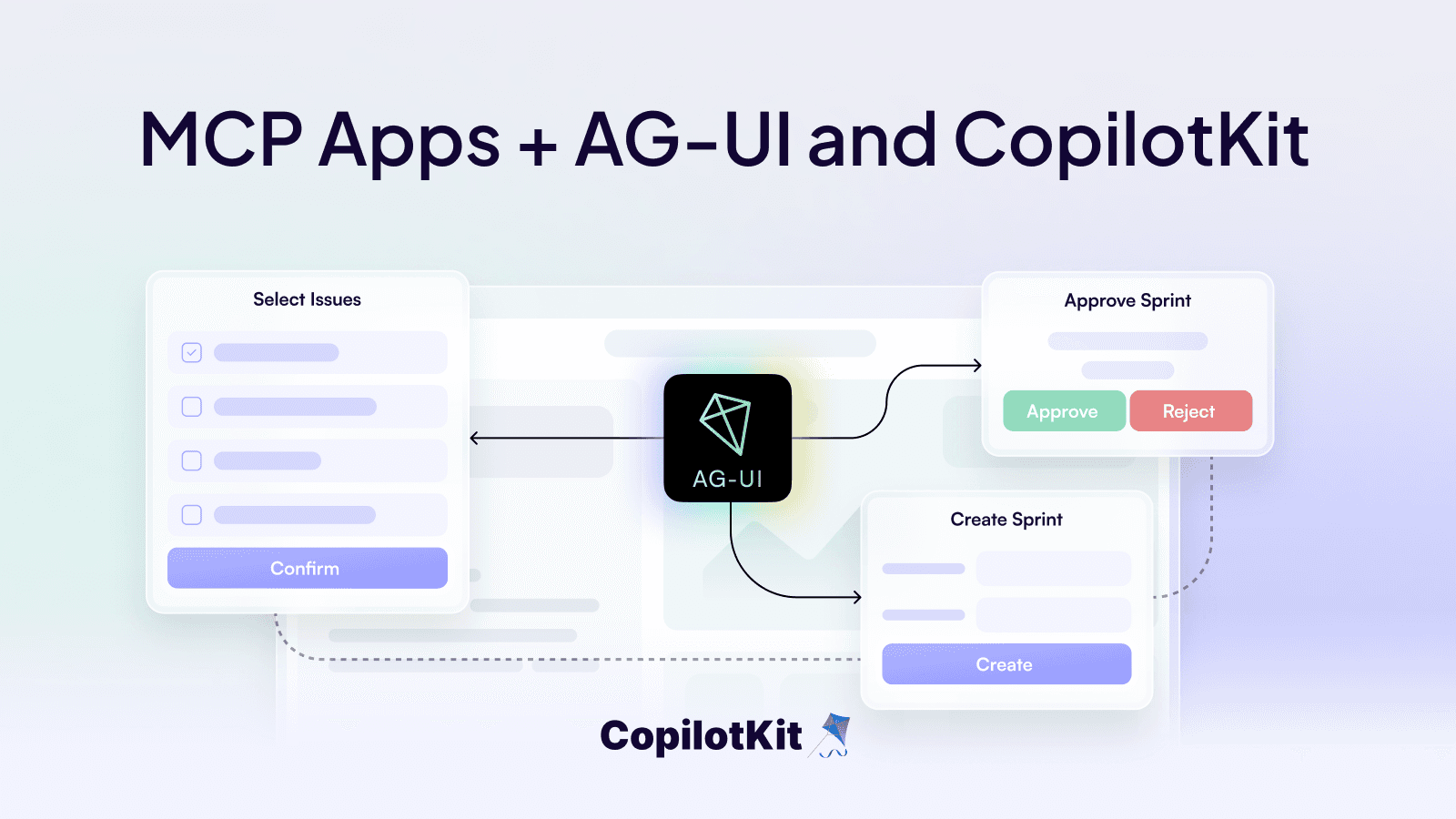

- AG-UI: Bringing agents to the frontend

What is AG-UI?

AG-UI takes agents from backend automation to user-facing applications and brings structure, interactivity, standardization, and reactivity to the agent-powered frontend.

The next question is, what can you build with agents?

Backend Automations:

- Trigger agents via API or cron for background workflows

- Replace scripts with dynamic decision-making logic
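As a rough sketch of what a backend automation could look like, the Python below exposes one entry point that a cron job or HTTP handler might call. The ticket shape, the action names, and `stub_decide` are all hypothetical; in a real setup the decision step would be a model call rather than the keyword check used here as a placeholder.

```python
import json
from typing import Callable, Dict, List

Ticket = Dict[str, str]

def stub_decide(ticket: Ticket) -> str:
    """Hypothetical placeholder for an LLM call that picks the next action."""
    text = ticket["body"].lower()
    if "refund" in text:
        return "escalate_to_billing"
    if "password" in text:
        return "send_reset_link"
    return "leave_for_human"

def run_background_job(
    tickets: List[Ticket],
    decide: Callable[[Ticket], str] = stub_decide,
) -> List[Dict[str, str]]:
    """Entry point for a scheduler or API handler; no UI is involved."""
    return [{"id": t["id"], "action": decide(t)} for t in tickets]

if __name__ == "__main__":
    # A scheduler such as cron could run this file on a fixed interval.
    sample = [
        {"id": "T1", "body": "I forgot my password"},
        {"id": "T2", "body": "Please refund my last order"},
    ]
    print(json.dumps(run_background_job(sample), indent=2))
```

Passing `decide` as a parameter keeps the job itself unchanged when the placeholder is swapped for a real agent.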

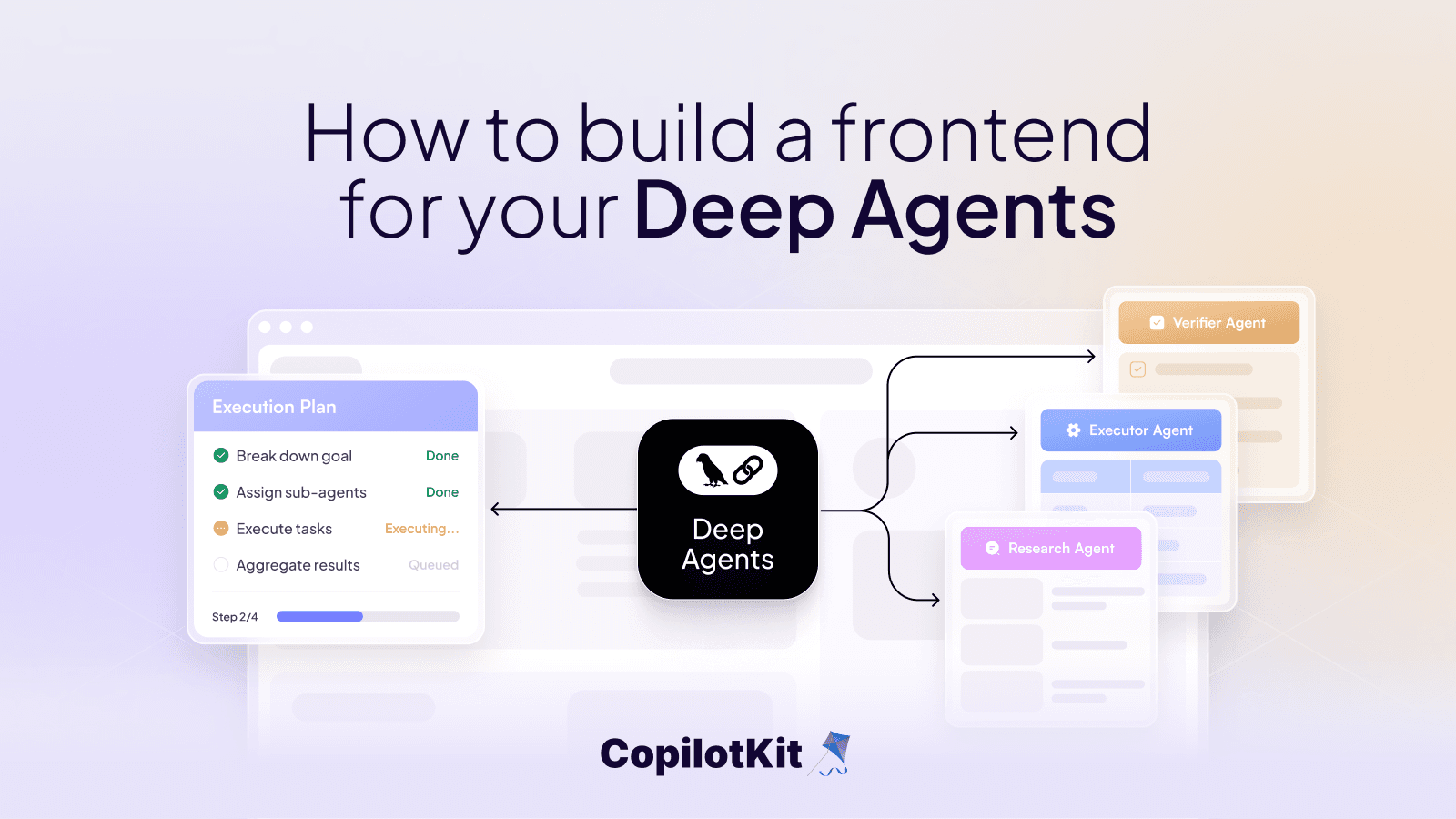

Fullstack Agentic Applications:

- Agents embedded directly in your UI, interacting live with users

- Real-time reasoning + frontend control via AG-UI or a similar protocol
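One way to picture "real-time reasoning + frontend control" is a backend that streams small typed events and a frontend that folds them into UI state. The Python sketch below illustrates only that general idea. The event names (`run_started`, `text_delta`, `ui_action`, `run_finished`) and fields are invented for this example and are not the AG-UI wire format.

```python
from typing import Any, Dict, Iterable, Iterator

def agent_events(answer: str) -> Iterator[Dict[str, str]]:
    """Hypothetical backend: emit typed events instead of one final blob."""
    yield {"type": "run_started"}
    for word in answer.split():
        yield {"type": "text_delta", "text": word + " "}
    # The agent can also ask the UI to do something, not just show text.
    yield {"type": "ui_action", "name": "highlight", "target": "invoice-42"}
    yield {"type": "run_finished"}

def render(events: Iterable[Dict[str, str]]) -> Dict[str, Any]:
    """Hypothetical frontend reducer: fold the event stream into UI state."""
    state: Dict[str, Any] = {"text": "", "actions": [], "done": False}
    for event in events:
        if event["type"] == "text_delta":
            state["text"] += event["text"]
        elif event["type"] == "ui_action":
            state["actions"].append((event["name"], event["target"]))
        elif event["type"] == "run_finished":
            state["done"] = True
    return state

if __name__ == "__main__":
    print(render(agent_events("Invoice is overdue")))
```

Because each event is small and typed, the UI can update as text arrives and react to agent-requested actions without waiting for the full response.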

Four Concepts Worth Mastering:

Retrieval-Augmented Generation (RAG):

- An approach that combines the strengths of information retrieval and generative language models: relevant documents are retrieved first, then passed to the model to ground its response.
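Here is a toy sketch of the retrieve-then-generate idea in Python. It makes some loud assumptions: the documents are a hard-coded list, retrieval is plain word overlap rather than the embedding search most real systems use, and the final model call is left out, so the output is just the prompt a model would receive.

```python
import re
from typing import List, Set

DOCS = [
    "MCP gives agents context and tools.",
    "A2A lets agents communicate with other agents.",
    "AG-UI brings agents to the frontend.",
]

def tokenize(text: str) -> Set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, docs: List[str], k: int = 1) -> List[str]:
    """Rank documents by how many words they share with the query."""
    query_words = tokenize(query)
    ranked = sorted(docs, key=lambda d: len(query_words & tokenize(d)), reverse=True)
    return ranked[:k]

def build_prompt(query: str, docs: List[str], k: int = 1) -> str:
    """Augment the question with the retrieved context before generation."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

if __name__ == "__main__":
    print(build_prompt("Which protocol brings agents to the frontend?", DOCS))
```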

Context Engineering:

- Designing systems that decide what information an AI model sees before it generates a response.
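A small Python sketch of one possible context-selection rule: keep the highest-priority snippets that fit in a fixed budget. The priority scores, the sample snippets, and the use of word count as a stand-in for tokens are all assumptions made for illustration.

```python
from typing import List, Tuple

def select_context(candidates: List[Tuple[int, str]], budget_words: int) -> List[str]:
    """Keep the highest-priority snippets that fit within a rough word budget."""
    chosen: List[str] = []
    used = 0
    for _priority, text in sorted(candidates, key=lambda c: c[0], reverse=True):
        cost = len(text.split())  # crude proxy for token count
        if used + cost <= budget_words:
            chosen.append(text)
            used += cost
    return chosen

if __name__ == "__main__":
    candidates = [
        (3, "User is on the Pro plan"),
        (1, "Long unrelated changelog entry from last year about fonts"),
        (2, "Last message asked about invoices"),
    ]
    for snippet in select_context(candidates, budget_words=12):
        print(snippet)
```

With a budget of 12 words, the low-priority changelog entry is dropped while the two more relevant snippets make it into what the model sees.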

Prompt Engineering:

- Designing input prompts to effectively interact with AI models, especially LLMs like GPT.

Vibe Coding:

- Using AI to generate, refine, and debug code.

The more you master these layers and concepts, the more control you have over agentic behavior!

Get your hands on these tools and start building!

Let's answer why the agent stack matters.

Autonomous agents will:

- Replace entire SaaS workflows

- Supercharge dev productivity

- Build entirely new UX paradigms

The agent stack and ecosystem turn raw AI into usable, interactive products by connecting reasoning (LLMs), memory and planning (frameworks), actions (tools), and user interfaces (apps).